The video discusses an Anthropic paper that uses a method called circuit tracing to examine the internal workings of large language models and how they carry out tasks such as poetry generation and multi-step reasoning. It highlights the models' emergent capabilities and what the paper reveals about their internal processing, their multilingual understanding, and the influence of training data on their outputs.

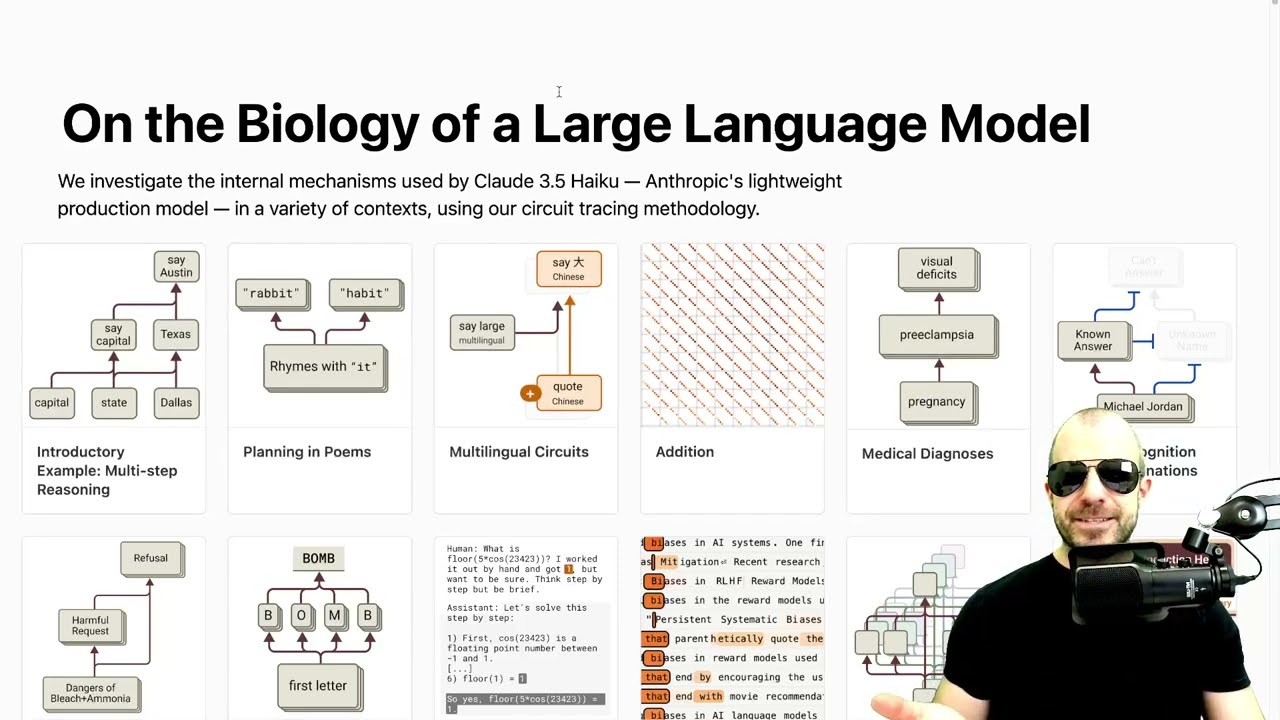

The video discusses a paper by Anthropic titled “On the Biology of a Large Language Model,” which explores the internal workings of transformer language models. The speaker emphasizes that, unlike traditional machine learning models whose mechanisms are well understood, large language models exhibit emergent capabilities that were never explicitly programmed. The paper investigates how these models accomplish tasks such as poetry generation, arithmetic, and multilingual understanding using circuit tracing, a method that lets researchers identify which internal features activate on a given prompt and how those features influence one another.

Circuit tracing involves building a replacement model around a cross-layer transcoder, which mimics the transformer's computation while exposing more interpretable intermediate signals. The transcoder is trained to reproduce the outputs of the transformer's MLP layers, with a sparsity penalty that keeps only a few features active for any given input, so that each feature is easier to interpret on its own. The resulting attribution graphs show how these features feed into one another and into the model's output, allowing researchers to trace the model's internal reasoning steps.
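To make the training objective concrete, here is a minimal sketch of a single-layer transcoder in plain Python. It is a simplification, not the cross-layer transcoder the paper uses: the class name `ToyTranscoder`, the ReLU encoder, the L1 penalty, the random initialization, and `sparsity_coeff` are all illustrative assumptions, and the training loop is omitted. It shows only the objective: reconstruct a layer's MLP output from sparse, non-negative feature activations.

```python
import math
import random

# Minimal single-layer transcoder sketch. Assumptions (not from the paper):
# ReLU encoder, L1 sparsity penalty, random init, no training loop.


def matvec(matrix, vector):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class ToyTranscoder:
    def __init__(self, d_model, n_features, seed=0):
        rng = random.Random(seed)
        scale = 1.0 / math.sqrt(d_model)
        # Encoder: reads the layer's input (residual stream) into feature pre-activations.
        self.w_enc = [[rng.gauss(0.0, scale) for _ in range(d_model)]
                      for _ in range(n_features)]
        self.b_enc = [0.0] * n_features
        # Decoder: writes feature activations back out as a predicted MLP output.
        self.w_dec = [[rng.gauss(0.0, scale) for _ in range(n_features)]
                      for _ in range(d_model)]

    def encode(self, x):
        pre = [p + b for p, b in zip(matvec(self.w_enc, x), self.b_enc)]
        return [max(0.0, p) for p in pre]  # ReLU: features are zero or positive

    def decode(self, features):
        return matvec(self.w_dec, features)

    def loss(self, x, mlp_out, sparsity_coeff=0.01):
        """Mean squared reconstruction error plus an L1 penalty on activations."""
        features = self.encode(x)
        recon = self.decode(features)
        mse = sum((r - t) ** 2 for r, t in zip(recon, mlp_out)) / len(mlp_out)
        l1 = sum(features)  # activations are non-negative, so this is the L1 norm
        return mse + sparsity_coeff * l1, features


tc = ToyTranscoder(d_model=4, n_features=8)
total, feats = tc.loss([0.5, -1.0, 0.25, 2.0], [0.1, 0.0, -0.3, 0.7])
active = sum(f > 0 for f in feats)
print(f"loss={total:.4f}, active features={active}/{len(feats)}")
```

In a real setup the encoder and decoder weights would be fit by gradient descent on this loss over many recorded activations; raising `sparsity_coeff` trades reconstruction accuracy for fewer active features.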

The video highlights several examples from the paper, including multi-step reasoning and poetry generation. In the multi-step reasoning example, the model is asked for the capital of the state containing Dallas, which requires recognizing that Dallas is in Texas and then identifying Austin as the capital of Texas. The attribution graph shows features for Dallas activating features for Texas, which in turn help produce the answer “Austin.” This indicates that the model represents the intermediate step internally rather than jumping straight to the answer, suggesting that it performs genuine multi-step reasoning, albeit alongside some shortcuts based on statistical associations.
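An attribution graph can be treated as a weighted directed graph. Under the simplifying assumption that edges act linearly, a node's influence on the output is the sum, over all paths, of the product of edge weights along each path. The sketch below applies that assumption to a hand-made graph for the Dallas prompt; the node names and edge weights are invented for illustration and are not values from the paper. It separates a two-hop route through a Texas feature from a direct shortcut edge.

```python
# Toy attribution graph for "the capital of the state containing Dallas".
# Node names and edge weights are invented for illustration, not taken from the paper.
# Assumption: edges act linearly, so a path's contribution is the product of its weights.
EDGES = {
    "Dallas (token)": {"Texas": 0.8, "say Austin": 0.2},  # two-hop route and a shortcut
    "capital (token)": {"say a capital": 0.9},
    "Texas": {"say Austin": 0.7},
    "say a capital": {"say Austin": 0.5},
    "say Austin": {"output: Austin": 1.0},
}


def paths(node, target, prefix=()):
    """Yield (path, weight) for every path from node to target in the DAG."""
    prefix = prefix + (node,)
    if node == target:
        yield prefix, 1.0
        return
    for child, weight in EDGES.get(node, {}).items():
        for path, rest in paths(child, target, prefix):
            yield path, weight * rest


routes = dict(paths("Dallas (token)", "output: Austin"))
for path, weight in routes.items():
    print(" -> ".join(path), round(weight, 2))
print("total influence:", round(sum(routes.values()), 2))
```

In this toy graph most of Dallas's influence flows through the intermediate Texas node, the pattern read as multi-step reasoning, while the direct edge plays the role of a statistical shortcut.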

In the poetry generation example, the speaker discusses how the model plans rhymes. The analysis shows that features for candidate rhyming words activate before the model writes the line that must end in one of them, indicating that it has the end word in mind before composing the rest of the line. This points to a degree of planning: the model settles on a rhyme in advance and then generates text that leads up to it. The video also covers intervention experiments in which suppressing these planned-word features changes how the model completes the line, supporting the interpretation drawn from the attribution graphs.
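An intervention experiment can be pictured as editing one feature's activation and recomputing the output. The toy below assumes the line's end word is read out linearly from two hypothetical "planned word" features; the feature names, readout weights, and activations are all invented for illustration. Scaling a feature to zero (an ablation) flips which end word wins.

```python
# Toy feature-suppression experiment. All names and numbers are invented for
# illustration. Assumption: the end word is read out linearly from
# "planned word" feature activations.
READOUT = {
    # feature -> contribution to each candidate end word's score
    "plans 'rabbit'": {"rabbit": 2.0, "habit": 0.3},
    "plans 'habit'": {"rabbit": 0.2, "habit": 1.5},
}


def end_word(activations, suppress=None, scale=0.0):
    """Return the top-scoring end word, optionally rescaling one feature (0.0 = ablate)."""
    acts = dict(activations)  # copy so the caller's activations are not mutated
    if suppress is not None:
        acts[suppress] *= scale
    scores = {}
    for feature, act in acts.items():
        for word, weight in READOUT[feature].items():
            scores[word] = scores.get(word, 0.0) + act * weight
    return max(scores, key=scores.get), scores


acts = {"plans 'rabbit'": 1.0, "plans 'habit'": 0.6}
print("baseline:", end_word(acts)[0])
print("suppress 'rabbit' feature:", end_word(acts, suppress="plans 'rabbit'")[0])
```

A scale above 1.0 amplifies a feature instead, which mirrors the idea of steering the model toward a different planned word.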

Finally, the video explores the model's multilingual capabilities, asking whether it processes each language separately or through shared features. The findings indicate that while some features are language-specific, many are language-agnostic, which supports cross-linguistic understanding. The model's internal processing is most abstract in the middle layers, with language-specific features concentrated at the input and output stages. The speaker concludes that although the model is multilingual, English appears to be privileged in its output, reflecting the model's training data. The discussion ends with an invitation to join further discussions of the paper in a community setting.
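One way to quantify "shared versus language-specific" is to compare which features are active for the same prompt in different languages, layer by layer. The sketch below computes the mean pairwise Jaccard overlap (intersection over union) of active-feature sets. The feature IDs and the early/middle/late grouping are hypothetical, chosen only to mirror the pattern described above, with the highest overlap in the middle layers; they are not measurements from the paper.

```python
from itertools import combinations

# Hypothetical active-feature IDs per layer group for the same prompt in three
# languages. Invented for illustration; not measurements from the paper.
ACTIVE = {
    "early": {"en": {1, 2, 3, 4}, "fr": {1, 5, 6, 7}, "zh": {1, 8, 9, 10}},
    "middle": {"en": {20, 21, 22, 23}, "fr": {20, 21, 22, 24}, "zh": {20, 21, 23, 24}},
    "late": {"en": {40, 41, 42}, "fr": {40, 43, 44}, "zh": {40, 45, 46}},
}


def jaccard(a, b):
    """Intersection over union of two feature sets (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def mean_pairwise_overlap(by_language):
    """Average Jaccard overlap over every pair of languages."""
    pairs = list(combinations(by_language.values(), 2))
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


for layer, by_language in ACTIVE.items():
    print(f"{layer:>6}: {mean_pairwise_overlap(by_language):.2f}")
```

Jaccard overlap is one simple choice of similarity measure here; it ignores activation magnitudes and only asks whether the same features fire.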