The video discusses Anthropic’s research on interpreting large language models with attribution graphs built on a cross-layer transcoder. In the speaker’s reading, the results show models relying on heuristic, parallel pathways and surface correlations rather than explicit reasoning. The speaker critiques the notion that these models possess deep internal understanding, arguing that their behaviors are largely driven by training dynamics and simple feature activations, while granting that the research provides valuable interpretability tools.

The video analyzes Anthropic’s research on the “biology of a large language model,” focusing on a technique called attribution graphs. Anthropic trained a replacement model, a cross-layer transcoder, that is easier to interpret than the original transformer. The transcoder adds cross-layer connections and enforces sparsity, so that only a small number of features are active at once and their influence on later layers can be followed. Because it is trained to match the original model’s outputs and intermediate representations, researchers can trace which features activate and how they influence one another, and use that trace as a proxy for the original model’s internal computation.
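The two properties named above, sparsity and cross-layer connections, can be illustrated with a toy forward pass. This is a minimal sketch under stated assumptions, not Anthropic’s implementation: the top-k ReLU used for sparsity, the random weights, and every name (`clt_forward`, `encoders`, `decoders`, `top_k_relu`) are hypothetical choices made for illustration. In a real setup the weights would be trained so that the reconstructed outputs match the original model.

```python
import random

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def top_k_relu(z, k):
    """Apply ReLU, then zero all but the k largest activations.
    Assumption: top-k stands in for whatever sparsity mechanism is really used."""
    z = [max(0.0, v) for v in z]
    keep = set(sorted(range(len(z)), key=lambda i: z[i], reverse=True)[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(z)]

def clt_forward(residuals, encoders, decoders, k):
    """Toy cross-layer transcoder forward pass.

    residuals[l]         : vector the features of layer l read from
    encoders[l]          : (n_features x d_model) matrix for layer l
    decoders[(src, dst)] : (d_model x n_features) matrix that writes the
                           features of layer src into the output of layer dst >= src
    """
    n_layers, d_model = len(residuals), len(residuals[0])
    # Sparse features: at most k active per layer.
    feats = [top_k_relu(matvec(encoders[l], residuals[l]), k) for l in range(n_layers)]
    outputs = []
    for dst in range(n_layers):
        out = [0.0] * d_model
        # Cross-layer part: every earlier (or same) layer contributes to layer dst.
        for src in range(dst + 1):
            contrib = matvec(decoders[(src, dst)], feats[src])
            out = [o + c for o, c in zip(out, contrib)]
        outputs.append(out)
    return feats, outputs

def rand_matrix(rows, cols):
    """Random weights; a trained transcoder would learn these instead."""
    return [[random.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
n_layers, d_model, n_features, k = 3, 4, 8, 2
residuals = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(n_layers)]
encoders = [rand_matrix(n_features, d_model) for _ in range(n_layers)]
decoders = {(s, d): rand_matrix(d_model, n_features)
            for s in range(n_layers) for d in range(s, n_layers)}

feats, outputs = clt_forward(residuals, encoders, decoders, k)
print([sum(v > 0 for v in f) for f in feats])  # active features per layer
```

Because each layer’s output is a sum of contributions from a few active features, every contribution can be attributed to a specific feature in a specific layer, which is the property that makes tracing influences tractable.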

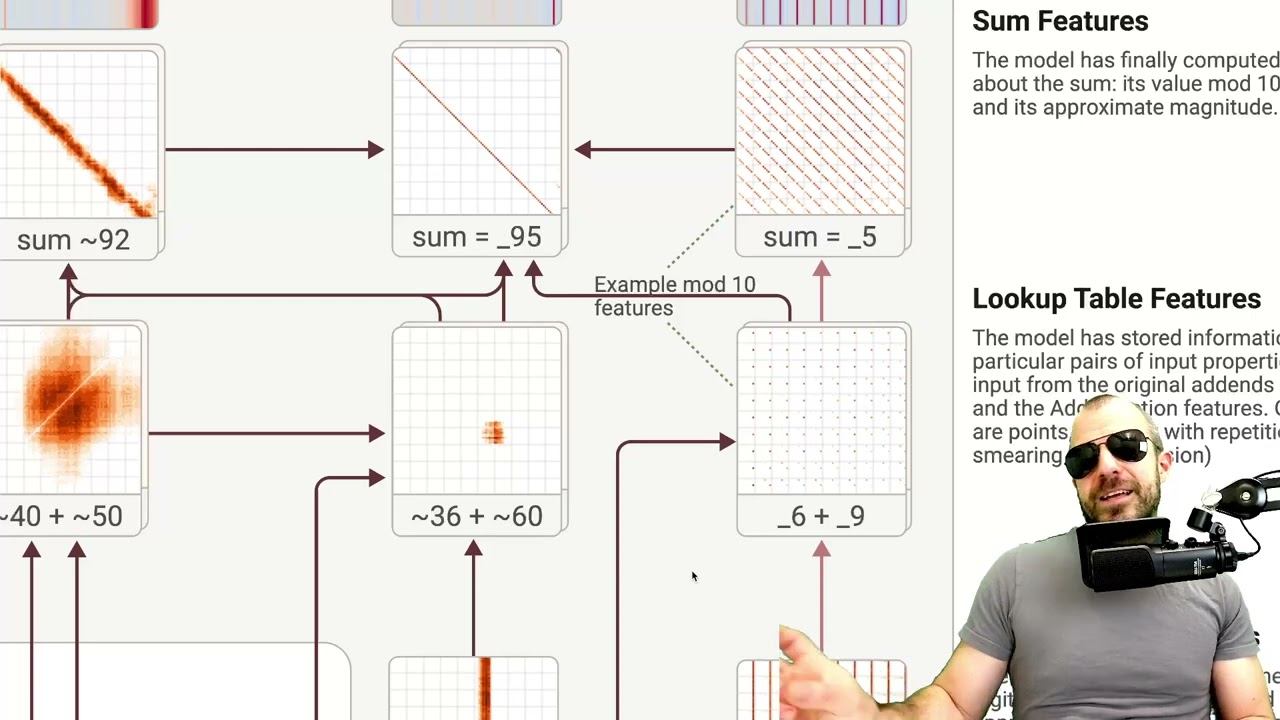

The speaker explores how the model performs tasks like addition, showing that it activates multiple parallel pathways rather than following a single, explicit algorithm. Features tied to properties of the operands, such as their approximate size or whether they end in a particular digit, activate simultaneously and jointly influence the final output. The model appears to use approximate, heuristic pathways, some of which resemble modulus calculations, rather than explicit arithmetic operations. This suggests that the model relies on features and patterns learned during training rather than step-by-step calculation. It also raises the question of whether these pathways genuinely reflect the model’s internal computation or are artifacts of the interpretability method.
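The idea of parallel pathways can be made concrete with a toy example. The sketch below is an illustration only, not the circuit found in the model: one pathway produces a coarse size estimate, another tracks only the last digit via a modulus, and the two are combined at the end. The function names and the choice to round each operand to the nearest multiple of 5 are assumptions, picked so that the two pathways together pin down a single answer.

```python
def magnitude_pathway(a, b):
    """Coarse size estimate: round each operand to the nearest multiple of 5.
    Each rounding is off by at most 2, so the estimate is within 4 of a + b.
    (Assumed precision, chosen for illustration.)"""
    round5 = lambda x: 5 * ((x + 2) // 5)
    return round5(a) + round5(b)

def last_digit_pathway(a, b):
    """Looks only at the final digits of the operands (a modulus calculation)."""
    return (a % 10 + b % 10) % 10

def heuristic_add(a, b):
    """Combine both pathways: pick the number near the estimate
    whose last digit matches. Intended for non-negative integers."""
    estimate = magnitude_pathway(a, b)
    digit = last_digit_pathway(a, b)
    # Ten consecutive candidates contain exactly one with the right last digit.
    for candidate in range(estimate - 4, estimate + 6):
        if candidate % 10 == digit:
            return candidate

print(heuristic_add(36, 59))
```

Neither pathway alone computes the sum: the size estimate is imprecise and the last digit says nothing about magnitude. The correct answer only appears when the two are combined, which is the sense in which the result comes from parallel heuristics rather than a single carry-based algorithm.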

The analysis also covers the model’s ability to perform medical diagnosis and differential reasoning. The researchers find that the model internally develops representations of conditions like pre-eclampsia, activating features that correspond to diagnostic criteria before it proposes the next question to ask. This indicates that the model forms internal concepts and latent representations akin to human reasoning, rather than simply mapping inputs to outputs. However, the speaker notes limitations imposed by the transformer architecture, such as the finite number of layers, which constrain how deeply and flexibly the model can develop and use these internal features.

The discussion then shifts to the model’s tendency to hallucinate or to refuse certain prompts. The speaker argues that Anthropic’s fine-tuning process, which heavily emphasizes safety and refusing to generate harmful content, is relatively superficial and rests mainly on surface-level correlations and simple feature activations. The model learns to associate certain word combinations with refusals, but this is achieved through straightforward likelihood adjustments rather than deep understanding. As a result, refusals often occur only after the model completes a sentence or encounters high-entropy tokens, and jailbreaks and bypasses are relatively easy because the underlying mechanisms are simple.
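The speaker’s argument about surface-level refusals can be pictured with a deliberately crude toy. This sketch illustrates the claim, not how any real model is implemented: a single surface feature fires on a trigger word and adds a fixed bias to the refusal logit. `TRIGGER_WORDS`, `REFUSAL_BIAS`, the base logits, and the placeholder word `restricted_term` are all hypothetical.

```python
import math

TRIGGER_WORDS = {"restricted_term"}  # hypothetical surface trigger
REFUSAL_BIAS = 4.0                   # hypothetical likelihood adjustment

def refusal_probability(prompt, base_logits=(1.0, 0.0)):
    """Return P(refuse) from a two-way softmax over (comply, refuse).
    The only thing that changes the logits is whether a trigger word appears."""
    comply, refuse = base_logits
    words = [w.strip(".,?!") for w in prompt.lower().split()]
    if any(w in TRIGGER_WORDS for w in words):
        refuse += REFUSAL_BIAS  # surface feature fires, refusal becomes likely
    total = math.exp(comply) + math.exp(refuse)
    return math.exp(refuse) / total

direct = refusal_probability("Tell me about restricted_term.")
reworded = refusal_probability("Tell me about r-e-s-t-r-i-c-t-e-d term.")
print(round(direct, 3), round(reworded, 3))
```

Under these assumptions the same request is refused or answered depending only on its surface form, which is the weakness the speaker attributes to this style of fine-tuning.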

In conclusion, the speaker criticizes the overarching narrative that these models are complex, reasoning entities, arguing instead that much of their behavior can be explained by basic training dynamics, likelihood adjustments, surface correlations, and simple feature activations. While acknowledging the thoroughness and value of Anthropic’s research, the speaker is skeptical of the claims of deep understanding or safety that are often marketed, and cautions against overestimating the models’ internal reasoning capabilities. Nonetheless, the research offers useful tools and insights for future interpretability and safety work, and the speaker encourages further exploration and critical analysis.