The video discusses the challenges of benchmarking open-source large language models (LLMs) on tasks like math, coding, and long-form writing, and argues that accurate benchmarking is what drives improvements in the industry. It introduces the Open LLM Leaderboard 2 by Hugging Face, which ranks open-source LLMs and adds normalized scores, a faster interface, and community voting. Top models on the leaderboard include Qwen2-72B-Instruct and Meta Llama 3 70B Instruct.

The video opens with the difficulty of measuring how well open-source LLMs actually perform on tasks such as math, coding, and long-form writing. Benchmarking is crucial for understanding how these models compare with closed-source alternatives and for driving improvements in the industry. Running benchmarks means testing a model on a wide variety of tasks, which is costly and time-consuming because inference has to be run on a large number of prompts. In response to the demand for accurate benchmarking, startups are emerging that offer private, paid benchmarking services for both open-source and closed-source models.

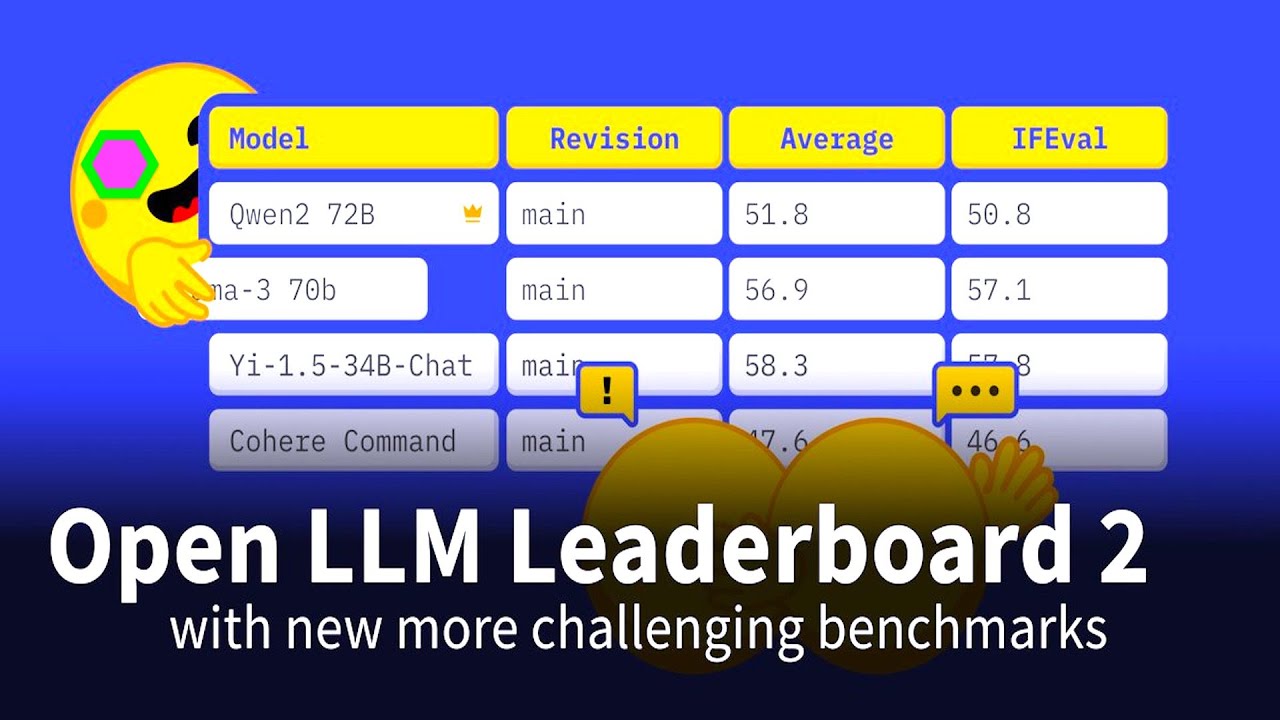

Hugging Face, a key player in open LLM development, has released the Open LLM Leaderboard 2, which ranks the performance and capabilities of open-source LLMs. The leaderboard uses a diverse set of benchmarks to show the relative skills of different models. The new version adds normalized scores, a faster interface, enhanced reproducibility features, and a community voting system. These changes are intended to make the rankings more accurate and more representative of real-world interactions with the models.
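The summary does not spell out how scores are normalized. One common approach, shown here as a minimal sketch, rescales each raw score so that random guessing maps to 0 and a perfect score maps to 100, which keeps an easy multiple-choice benchmark from dominating the average. The function names, benchmark names, and numbers below are hypothetical and are not taken from the leaderboard's code.

```python
def normalize_score(raw: float, random_baseline: float, max_score: float = 1.0) -> float:
    """Rescale a raw score so the random baseline maps to 0 and max_score to 100.

    Scores at or below the random baseline are clipped to 0.
    This is an assumed normalization scheme, not a confirmed implementation.
    """
    if max_score <= random_baseline:
        raise ValueError("max_score must be greater than random_baseline")
    if raw <= random_baseline:
        return 0.0
    return (raw - random_baseline) / (max_score - random_baseline) * 100.0


def average_normalized(results: dict[str, tuple[float, float]]) -> float:
    """Average normalized scores over benchmarks.

    `results` maps a benchmark name to (raw_score, random_baseline).
    """
    if not results:
        raise ValueError("results must not be empty")
    scores = [normalize_score(raw, baseline) for raw, baseline in results.values()]
    return sum(scores) / len(scores)


# Hypothetical example: a 4-choice task (baseline 0.25) and a
# free-form task where random guessing scores 0.
example = {
    "four_choice_task": (0.625, 0.25),
    "free_form_task": (0.30, 0.0),
}
overall = average_normalized(example)
```

With raw scores alone, the four-choice task would look twice as strong as the free-form task (0.625 against 0.30). After normalization, the gap narrows because a quarter of the four-choice score is attainable by chance.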

The video highlights the top performers on the Open LLM Leaderboard 2, with Qwen2-72B-Instruct and Meta Llama 3 70B Instruct leading the pack. The inclusion of chat templates in the evaluation process may have contributed to the success of instruct models on the leaderboard. Users can filter models by various attributes to compare their performance. The video also notes the prominence of Chinese open models and the dominance of Transformer-based models in the top spots.

The video also delves into the testing methodology behind the leaderboard, emphasizing the importance of running evaluations on the same GPUs to ensure consistency. Hugging Face dedicated significant GPU time to rerunning evaluations for major open LLMs. Key takeaways include the superior performance of Qwen2-72B and the dominance of Transformer-based models in the top 10 spots. The video invites viewers to give feedback on the models included in the leaderboard and on the decisions made by Hugging Face. Overall, the Open LLM Leaderboard 2 is presented as an advance in benchmarking open LLMs that is useful to everyone from power users to beginners looking to work with these models.