The video highlights OpenAI’s concerns about the potential for misinformation in the upcoming presidential election, referencing a report that analyzes the misuse of its generative AI technology in deceptive social media campaigns. It emphasizes the importance of trust and regulatory measures in combating misinformation, contrasting the business models of generative AI companies with those of traditional social media platforms.

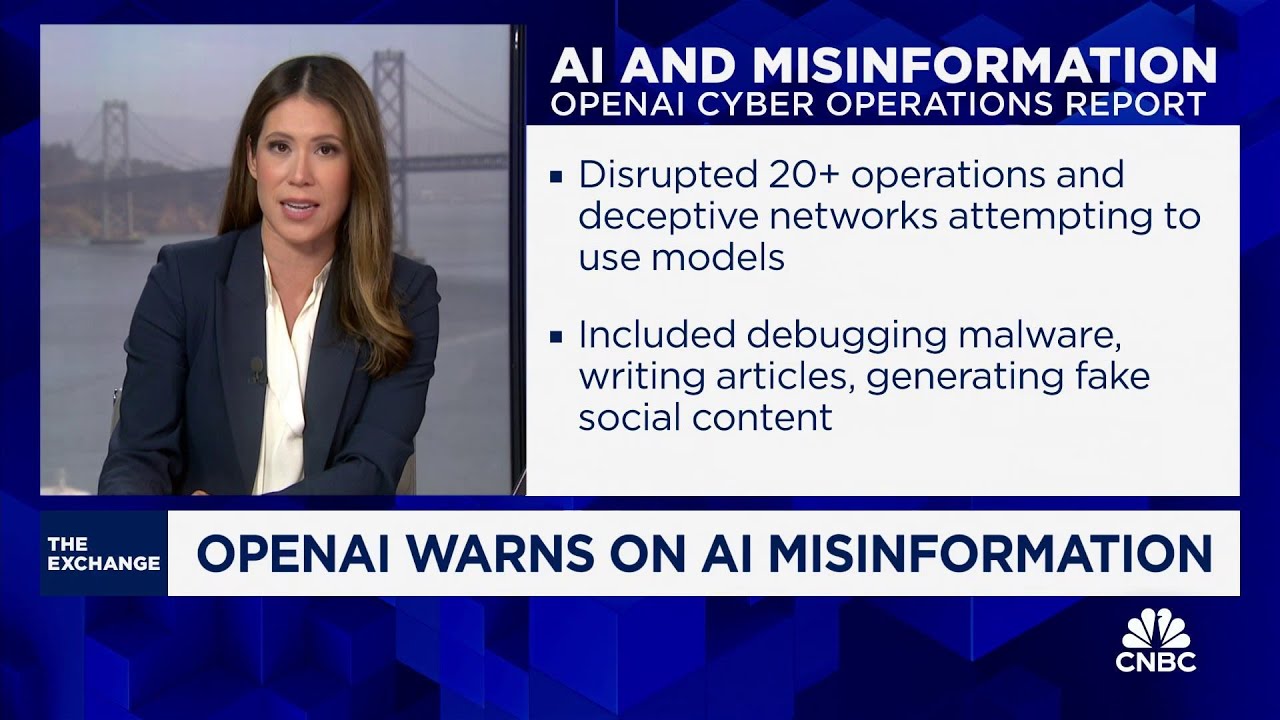

The video discusses the rising concern of misinformation in the context of the upcoming presidential election, particularly with the advent of generative AI technologies. Deirdre Bosa highlights a report released by OpenAI that examines how its models have been used in deceptive campaigns on social media. The 54-page report analyzes more than 20 covert operations that used OpenAI’s technology to spread misinformation, signaling a proactive effort by the company to address potential issues before they escalate.

Bosa draws parallels between the current situation and the challenges faced by social media platforms like Facebook in the past. She references Mark Zuckerberg’s early vision for Facebook as a tool for social connection, which later became marred by misinformation and influence operations. This historical context raises questions about whether generative AI will follow a similar trajectory, with OpenAI attempting to mitigate risks associated with its technology.

The report from OpenAI is part of a broader effort to identify trends in misinformation and its implications for society. Bosa notes that the stakes are higher now, as generative AI can create highly convincing content that may mislead the public. The video also discusses the financial incentives behind misinformation campaigns, contrasting the engagement-driven business model of social media with the subscription and licensing model of generative AI companies like OpenAI.

Trust is emphasized as a crucial element for the success of generative AI technologies. Unlike social media platforms that thrive on engagement, OpenAI and similar companies depend on providing accurate information to maintain user trust. This difference in business models may influence how these companies approach the challenge of misinformation and their responsibility in curbing it.

Finally, the video touches on the regulatory landscape surrounding AI and misinformation. While there has been pushback against broader regulations, progress has been made at the state level, particularly in California, where several AI-related bills have been signed into law. These include measures to combat deepfakes, indicating a growing recognition of the need for oversight in the rapidly evolving field of AI. Overall, the video underscores the importance of addressing misinformation in the age of generative AI, especially as the election approaches.