The video discusses OpenAI’s o3 model and the ongoing debate over whether it counts as Artificial General Intelligence (AGI). It highlights differing opinions from prominent figures in the AI community and the concept of the “jagged frontier,” which describes AI’s uneven performance across tasks. The host emphasizes the need for clearer definitions of AGI and better benchmarks to assess AI’s capabilities, while acknowledging significant advances in AI technology despite its limitations.

The video discusses the recent release of OpenAI’s o3 model and the ongoing debate about whether it qualifies as Artificial General Intelligence (AGI). The host references a previous comment suggesting that the very act of debating AGI indicates its presence. The video highlights opinions from notable figures in the AI community, including Sam Altman, Elon Musk, and François Chollet, on the capabilities of o3 and what they imply for AGI. The host emphasizes that there is no consensus on what constitutes AGI and that a clearer definition is needed for the discussion to be meaningful.

The host shares insights from a poll they conducted, asking whether the o3 model is smarter than the average human, particularly at everyday office tasks. The responses reveal a divide in opinions, with some asserting that the model does not exhibit true AGI characteristics, such as the ability to learn from experience. The video also touches on the concept of the “jagged frontier,” introduced by Ethan Mollick, which describes how AI can excel at certain tasks while struggling with others, creating an uneven, non-linear picture of intelligence.

The jagged frontier concept is further explained, contrasting the smooth progression of human abilities across various tasks with the uneven performance of AI models. The host argues that while AI may outperform humans in complex tasks, it can still fail at seemingly simple ones, leading to debates about its overall intelligence. This uneven performance complicates the assessment of AI’s capabilities and raises questions about how we define intelligence and AGI.
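One way to make the jagged frontier concrete is to compare per-task score profiles. The sketch below is illustrative only: the task names and scores are hypothetical values chosen for the example, not measurements from the video or from any benchmark, and using the standard deviation as a "jaggedness" measure is an assumption of this sketch rather than an established metric.

```python
from statistics import mean, pstdev

# Hypothetical scores in [0, 1]; made up for illustration, not measured data.
human_scores = {
    "arithmetic": 0.70,
    "summarizing": 0.72,
    "coding": 0.65,
    "counting letters": 0.75,
    "visual puzzle": 0.74,
}
model_scores = {
    "arithmetic": 0.95,
    "summarizing": 0.90,
    "coding": 0.92,
    "counting letters": 0.45,
    "visual puzzle": 0.45,
}


def jaggedness(scores):
    """Spread of per-task scores: a crude, assumed proxy for an uneven profile."""
    return pstdev(list(scores.values()))


def tasks_below(model, human):
    """Tasks where the model scores below the human baseline."""
    return sorted(task for task in model if model[task] < human[task])


if __name__ == "__main__":
    print("human mean:", round(mean(human_scores.values()), 3))
    print("model mean:", round(mean(model_scores.values()), 3))
    print("human jaggedness:", round(jaggedness(human_scores), 3))
    print("model jaggedness:", round(jaggedness(model_scores), 3))
    print("model below human on:", tasks_below(model_scores, human_scores))
```

With these made-up numbers the model has the higher average yet still falls below the human baseline on two tasks, which is the pattern that makes a single "smarter than a human" verdict hard to give.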

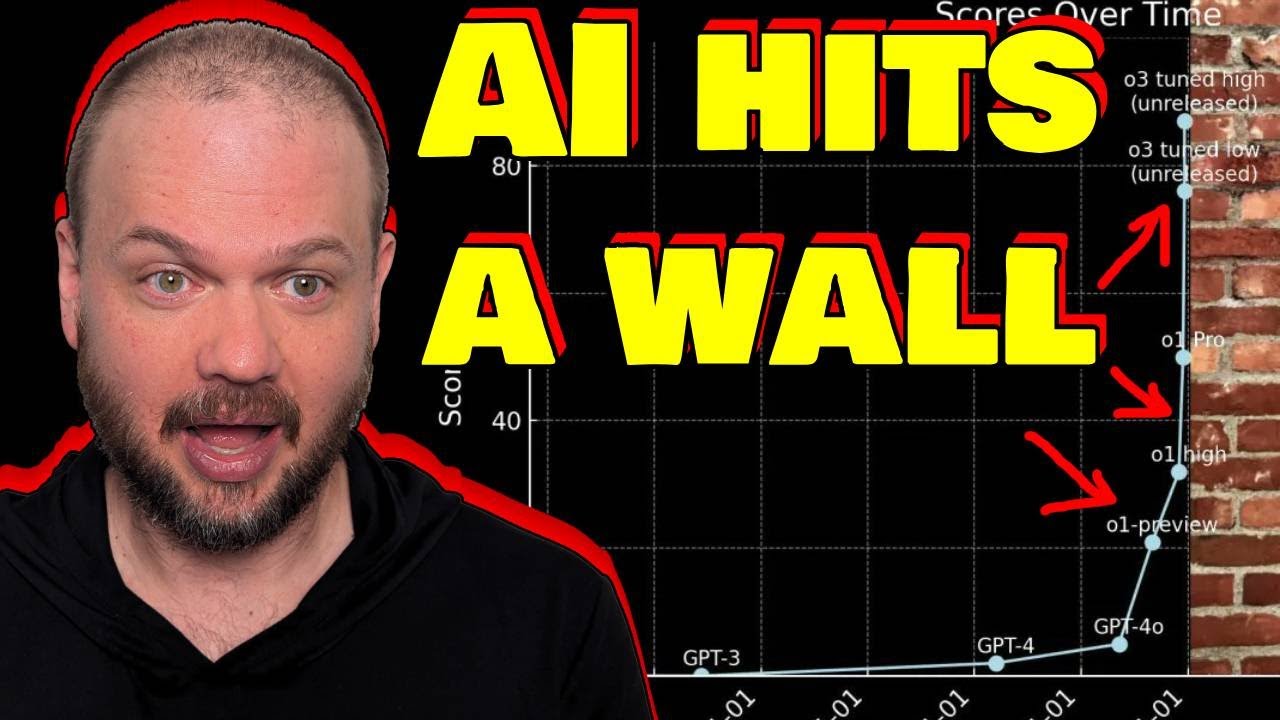

The video also discusses advances in AI scaling, particularly the new paradigm of “test-time compute,” in which a model spends more computation thinking through a problem before answering. This approach has led to significant improvements in the performance of models like o3. The host notes that, despite claims of a slowdown in AI progress, recent developments suggest that progress remains rapid, with o3 posting impressive results on various benchmarks.
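As a rough intuition for why extra computation at answer time can help, the toy sketch below samples several answers from a noisy solver and takes a majority vote. This is an assumption-laden illustration of one simple test-time strategy, not a description of how o3 works: `noisy_solver`, its 60% per-sample accuracy, and the answer values are all hypothetical.

```python
import random
from collections import Counter

CORRECT_ANSWER = 42  # hypothetical ground truth for the toy problem


def noisy_solver(rng, p_correct=0.6):
    """Hypothetical stand-in for one sampled answer: right with probability p_correct."""
    if rng.random() < p_correct:
        return CORRECT_ANSWER
    return rng.choice([41, 43, 44])  # one of several wrong answers


def majority_vote(rng, n_samples):
    """Spend more compute: draw n_samples answers and return the most common one."""
    answers = [noisy_solver(rng) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


def accuracy(n_samples, trials=2000, seed=0):
    """Fraction of trials in which the voted answer is correct."""
    rng = random.Random(seed)
    hits = sum(majority_vote(rng, n_samples) == CORRECT_ANSWER for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for n in (1, 5, 15):
        print(f"samples per question: {n:2d}  accuracy: {accuracy(n):.3f}")
```

In this toy setting, accuracy rises as more samples are drawn per question, because the wrong answers are scattered while the right one repeats.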

In conclusion, the host encourages viewers to consider the implications of AI’s jagged performance and the ongoing debates surrounding AGI. They emphasize the need for better benchmarks to assess AI’s progress and the importance of recognizing the significant advancements being made, even if certain limitations persist. The video invites viewers to reflect on whether an AI that excels in many areas but struggles with basic tasks can still be considered intelligent or even AGI, fostering a deeper conversation about the future of AI and its capabilities.