The video critically reviews Opus 4.6 and Codex 5.3, highlighting their persistent shortcomings such as basic coding mistakes, narrow problem-solving, and the need for significant human oversight. Despite minor improvements, the speaker argues that these AI models are still unreliable for fully automating programming tasks, reaffirming the ongoing importance of skilled human developers.

The video is a critical review of the latest AI coding models, Opus 4.6 and Codex 5.3. The speaker expresses disappointment with Opus 4.6, describing it as an underwhelming update that feels almost identical to its predecessor, Opus 4.5, aside from some changes in instructions. The main complaint is that the model still makes basic mistakes, consumes a lot of tokens, and tends to approach problems in a narrow, local way. For example, it avoids making new tool calls, opening other files, or even scrolling to the top of a file to add necessary imports, which leads to sloppy and incomplete code.

The speaker references broader community sentiment, noting that others have also found Opus 4.6 to be only marginally improved: faster and a bit smarter, but still prone to poor decisions. Codex 5.3 is acknowledged as a slightly better engineer, but both models are described as “clankers” that make mistakes daily. This persistent unreliability is cited as the reason these models cannot yet be trusted to fully automate programming jobs: they still require significant human oversight to catch and correct errors.

A significant portion of the video is devoted to discussing a post by Greg Brockman, president and co-founder of OpenAI. Brockman acknowledges that managing AI-generated code at scale is an emerging problem that will require new processes and conventions to maintain code quality. The speaker finds this admission both honest and alarming, interpreting it as an acknowledgment that AI-generated code is inherently “sloppy” and that human intervention will remain necessary for the foreseeable future. Brockman’s advice to ensure a human is accountable for any code merged into production is seen as stating the obvious, but also as an implicit admission of the models’ limitations.

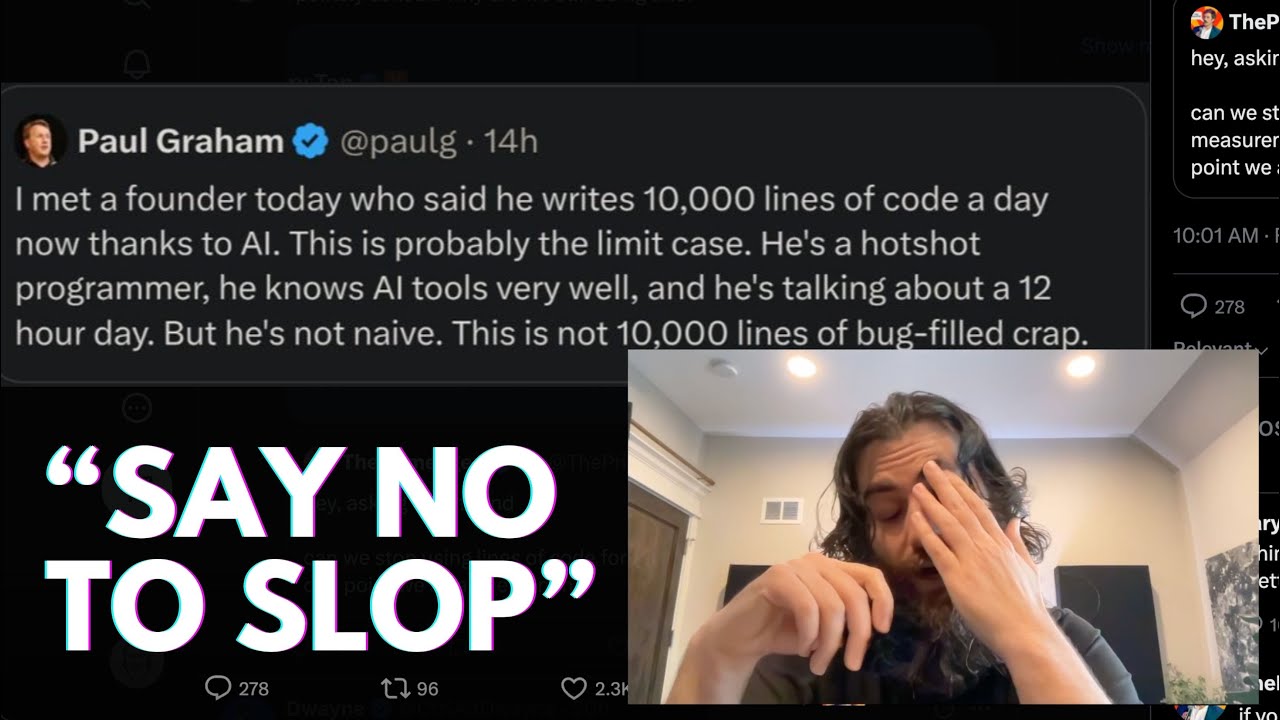

The video also pokes fun at prominent Silicon Valley figures like Garry Tan and Paul Graham, who have made bold claims about the productivity gains from AI coding tools. The speaker is skeptical of claims such as writing 10,000 lines of code per day with AI assistance, arguing that such output is likely to be low-quality “bug-filled crap.” The speaker also references reports of engineers experiencing burnout and health issues from trying to keep up with the demands of managing AI-generated code, describing the current environment as a “mass psychosis event.”

In conclusion, the speaker reassures viewers that skilled human programmers remain indispensable, as current AI models like Opus 4.6 and Codex 5.3 are far from replacing them. The video ends on a somewhat optimistic note for developers, suggesting that the value of quality human-written code is secure for the foreseeable future, given the persistent shortcomings and “slop” produced by even the latest AI coding models.