The paper “ORPO: Monolithic Preference Optimization without Reference Model” by KAIST AI researchers introduces a method called ORPO that combines supervised fine-tuning and alignment into a single step to improve language model alignment with human preferences. By incorporating an odds ratio loss in the joint loss function, ORPO aims to make desired outputs more likely and undesired outputs less likely, resulting in more efficient and effective optimization without the need for intermediate models or complex training procedures.

The paper addresses alignment, which in this context means making the outputs of language models, including instruction-tuned ones, more consistent with human expectations and preferences. Traditional supervised fine-tuning trains a model on labeled pairs of instructions and appropriate answers. This teaches the model what a good response looks like, but it does not explicitly discourage bad ones. An alignment procedure adds that missing signal: given a preferred and a dispreferred response to the same instruction, it makes the desired output more likely and the undesired output less likely.

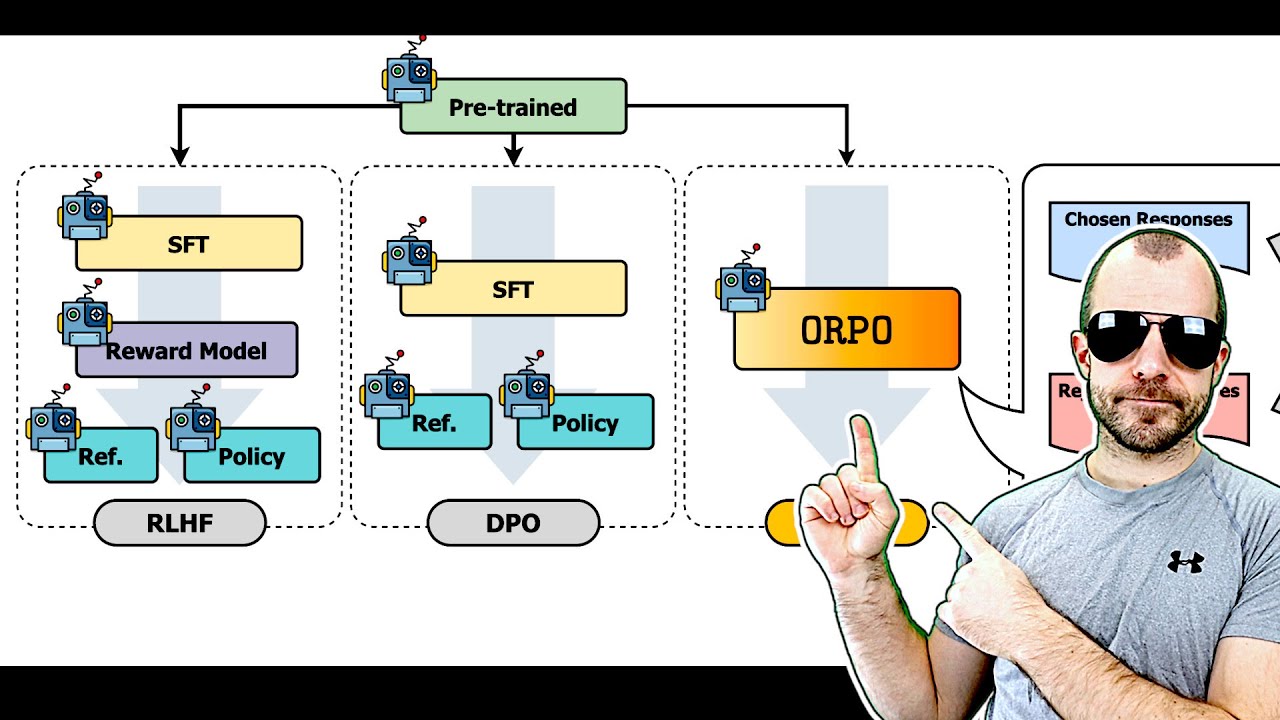

The researchers propose a method called ORPO, which integrates supervised fine-tuning and alignment into a single training step instead of running them as sequential stages. ORPO adds an odds ratio loss to the supervised fine-tuning loss. The odds ratio term raises the likelihood of the winning (preferred) response relative to the losing (dispreferred) response. Because both terms are computed from the model being trained, ORPO needs no reference model, intermediate models, or complex training procedures, which makes optimization more efficient.
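The joint loss described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `log_odds` and `orpo_loss`, the weight `lam`, and the use of a length-normalized (per-token average) log-probability for each response are assumptions made for the example, and the odds ratio term is written as a negative log-sigmoid of the log odds ratio.

```python
import math


def log_odds(avg_logp: float) -> float:
    """Return log(p / (1 - p)) for p = exp(avg_logp).

    avg_logp is assumed to be the average per-token log-probability of a
    response, so it must be strictly negative (p < 1).
    """
    return avg_logp - math.log1p(-math.exp(avg_logp))


def orpo_loss(avg_logp_chosen: float, avg_logp_rejected: float, lam: float = 0.1) -> float:
    """Sketch of a joint loss: SFT term plus a weighted odds ratio term.

    lam is a hypothetical weighting hyperparameter chosen for illustration.
    """
    # Supervised fine-tuning term: negative log-likelihood of the chosen response.
    sft_term = -avg_logp_chosen

    # Log odds ratio between the chosen and rejected responses.
    log_odds_ratio = log_odds(avg_logp_chosen) - log_odds(avg_logp_rejected)

    # -log(sigmoid(z)) == log(1 + exp(-z)); small when the chosen response
    # has much higher odds than the rejected one.
    odds_ratio_term = math.log1p(math.exp(-log_odds_ratio))

    return sft_term + lam * odds_ratio_term


# The loss is lower when the model already prefers the chosen response.
good = orpo_loss(math.log(0.6), math.log(0.2))
bad = orpo_loss(math.log(0.2), math.log(0.6))
print(f"prefers chosen: {good:.4f}, prefers rejected: {bad:.4f}")
```

Both terms depend only on the probabilities assigned by the model being trained, which is why no second, frozen reference model appears anywhere in the sketch.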

Through a detailed analysis of the proposed method, the researchers highlight the role of the odds ratio loss in steering training towards preferred outputs. The loss compares the odds of the winning response with the odds of the losing response, so the model is pushed to favor desired outputs while suppressing undesired ones. The authors argue that this is a more balanced way to optimize preferences than a probability ratio, which they find tends to produce spiky and less stable results.
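To make the two quantities concrete, the snippet below computes a log probability ratio and a log odds ratio for the same pair of response probabilities. It is only an illustrative sketch: the function names and the example probabilities are made up, and it shows how the two ratios are defined rather than reproducing the paper's stability analysis.

```python
import math


def log_prob_ratio(p_w: float, p_l: float) -> float:
    """Log of the probability ratio p_w / p_l."""
    return math.log(p_w) - math.log(p_l)


def log_odds_ratio(p_w: float, p_l: float) -> float:
    """Log of the odds ratio, where odds(p) = p / (1 - p)."""
    odds_w = p_w / (1.0 - p_w)
    odds_l = p_l / (1.0 - p_l)
    return math.log(odds_w) - math.log(odds_l)


# Hypothetical (winning, losing) response probabilities.
for p_w, p_l in [(0.6, 0.2), (0.3, 0.1), (0.05, 0.01)]:
    lpr = log_prob_ratio(p_w, p_l)
    lor = log_odds_ratio(p_w, p_l)
    print(f"p_w={p_w}, p_l={p_l}: log prob ratio={lpr:.3f}, log odds ratio={lor:.3f}")
```

The two are related by a simple identity: the log odds ratio equals the log probability ratio plus `log((1 - p_l) / (1 - p_w))`, so they nearly coincide when both probabilities are small and diverge as the winning probability approaches 1.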

Experimental results presented in the paper show that ORPO improves model performance and alignment compared to conventional approaches. The gains are not substantial, but they are consistent across the benchmarks reported, which points to a real benefit. By streamlining the optimization process and integrating alignment directly into training, ORPO offers a promising way to align model outputs with user preferences. Overall, the paper presents a novel approach to language model alignment: monolithic preference optimization that requires no reference model.