The video explains a paper showing how sensitive fine-tuning data can be extracted from models such as BERT and Vision Transformers. The attacker plants a “backdoor” in the pretrained weights before the victim fine-tunes the model, and the attack works even with only black-box access to the result. Because the tampered weights capture specific training examples during fine-tuning, the technique raises significant privacy concerns and highlights the need for more awareness of, and research into, the security of machine learning models.

The video discusses the paper “Privacy Backdoors: Stealing Data with Corrupted Pretrained Models” by Shanglun Feng and Florian Tramèr from ETH Zurich. The authors introduce a method for extracting fine-tuning data from models that were never intended to reveal it. They demonstrate the technique on popular models such as BERT and Vision Transformers, showing a practical implementation as well as the theoretical idea. Although various limitations mean the method may not be fully ready for real-world use, it raises significant concerns about privacy and data security in machine learning models.

In the setup, a user fine-tunes a pretrained model, such as BERT, on sensitive data, for example to build a classifier that detects personally identifiable information (PII). The user might then upload the fine-tuned model to a platform like Hugging Face or deploy it behind an API. The paper shows that even if the model is kept private behind an API, an attacker can extract the fine-tuning data through a black-box attack. The attack does not exploit a security vulnerability in the usual sense; it relies on the victim starting from pretrained weights that the attacker has tampered with.

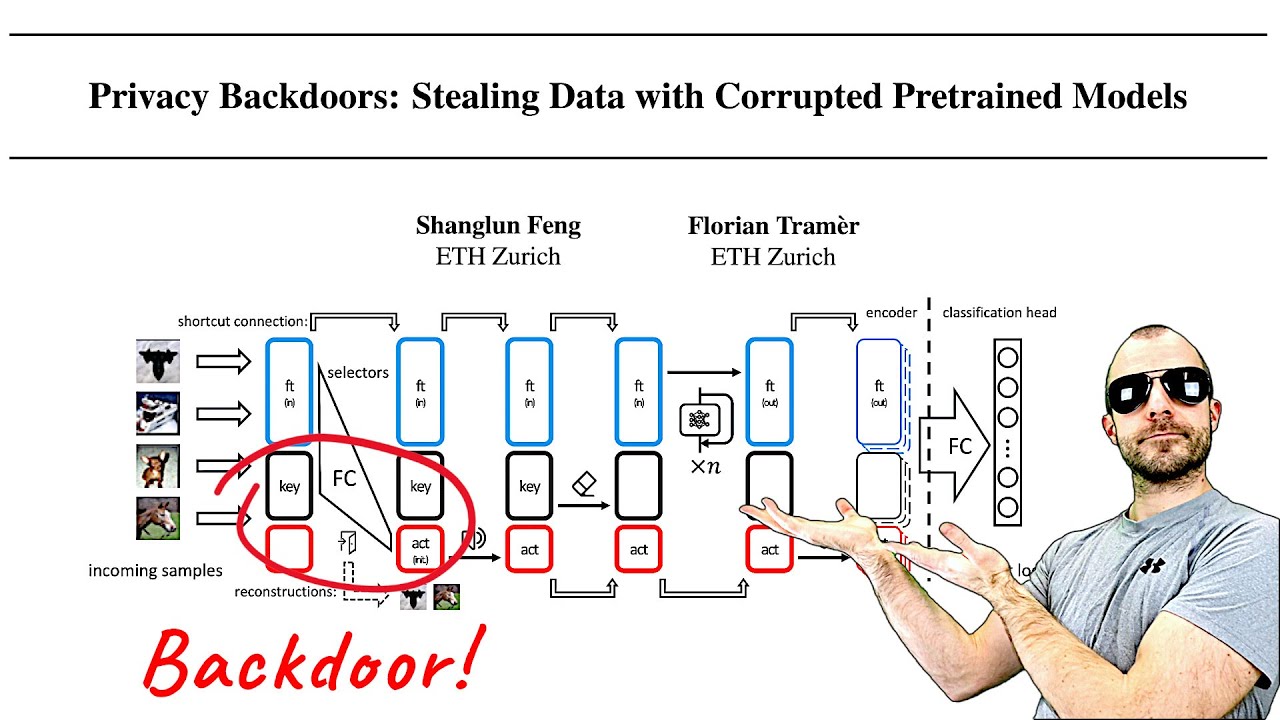

The attacker modifies a pretrained model’s weights to create a “backdoor” that captures specific training examples. The victim then adds a randomly initialized layer to the model and fine-tunes it on their own data. As training proceeds, the gradient updates imprint individual data points into the backdoor’s weights. The attacker can later reconstruct the exact data points used for training by analyzing the final weights of the fine-tuned model, which demonstrates a risk of data leakage even when models are thought to be secure.
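The imprinting idea can be illustrated with a single ReLU unit. The snippet below is a minimal sketch, not the paper's implementation: the function name `sgd_step_relu_unit`, the fixed `upstream_grad` value (standing in for the gradient arriving from the rest of the network), and the toy dimensions are all assumptions made for illustration. It shows that after one plain SGD step, the weight change is a scaled copy of the input and the bias change reveals the scale, so dividing one by the other recovers the input.

```python
import numpy as np

def sgd_step_relu_unit(w, b, x, upstream_grad, lr):
    """One SGD step for h = relu(w @ x + b), given dL/dh = upstream_grad.

    Hypothetical helper for illustration only.
    """
    pre_activation = w @ x + b
    active = float(pre_activation > 0)        # ReLU derivative: 1 if active, else 0
    new_w = w - lr * upstream_grad * active * x   # dL/dw = dL/dh * relu' * x
    new_b = b - lr * upstream_grad * active       # dL/db = dL/dh * relu'
    return new_w, new_b

rng = np.random.default_rng(0)
w0 = rng.uniform(0.1, 1.0, size=6)       # attacker-chosen initial weights (assumed)
b0 = 0.5                                 # attacker-chosen initial bias (assumed)
secret_x = rng.uniform(0.0, 1.0, size=6) # one private fine-tuning example

# The victim performs one training step on the private example.
w1, b1 = sgd_step_relu_unit(w0, b0, secret_x, upstream_grad=2.0, lr=0.1)

# The attacker knows (w0, b0) and observes (w1, b1):
# delta_w = -lr * g * x and delta_b = -lr * g, so x = delta_w / delta_b.
recovered_x = (w1 - w0) / (b1 - b0)
print(np.allclose(recovered_x, secret_x))
```

The recovery only works cleanly here because exactly one example updated the unit; if several examples contribute, the weight change becomes a mixture of them.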

The paper further explains how the attacker makes the model keep an imprinted data point while preventing later updates to the same parameters. Once a backdoor has captured an example, its output is driven negative and stays negative, so the activation passes no gradient and the imprinted weights are no longer updated. This “latch” mechanism is critical: without it, several data points would be written into the same backdoor and blur together, making reconstruction unreliable.
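The latch behaviour can be sketched with the same kind of toy ReLU unit. This is an illustrative simulation under stated assumptions, not the authors' construction: `train_backdoor_unit` is a hypothetical helper, inputs are assumed to be nonnegative, and a large constant `upstream_grad` stands in for whatever the attacker does to make the first update large. With a large first update the bias drops so far that the unit never activates again; with a small one, every example keeps updating the unit.

```python
import numpy as np

def train_backdoor_unit(w, b, examples, upstream_grad, lr):
    """Run SGD on h = relu(w @ x + b) over examples, one at a time.

    Returns the final (w, b) and the indices of examples that changed the unit.
    Hypothetical helper; dL/dh is assumed constant for simplicity.
    """
    updated_on = []
    for i, x in enumerate(examples):
        if w @ x + b > 0:                     # ReLU active: gradient flows
            w = w - lr * upstream_grad * x
            b = b - lr * upstream_grad
            updated_on.append(i)
        # Otherwise the ReLU outputs 0, its gradient is 0, and nothing changes.
    return w, b, updated_on

rng = np.random.default_rng(1)
dim = 8
w0 = rng.uniform(0.1, 1.0, size=dim)          # positive, so the unit fires at first
b0 = 0.5
examples = rng.uniform(0.0, 1.0, size=(5, dim))  # nonnegative private examples

# Large first update: the unit latches shut after the first example.
w_latched, b_latched, latched_hits = train_backdoor_unit(
    w0, b0, examples, upstream_grad=100.0, lr=0.1)
recovered = (w_latched - w0) / (b_latched - b0)
print(latched_hits, np.allclose(recovered, examples[0]))

# Small updates: no latch, so all examples are mixed into the same weights.
w_mixed, b_mixed, mixed_hits = train_backdoor_unit(
    w0, b0, examples, upstream_grad=0.1, lr=0.1)
mixed = (w_mixed - w0) / (b_mixed - b0)
print(mixed_hits, np.allclose(mixed, examples[0]))
```

In the latched run only the first example is imprinted and it is recovered exactly; in the unlatched run the recovered vector is the average of all five examples, which is why the latch matters for reconstruction.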

Finally, the authors extend the approach to transformers, which introduce additional complexities. They describe how to coordinate multiple backdoors within the same model so that entire sequences are captured rather than isolated tokens. Despite the challenges posed by normalization layers and varying input types, the approach shows that sensitive training data can be extracted effectively, which makes it a critical privacy concern for machine learning applications. The video concludes with a call for further research into the security of machine learning models and for greater awareness of these emerging threats.