The video discusses the research paper "Scalable MatMul-free Language Modeling," which proposes replacing the matrix multiplications (MatMuls) in large language models with cheaper operations. The paper uses ternary weights to simplify these operations and reports performance competitive with state-of-the-art Transformer baselines while eliminating dense matrix multiplications, pointing to potential gains in hardware efficiency and scalability.

The paper is by researchers from UC Santa Cruz, UC Davis, and LuxiTech, among others. It proposes replacing the matrix multiplication operations in large language models with more efficient computations. Specifically, the feed-forward layers of the Transformer are replaced with ternary accumulation, and the self-attention mechanism is replaced with a parallelizable recurrent network that also uses ternary weights. The goal is a large language model that is free of matrix multiplications and therefore more hardware-efficient. The design draws on earlier work such as BitNet and RWKV, and the resulting models show promise in both efficiency and performance.

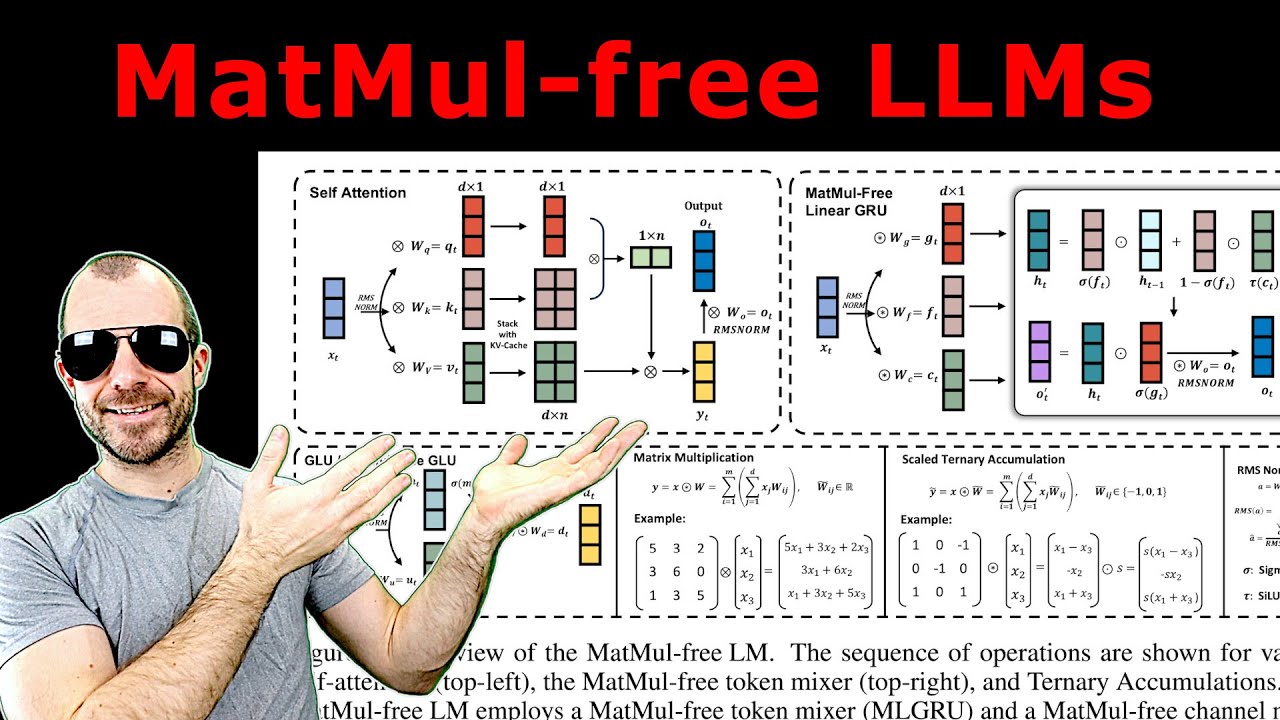

Matrix multiplication is a core operation in neural networks such as Transformers, and the paper explores ternary weights as a way to avoid it. Ternary weights restrict each value to -1, 0, or +1, so multiplying an input by a weight reduces to adding the input, subtracting it, or skipping it, rather than performing a full multiplication. This aims to cut down on expensive floating-point multiplications and could improve hardware efficiency. However, it is unclear how well ternary operations map onto real hardware and whether the theoretical gains will carry over in practice.
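To make the "add, subtract, or skip" idea concrete, here is a minimal pure-Python sketch. It assumes an absmean-style quantizer (divide the weights by their mean absolute value, round, and clip to [-1, 1]), a recipe popularized by BitNet b1.58; the paper's exact quantization and kernels may differ, and the helper names `absmean_ternarize` and `ternary_matvec` are hypothetical.

```python
Matrix = list[list[float]]
TernaryMatrix = list[list[int]]


def absmean_ternarize(w: Matrix, eps: float = 1e-8) -> tuple[TernaryMatrix, float]:
    """Map real weights to {-1, 0, +1} plus one per-tensor scale (assumed absmean recipe)."""
    flat = [abs(x) for row in w for x in row]
    gamma = sum(flat) / len(flat) + eps  # mean absolute weight, used as the scale
    # Python's round() rounds exact halves to even; acceptable for a sketch.
    q = [[max(-1, min(1, round(x / gamma))) for x in row] for row in w]
    return q, gamma


def ternary_matvec(q: TernaryMatrix, x: list[float], scale: float = 1.0) -> list[float]:
    """Compute scale * (q @ x) without multiplying any input by a weight."""
    out = []
    for row in q:
        acc = 0.0
        for qi, xi in zip(row, x):
            if qi == 1:
                acc += xi  # weight +1: add the input
            elif qi == -1:
                acc -= xi  # weight -1: subtract the input
            # weight 0: skip, no work at all
        out.append(acc * scale)  # a single rescale per output element
    return out


# Toy example (made-up numbers): 2x3 weight matrix, 3-dimensional input.
w = [[0.9, -0.05, -1.2], [0.1, 0.6, 0.2]]
x = [1.0, 2.0, 3.0]
q, gamma = absmean_ternarize(w)
print(q)                         # [[1, 0, -1], [0, 1, 0]]
print(ternary_matvec(q, x))      # [-2.0, 2.0]
y = ternary_matvec(q, x, gamma)  # output of the quantized layer: ternary sum times the scale
```

Only the final rescale by `gamma` multiplies anything, and it happens once per output element rather than once per weight; this is the sense in which a ternary layer turns matrix multiplication into accumulation.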

The paper introduces a modified language-modeling architecture that uses ternary operations in place of matrix multiplications in the feed-forward layers. It also linearizes the recurrent token mixer, so the hidden state is updated only through element-wise operations; this lets the recurrence be computed in parallel across the sequence and scale well, and its projections likewise use ternary weights. The paper uses the Gated Linear Unit (GLU) and other techniques borrowed from modern recurrent networks to make the whole model MatMul-free. The authors stress that ternary weights call for specialized training strategies, in particular the straight-through estimator: because the rounding step has zero gradient almost everywhere, the estimator passes gradients through it as if it were the identity.
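The parallelization argument can be illustrated with a simplified GRU-style linear recurrence, h_t = f_t ⊙ h_{t-1} + (1 - f_t) ⊙ c_t, where the forget gate f_t and candidate c_t depend only on the current input. This exact form and the function names below are assumptions for illustration; the ternary input projections that would produce the gates, and any output gating, are omitted. Because each step is an affine map of h_{t-1}, the steps compose associatively, so a parallel prefix scan yields the same hidden states as the sequential loop.

```python
import math

Vec = list[float]


def sigmoid(v: Vec) -> Vec:
    return [1.0 / (1.0 + math.exp(-x)) for x in v]


def silu(v: Vec) -> Vec:
    # SiLU(x) = x * sigmoid(x)
    return [x / (1.0 + math.exp(-x)) for x in v]


def recurrent_scan(forget: list[Vec], cand: list[Vec]) -> list[Vec]:
    """Sequential reference: h_t = f_t * h_{t-1} + (1 - f_t) * c_t, element-wise, h_0 = 0."""
    h = [0.0] * len(forget[0])
    states = []
    for f, c in zip(forget, cand):
        h = [fi * hi + (1.0 - fi) * ci for fi, hi, ci in zip(f, h, c)]
        states.append(h)
    return states


def combine(left: tuple[Vec, Vec], right: tuple[Vec, Vec]) -> tuple[Vec, Vec]:
    """Compose affine maps h -> a*h + b: apply `left` first, then `right`.

    right(left(h)) = a2*(a1*h + b1) + b2 = (a1*a2)*h + (a2*b1 + b2).
    """
    a1, b1 = left
    a2, b2 = right
    return ([x * y for x, y in zip(a1, a2)],
            [y * b + c for y, b, c in zip(a2, b1, b2)])


def parallel_scan(forget: list[Vec], cand: list[Vec]) -> list[Vec]:
    """Hillis-Steele inclusive scan over the per-step affine maps."""
    elems = [(list(f), [(1.0 - fi) * ci for fi, ci in zip(f, c)])
             for f, c in zip(forget, cand)]
    step = 1
    while step < len(elems):
        # All positions i >= step are updated independently within this round.
        elems = [elems[i] if i < step else combine(elems[i - step], elems[i])
                 for i in range(len(elems))]
        step *= 2
    # Starting from h_0 = 0, each accumulated map a*0 + b is just its offset b.
    return [b for _, b in elems]


# Toy pre-activations for 5 time steps and 2 channels (made-up numbers).
pre_f = [[0.5, -1.0], [2.0, 0.0], [-0.3, 1.5], [0.1, 0.1], [1.0, -2.0]]
pre_c = [[1.0, 0.2], [-0.5, 0.7], [0.3, -1.2], [2.0, 0.0], [-1.0, 0.4]]
forget = [sigmoid(v) for v in pre_f]
cand = [silu(v) for v in pre_c]

seq = recurrent_scan(forget, cand)
par = parallel_scan(forget, cand)
max_err = max(abs(a - b) for hs, hp in zip(seq, par) for a, b in zip(hs, hp))
print(f"max difference between sequential and parallel: {max_err:.2e}")
```

Each round of the scan touches every position independently, so the whole sequence is covered in a logarithmic number of rounds instead of one step per token; the sequential loop is kept as a reference to check against. The two results agree up to floating-point rounding.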

Experimental results show that, at the model scales tested, the MatMul-free models perform on par with state-of-the-art Transformers while eliminating matrix multiplications. Their scaling behavior and hardware efficiency look promising, with potential benefits for edge devices and lower resource consumption. However, questions remain about how MatMul-free models will compare with traditional Transformers in the long run, both in performance and in capabilities. The paper also builds a custom FPGA accelerator that exploits ternary operations, suggesting room for significant hardware advances in the future.

In conclusion, the paper presents an innovative approach to developing MatMul-free language models that show promise in terms of efficiency and performance. While the models achieve competitive results and offer hardware advantages, there are lingering questions about the trade-offs made in simplifying operations and the long-term viability of MatMul-free architectures. The research opens up avenues for exploring alternative computational methods in neural networks and underscores the importance of hardware optimization for maximizing the benefits of such innovations.