The text discusses several advancements in artificial intelligence, including AI-enabled robots with visual reasoning abilities, training robots in simulation, and the capabilities of AI agents such as DrEureka and Devin. It also covers the departure of two senior OpenAI executives, the legal implications of training AI systems on copyrighted material, and Frame, a pair of AI glasses integrated with an AI assistant named Noa that provides real-time information and task assistance.

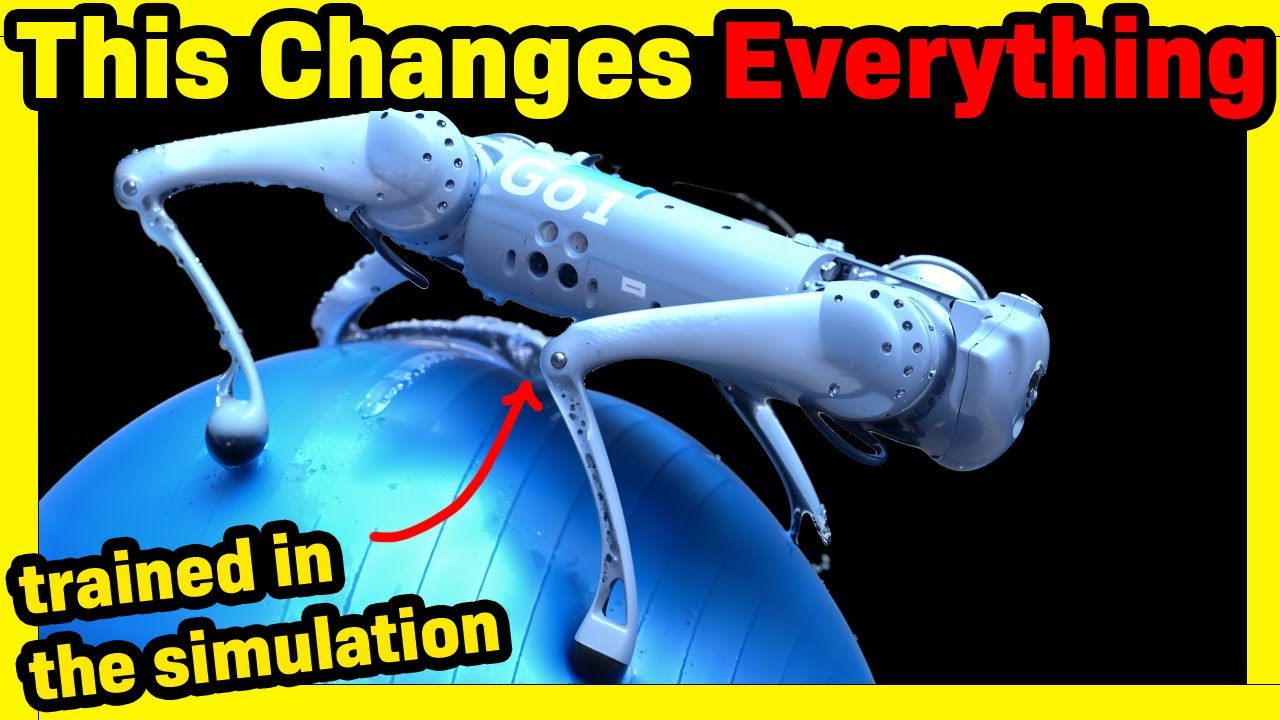

The video covers a range of recent developments in artificial intelligence. Figure AI, a company building AI-enabled robots, showcased its latest technology during a segment on 60 Minutes. The robot used visual reasoning to distinguish between objects; for example, when asked for something healthy, it identified an orange. The video also highlighted advances in training robots in simulation, such as teaching a robot dog to balance on a yoga ball. DrEureka, an AI agent, automates the process of training robot skills in simulation and transferring them to the real world, with promising results on tasks such as balancing on unstable surfaces.

Moreover, the departure of two senior OpenAI executives, Diane Yoon and Chris Clark, raised questions about the company's leadership. Both had long tenures spanning a period of significant growth for the company, but the reasons for their departures have not been disclosed. The video then turns to the legal implications of AI systems trained on copyrighted material, discussing a proposed framework that would compensate copyright owners in proportion to their contributions to AI-generated content. It also mentions a paper on training language models for multi-token prediction, which reports potential efficiency gains and improved algorithmic reasoning capabilities.
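Multi-token prediction, as the term is generally used, trains a model to predict several upcoming tokens at each position rather than only the next one. The snippet below is a minimal sketch of that idea, not the paper's method: the helper names `multi_token_targets` and `multi_token_loss` are hypothetical, and the loss assumes, purely for illustration, one prediction head per future offset whose negative log-likelihoods are averaged.

```python
import math
from typing import Dict, List, Sequence


def multi_token_targets(tokens: Sequence[int], n: int) -> List[List[int]]:
    """For each position t, return the next n tokens, tokens[t+1 : t+1+n].

    Positions near the end that lack n future tokens are dropped.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    return [list(tokens[t + 1 : t + 1 + n]) for t in range(len(tokens) - n)]


def multi_token_loss(head_probs: List[List[Dict[int, float]]],
                     targets: List[List[int]]) -> float:
    """Mean negative log-likelihood over all positions and heads.

    head_probs[t][k] maps token -> probability assigned by (hypothetical)
    head k, which predicts the token at offset k + 1 from position t.
    """
    total, count = 0.0, 0
    for t, future in enumerate(targets):
        for k, token in enumerate(future):
            total -= math.log(head_probs[t][k][token])
            count += 1
    if count == 0:
        raise ValueError("no targets to score")
    return total / count
```

For example, `multi_token_targets([10, 11, 12, 13, 14], 2)` pairs each position with its next two tokens, giving `[[11, 12], [12, 13], [13, 14]]`. With `n = 1` the targets reduce to ordinary next-token prediction.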

Furthermore, the video examines the capabilities of Devin, an AI agent, through tasks it was asked to perform, such as building a website for playing chess against an AI model and visualizing Antarctic sea temperature data. Devin handled these diverse tasks and deployed functional applications, although it encountered some bugs along the way. The video also discussed the potential impact of wearable devices such as the Frame AI glasses, which are designed for developers and combine AI with augmented reality features. The glasses aim to provide an open-source, affordable, and capable platform for a range of AI applications.

Lastly, the video introduces Frame in more detail, focusing on its integrated AI assistant, Noa. Noa can provide real-time information, generate images, and assist users with various tasks. The demonstration shows the device answering questions, reporting the weather, and generating images from user prompts. Frame is expected to enter production and fulfillment soon, offering a glimpse of the future of AI-enhanced wearable technology. Taken together, the video surveys key advances in AI technology and its applications.