The video reviews Intel’s new Arc Pro B60 GPU, highlighting its competitive hardware, affordability, and potential to disrupt Nvidia’s dominance in AI and professional markets, although software support and ecosystem maturity remain limitations. The B60 offers promising performance and flexibility, but Nvidia still leads in AI workload efficiency and compatibility, making it the more practical choice for now.

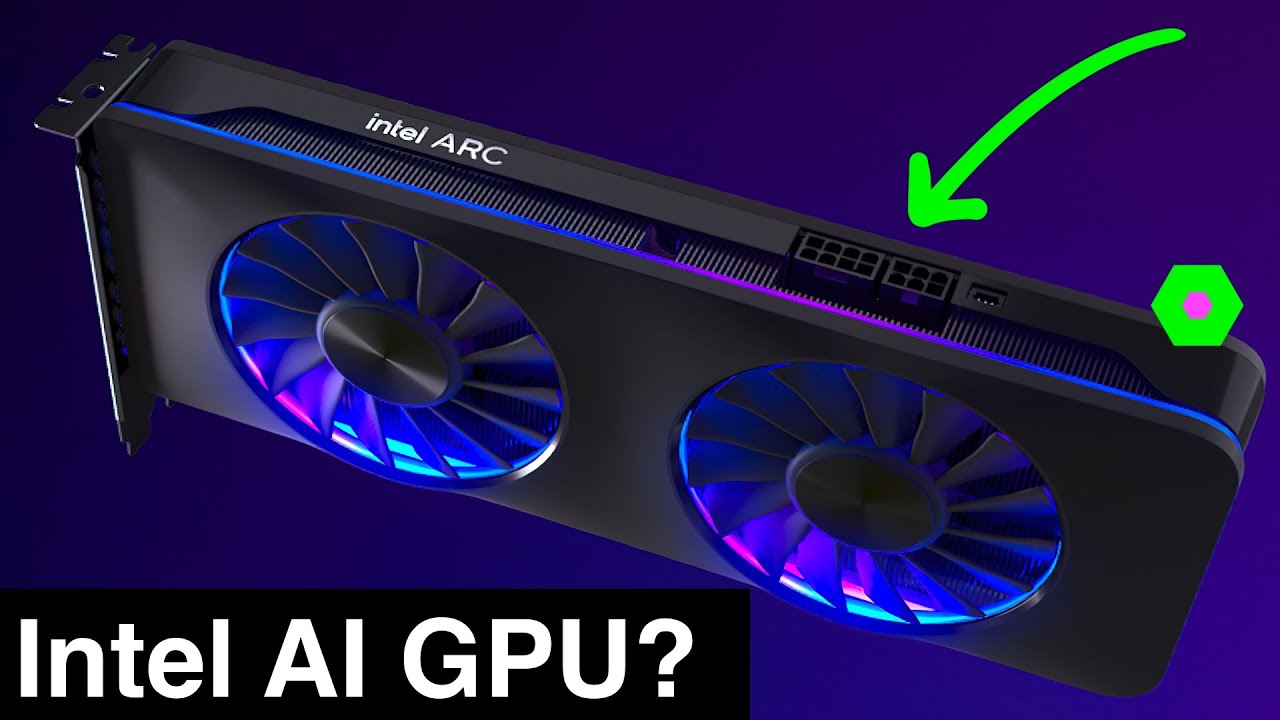

The video discusses Intel’s announcement at Computex 2025 of its new Arc Pro B60 GPU, which aims to challenge Nvidia in the AI and professional GPU markets. The move marks a significant shift in the industry, as Nvidia has long held a near-monopoly with high-priced GPUs that dominate AI training and inference. Intel’s new GPUs, including the B50 and B60 models, are designed as more affordable options for local AI deployment, potentially disrupting Nvidia’s pricing and market control.

Intel’s Arc Pro GPUs target the professional and server markets. The B60 has 24GB of VRAM and promises performance roughly comparable to Nvidia’s RTX 3090 or 3090 Ti. The B50, with 16GB of VRAM, is priced around $300, while the B60 is expected to cost under $1,000. Despite these attractive price points, the software ecosystem and tooling for Intel’s GPUs are still developing, so the cards are less readily compatible with popular AI frameworks like PyTorch, which are optimized primarily for Nvidia’s CUDA platform.

Performance-wise, the Intel Arc Pro B60 is billed as roughly on par with high-end Nvidia GPUs like the 3090, at a lower power draw and with more flexible hardware options such as dual-GPU configurations and various form factors. In raw AI token throughput, however, the B60 currently lags behind Nvidia’s offerings, performing only slightly better than lower-tier GPUs like the RTX 5060 Ti. Intel therefore still has a long way to go to catch up with Nvidia’s mature ecosystem and AI performance benchmarks.

Intel is positioning these GPUs as part of its broader “Project Battlematrix,” which aims to enable flexible multi-GPU configurations and high-bandwidth communication via PCIe 5.0. Intel emphasizes the openness of its platform, which lets board partners create diverse GPU designs, including fanless and multi-GPU setups. The company also highlights efficiency, with low TDPs and the potential to combine VRAM across multiple GPUs, which could be advantageous for large-scale AI deployments.
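
To make the multi-GPU VRAM point concrete, here is a rough back-of-the-envelope sketch of how many 24GB B60 cards it would take to hold a model’s weights. Only the 24GB figure comes from the video; the function names, the 20% overhead allowance, and the example model sizes are illustrative assumptions. The sketch also assumes the weights can be split evenly across cards, which depends on the inference framework.

```python
import math

B60_VRAM_GB = 24  # per-card VRAM stated in the video summary


def weights_gb(params_billions: float, bytes_per_param: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * bytes_per_param


def cards_needed(params_billions: float, bytes_per_param: float,
                 vram_per_card_gb: float = B60_VRAM_GB,
                 overhead_fraction: float = 0.2) -> int:
    """Smallest number of cards whose combined VRAM holds weights plus overhead.

    overhead_fraction is an assumed allowance for KV cache and activations,
    not a measured value. Even sharding across cards is also assumed.
    """
    required_gb = weights_gb(params_billions, bytes_per_param) * (1 + overhead_fraction)
    return math.ceil(required_gb / vram_per_card_gb)


if __name__ == "__main__":
    # Hypothetical examples: (label, parameters in billions, bytes per parameter)
    for label, params, bpp in [("8B @ 16-bit", 8, 2.0),
                               ("70B @ 8-bit", 70, 1.0),
                               ("70B @ 4-bit", 70, 0.5)]:
        print(f"{label}: {cards_needed(params, bpp)} x B60")
```

Under these assumptions, a 70B-parameter model quantized to 4 bits needs about 42GB including overhead, so it would not fit on one B60 but would fit across two.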

Despite the promising hardware features and competitive pricing, the state of Intel’s AI tooling and ecosystem support remains a major hurdle. The Arc Pro B60 still trails Nvidia’s GPUs in AI performance, and its software support for training and inference is not yet mature. The speaker concludes that, for now, Nvidia remains the more practical choice for most users because of its better compatibility and proven performance, but that Intel’s efforts could lead to more competition and innovation in the future. The audience is encouraged to consider their options and stay tuned for further developments.