Lecture 6 of Stanford’s CME295 course explores enhancing large language models’ reasoning abilities through techniques like chain of thought prompting and reinforcement learning with verifiable rewards, introducing the GRPO algorithm for effective training. It also discusses evaluation benchmarks, practical training considerations, and methods such as distillation to improve and scale reasoning capabilities in LLMs.

In Lecture 6 of Stanford’s CME295 course on Transformers and Large Language Models (LLMs), the focus is on LLM reasoning, a rapidly evolving topic that builds on previous lectures covering pre-training, fine-tuning, and preference tuning of language models. The lecture begins by revisiting these foundational steps: pre-training teaches the model language and code structure through large-scale next-token prediction; fine-tuning adapts the model for specific tasks like answering questions using high-quality supervised data; and preference tuning aligns the model with human preferences via reinforcement learning (RL), particularly using Proximal Policy Optimization (PPO) variants. This background sets the stage for exploring how reasoning capabilities can be integrated into LLMs.

The lecture defines reasoning as the ability to solve problems, especially those requiring multi-step processes such as math or coding problems, contrasting them with straightforward knowledge retrieval questions. To enhance reasoning, the concept of “chain of thought” prompting is introduced, where the model is encouraged to generate intermediate reasoning steps before producing a final answer. This approach leverages the model’s next-token prediction training by decomposing complex problems into simpler subproblems, effectively increasing the model’s computational budget and improving problem-solving accuracy. The recent surge in reasoning models, starting with OpenAI’s 2024 releases and followed by other labs, highlights the novelty and importance of this research area.

Benchmarks for evaluating reasoning models focus on coding and math problem-solving, using datasets like HumanEval, Codeforces, and AIM (a math Olympiad exam). A key metric discussed is “pass at K,” which estimates the probability that at least one out of K generated attempts solves the problem correctly. This metric accounts for the diversity of generated solutions, influenced by parameters like temperature, balancing between solution quality and variety. The lecture also explains how to statistically estimate pass at K from multiple samples, emphasizing the importance of generating diverse yet accurate responses to maximize success probability.

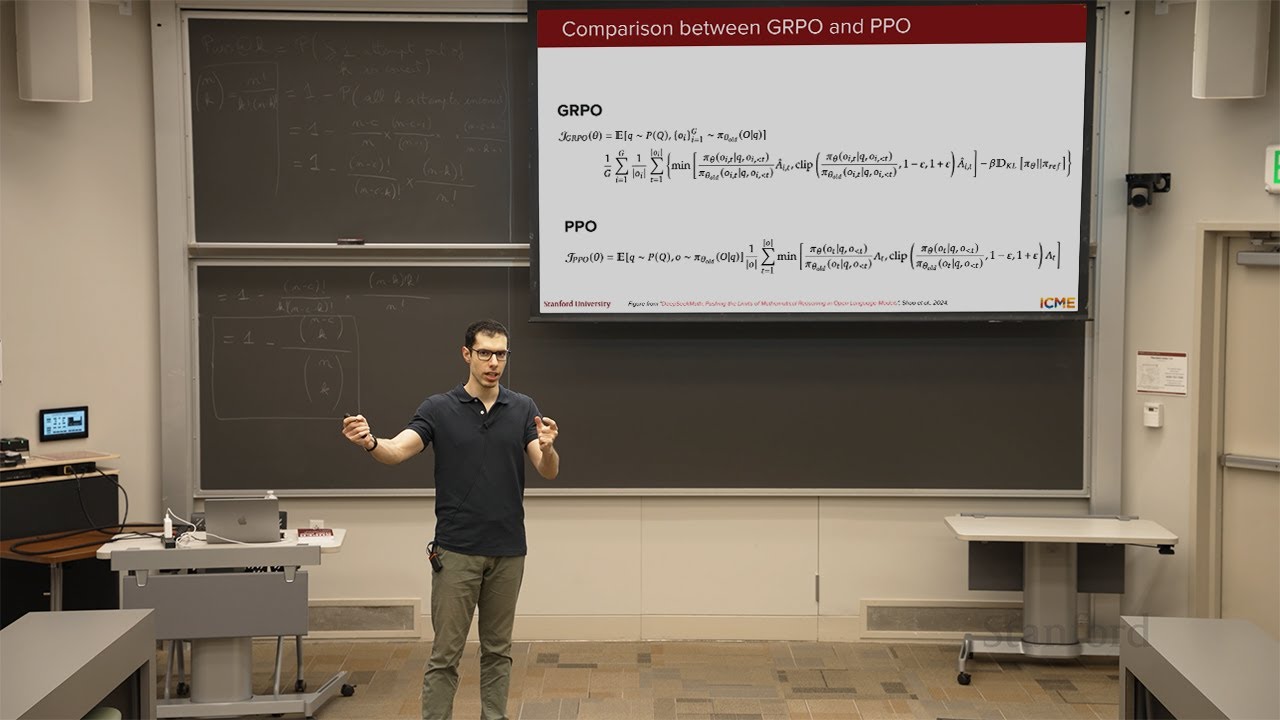

The core technical challenge addressed is how to train reasoning models effectively at scale. Supervised fine-tuning with human-written reasoning chains is difficult due to data scarcity and potential mismatch between human and model reasoning. Instead, reinforcement learning is proposed, using verifiable rewards based on whether the model produces a reasoning chain and whether the final solution is correct. The GRPO (Group Relative Policy Optimization) algorithm is introduced as a preferred RL method for reasoning tasks, differing from PPO by computing advantages relative to a group of completions rather than relying on a value function. This approach simplifies training and better suits the language modeling context.

Finally, the lecture covers practical considerations and recent advances in reasoning model training, including controlling reasoning length to manage computational cost and user pricing, and addressing issues like language consistency in generated reasoning chains. The DeepSeek R1 model is presented as a case study, illustrating a multi-stage training pipeline combining supervised fine-tuning, RL with verifiable rewards, and data filtering via rejection sampling to produce a high-quality reasoning model. The lecture concludes with a discussion on distillation techniques to transfer reasoning capabilities from large models to smaller ones efficiently, highlighting the ongoing research and engineering efforts to make reasoning LLMs more accessible and effective.