The final lecture of Stanford’s CS231N course highlights the evolution of computer vision from biological origins to deep learning breakthroughs, emphasizing a human-centered approach that leverages AI to augment human abilities, address real-world challenges like healthcare labor shortages, and respect privacy and ethical considerations. It also explores the integration of AI with robotics and brain-computer interfaces to create systems that align with human values and needs, advocating for interdisciplinary collaboration to ensure AI serves as a beneficial augmentation tool for society.

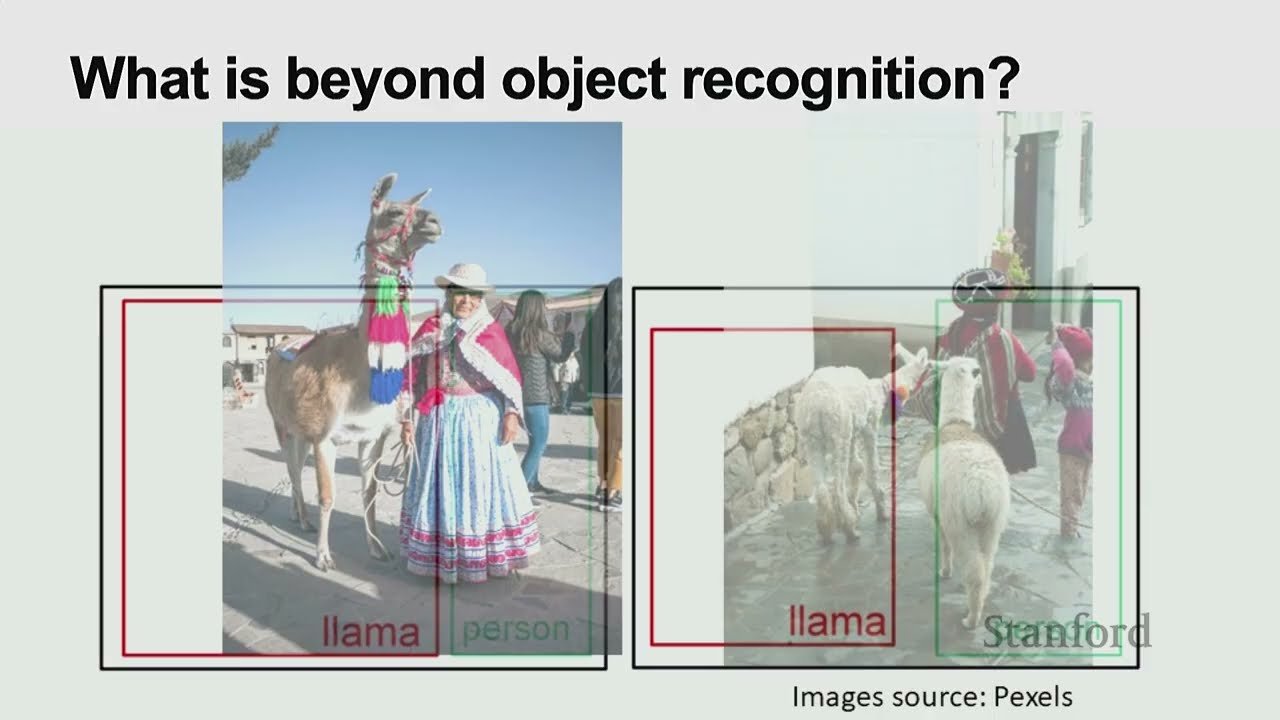

The lecture offers a reflective, forward-looking perspective on AI in computer vision, with an emphasis on a human-centered approach. It begins by tracing the evolutionary origins of vision: the development of photosensitive cells in ancient animals set off an evolutionary arms race that led to the Cambrian explosion and established vision as a primary sensory system. This biological foundation inspired early computer vision efforts, which initially aimed to replicate human visual capabilities, particularly object recognition. The lecture then reviews the historical progression from psychology-inspired part-based models to statistical machine learning approaches, culminating in the deep learning revolution triggered by the ImageNet dataset and convolutional neural networks around 2012. This breakthrough enabled machines to recognize objects with unprecedented accuracy, although challenges remain in understanding complex scenes and the relationships between objects.

Moving beyond replicating human vision, the lecture explores AI’s potential to see what humans cannot, such as fine-grained object categorization that surpasses typical human expertise. Examples include recognizing thousands of bird species or car models, which most people cannot do reliably. AI’s ability to analyze visual data at large scale has also been applied to social science research, revealing correlations between car types and socioeconomic factors across cities. The lecture then turns to human limitations such as attention constraints and visual biases, and stresses that bias in AI systems must be addressed to prevent unfair outcomes. It also touches on privacy, presenting approaches such as specialized lenses combined with software that recognize human activities without compromising individual privacy.

The third part of the lecture focuses on building AI that aligns with human needs and values, particularly by augmenting human labor rather than replacing it. It highlights the growing labor shortages in healthcare and eldercare, where AI and smart sensors can provide critical support. Examples include AI systems that monitor hand hygiene in hospitals to reduce infections, track patient movements in ICUs to aid recovery, and help seniors age independently at home. These applications show how AI can fill “dark spaces” in healthcare, the places where human attention is limited, and so improve safety and efficiency. The discussion emphasizes designing AI systems that respect human values and address real-world challenges, rather than merely pursuing technological advancement.

Robotics is presented as a key frontier in connecting perception with action, enabling AI not only to see but also to interact with the physical world. Despite significant progress, current robots remain slow and limited to constrained tasks. The lecture showcases research that integrates large language models and visual language models so that robots can understand open-ended instructions and perform diverse household activities in realistic simulated environments. A human-centered survey informed the selection of tasks, favoring those that people actually want robots to help with, such as cleaning and cooking, over tasks they are less interested in delegating. This approach keeps robotic development aligned with human preferences and societal needs. The lecture also highlights ongoing challenges in robotic learning, including the need for better benchmarks and more generalizable capabilities.

In conclusion, the lecture underscores the close relationship between AI, cognitive science, and neuroscience, advocating for a human-centered AI that augments human abilities and respects human values. It presents advances such as brain-computer interfaces that let users control robots through thought, illustrating how AI could assist severely paralyzed patients. The overarching message is that AI should be developed not just to perform tasks autonomously but to enhance human life by addressing labor shortages, healthcare challenges, and privacy concerns. This human-centered perspective calls for continued interdisciplinary collaboration and ethical vigilance so that AI serves as a powerful augmentation tool for humanity’s benefit.