Colin McIll presents Pol.is, an open-source platform that uses classical algorithms like PCA and K-means to analyze large-scale opinion data gathered through participant voting, enabling interpretable clustering of worldviews for applications in governance and social research worldwide. The talk highlights Pol.is’s robustness, challenges with adversarial manipulation, and the potential integration of large language models and decentralized identity to enhance summarization, moderation, and vote prediction while maintaining transparency and democratic engagement.

In this talk, Colin McIll, the creator of the open-source platform Pol.is, provides an overview of the platform’s background, methodology, and applications. Pol.is is designed as a tool for large-scale opinion gathering and analysis, where participants submit atomic statements and vote on others’ statements by agreeing, disagreeing, or passing. This voting data forms a sparse matrix that is analyzed using classical techniques like PCA and K-means clustering to identify groups of participants with similar worldviews. The platform has been deployed globally, including by governments in the UK, Finland, Singapore, Taiwan, and the Netherlands, and is used both by hobbyists and institutional actors.

A key theme of the discussion is the robustness and interpretability of Pol.is’s methodology. Colin emphasizes that the use of well-understood, classical algorithms like PCA and K-means provides a level of trust and stability, avoiding the unpredictability of deep learning models. However, the system is not immune to adversarial manipulation, such as coordinated voting or synthetic agents designed to skew clusters. Real-world examples, like Uber’s attempt to influence a Taiwanese policy conversation, illustrate both the challenges and the platform’s natural resilience to some naive attacks. The conversation also touches on the potential future integration of zero-knowledge proofs and decentralized identity verification to improve sample authenticity.

The talk explores the role of large language models (LLMs) in enhancing Pol.is, particularly in tasks like summarization, moderation, and vote prediction. While LLMs show promise in generating grounded summaries and predicting participant votes with high accuracy, this capability also introduces risks, such as the creation of synthetic voters or the lazy replacement of human input in social research. The team is actively working on methods to ground LLM outputs in actual data to reduce hallucinations and improve reliability. Additionally, LLMs offer alternative approaches to topic modeling and clustering, complementing traditional statistical methods.

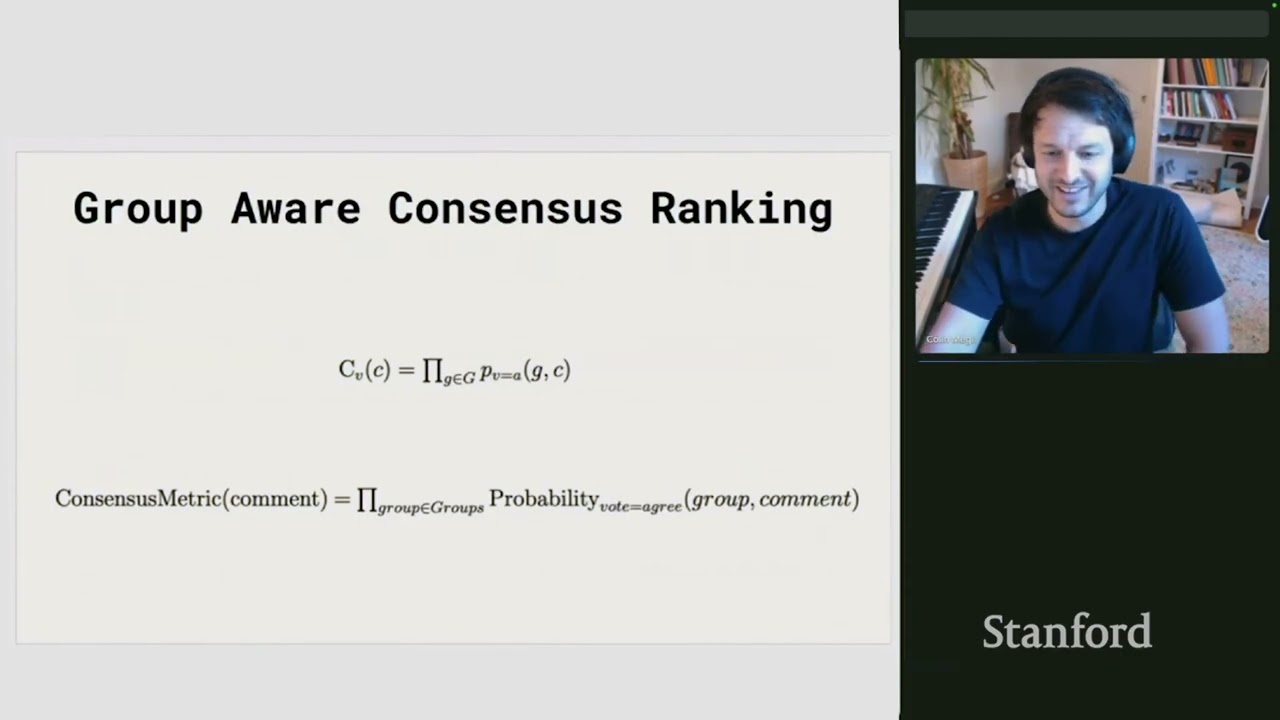

Several case studies highlight Pol.is’s practical applications in diverse contexts, including land use discussions in Washington State, policy innovation units in the UK and Finland, and large-scale participatory surveys conducted by the UN. The platform’s flexibility allows it to be used for emergent surveys where participants shape the conversation by submitting statements, rather than responding to fixed questions. This emergent nature, however, is balanced by the structured, algorithmic clustering of voting data, which provides interpretable groupings of opinions. The discussion also covers the adaptation of Pol.is’s methodology in Twitter’s Community Notes system for misinformation fact-checking, illustrating its broader potential for collective response beyond misinformation.

Finally, the conversation addresses future directions and challenges, including improving clustering algorithms, enhancing vote prediction, and enabling more participatory meta-level engagement with the data. Colin and the discussants consider how participants might contribute to shaping the interpretation of results, potentially through open data access and collaborative analysis. The talk concludes with reflections on the platform’s role in political science and technology, emphasizing the importance of open-source, transparent tools for mapping public views and fostering democratic deliberation at scale. The integration of AI and human preferences in this space presents both exciting opportunities and complex risks that require ongoing research and thoughtful implementation.