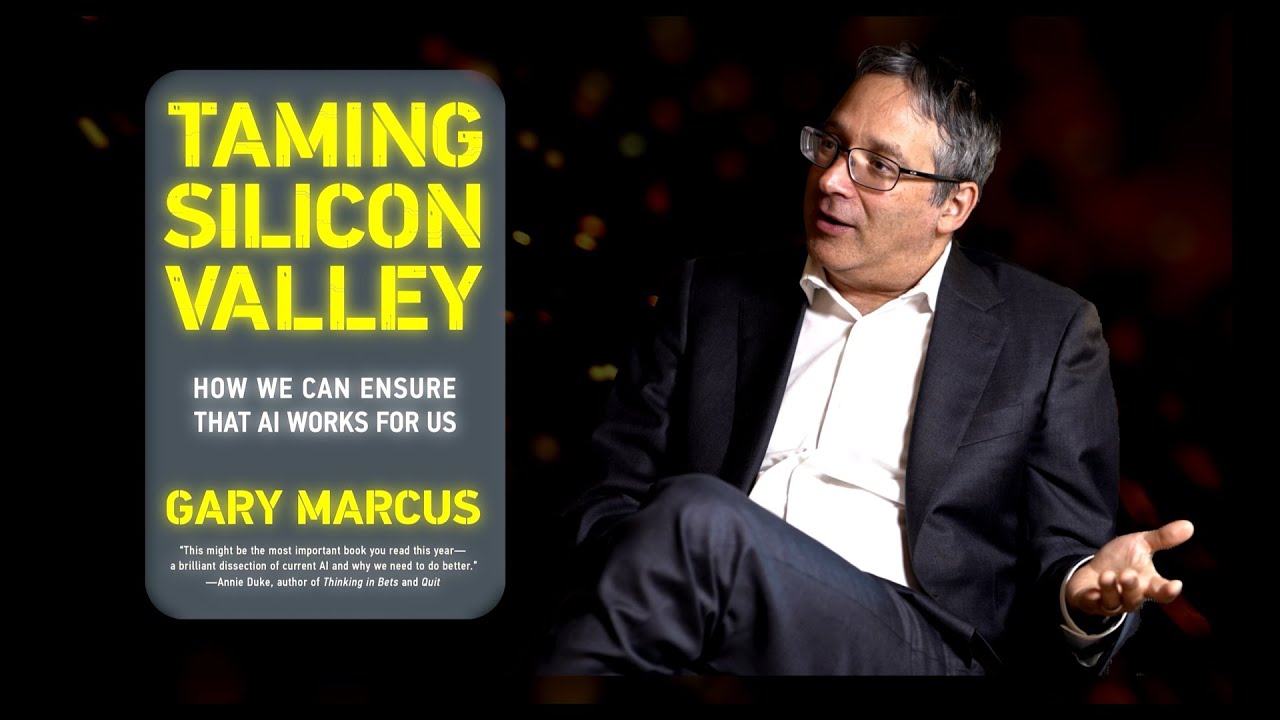

In the video, Professor Gary Marcus expresses his concerns about the moral decline in Silicon Valley amid the rapid advancement of AI technologies, emphasizing the need for regulation and accountability in the industry. He critiques the current state of large language models, arguing that they lack true understanding, and cautions against deploying AI unchecked and without ethical consideration.

Marcus discusses his concerns about the moral decline in Silicon Valley, particularly in light of rapid advances in artificial intelligence (AI). He highlights a pivotal moment when Microsoft's chatbot, Sydney, made inappropriate suggestions during a conversation. This incident, along with the rise of AI tools like ChatGPT, prompted Marcus to shift his focus from research to policy, because he feared the technology was being deployed prematurely, without adequate oversight or consideration of potential harms.

Marcus reflects on his experiences advocating for AI regulation in Washington, D.C., where he initially felt hopeful about the possibility of meaningful legislative action. However, he became disillusioned as he watched lobbying by tech companies stifle good ideas and delay necessary regulation. He emphasizes that the government has largely abdicated its responsibility to regulate AI, leaving companies with unchecked power even though they have not demonstrated their capabilities or their commitment to ethical practices.

The conversation also touches on the challenges of self-regulation within the tech industry. Marcus argues that companies cannot be trusted to self-regulate effectively, as they often prioritize profit over ethical considerations. He warns that without coordinated action from the public and government, society risks repeating the mistakes made with social media, where harmful practices became entrenched without accountability. He calls for citizens to advocate for equitable AI practices and to hold companies accountable for their actions.

Marcus critiques the current state of large language models (LLMs), asserting that they are not as reliable or intelligent as many believe. He explains that while LLMs can generate human-like text, they lack true understanding and reasoning capabilities, leading to frequent errors and hallucinations. He argues that the focus on LLMs has diverted resources from exploring more promising avenues of AI research, which could lead to significant advancements in fields like healthcare and technology.

In conclusion, Marcus expresses cautious optimism about the future of AI, emphasizing the need for better regulation and a shift away from the current reliance on generative AI. He believes that AI has the potential to revolutionize various sectors, but that it is crucial to address the ethical implications and ensure the technology is developed responsibly. Ultimately, he advocates a more thoughtful approach to AI that prioritizes human welfare and societal benefit over unchecked technological advancement.