Telegram has announced a policy shift to comply with government requests for user data and will implement AI-driven content moderation to address concerns about illegal activities. This change follows CEO Pavel Durov’s arrest in France and has raised concerns among users about privacy, potential censorship, and the ambiguity of what constitutes “unsafe” content.

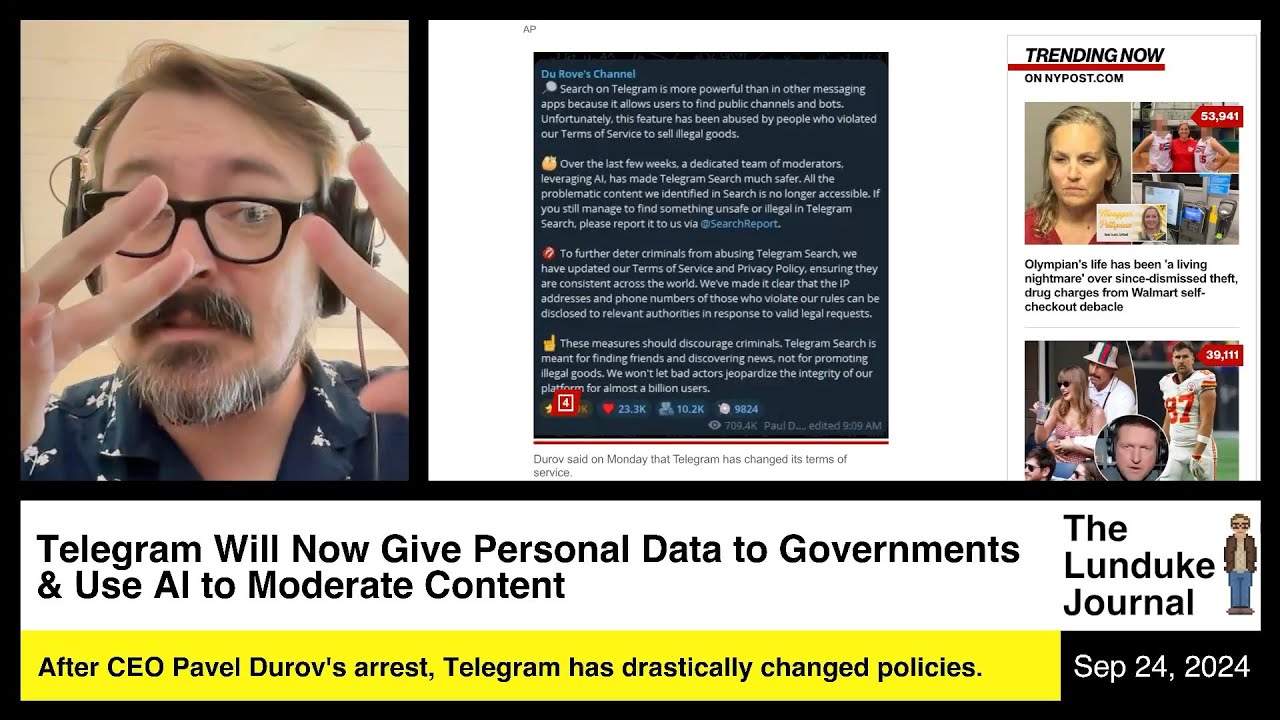

In a significant policy shift, Telegram has announced that it will now comply with government requests for user data, including IP addresses and phone numbers. The change comes in the wake of CEO Pavel Durov's recent arrest in France; he has been released on bail but is not allowed to leave the country. In response to pressure from various governments, Telegram is adopting stricter moderation practices and using artificial intelligence to scan and manage content on the platform. The company aims to address concerns about illegal activity while maintaining the integrity of its user base, which has grown to nearly a billion users.

Durov's arrest in late August prompted a swift reaction from Telegram, which began phasing out features that could be exploited for illicit purposes. For instance, the "People Nearby" feature, used by only a small fraction of users, was removed because of problems with bots and scammers. In a post on social media, Durov highlighted the introduction of new features and the need for moderation to protect the platform's reputation. He emphasized that while the vast majority of users are law-abiding, a small percentage engaged in criminal activity had tarnished Telegram's image.

The new moderation policies will rely on AI systems that flag content deemed "unsafe," raising concerns about how subjective that classification is. Durov's statements indicate that the platform will target not only illegal content but also anything that could be considered unsafe, a category that could cover a wide range of topics. This vague definition of "unsafe" content has fueled fears of censorship and of the consequences for free speech on the platform. Users are left asking what criteria will be used to judge safety and who will ultimately make these decisions.

Telegram's updated terms of service also permit the disclosure of user data in response to "valid legal requests," a phrase open to various interpretations. Because the terms do not define what makes a request valid, the change raises concerns that user data could be misused. Critics argue that such abrupt changes to the terms of service may be unenforceable and could invite legal challenges from users who feel their rights have been compromised. The platform's move toward complying with government demands marks a clear departure from its previous stance on user privacy.

How Telegram's user base will react to these changes remains uncertain. Some users may welcome the efforts to combat illegal activity, while others may be put off by the new AI moderation and data-sharing policies. Increased scrutiny and the loss of anonymity could drive users away from the platform. The coming weeks will be crucial in determining how Telegram's community responds, and whether the platform can retain its users amid growing concerns over privacy and content moderation.