The video explores test-time active fine-tuning of large language models (LLMs), framed as transductive active fine-tuning. At test time, the model retrieves data relevant to the current prompt and fine-tunes on it, which improves its performance on that specific task. The video introduces SIFT, a data selection method that avoids redundant examples and instead selects the data most informative about the response to the prompt. SIFT shows significant improvements over traditional nearest-neighbor retrieval, and the video emphasizes the potential for continuous learning in LLMs.

The speaker motivates transductive active fine-tuning with an analogy to Google Maps: zooming in loads more detailed tiles only for the area you are looking at. In the same way, an LLM can retrieve data relevant to the current prompt at test time and fine-tune on it. This improves its performance on that task without having to solve every possible problem at once. The speaker argues that focusing on the specific problems of interest makes learning both more efficient and more effective.

The speaker introduces the Pile language modeling benchmark. It is built on a large dataset spanning many kinds of text, including code, math, and general web content, and it evaluates how well a language model predicts the next token in a sequence. Recent results show that learning at test time can substantially outperform the previous state of the art, even with much smaller models. The speaker explains that learning at test time uses the test instance itself to adapt the model to that instance. This contrasts with the traditional machine learning practice of keeping training and testing as separate phases.
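The summary does not say which metric the benchmark reports. The sketch below is an illustration only. It assumes the model exposes the log-probability it assigned to each actual next token. Next-token prediction is commonly scored by average negative log-likelihood, by perplexity, or by bits per byte. Bits per byte is a tokenizer-independent normalization often reported for the Pile. The function names are hypothetical.

```python
import math
from typing import Sequence


def mean_nll(token_logprobs: Sequence[float]) -> float:
    """Average negative log-likelihood (in nats) of the observed next tokens."""
    return -sum(token_logprobs) / len(token_logprobs)


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Exponentiated average NLL: lower means better next-token prediction."""
    return math.exp(mean_nll(token_logprobs))


def bits_per_byte(token_logprobs: Sequence[float], text: str) -> float:
    """Total NLL converted from nats to bits, normalized by the UTF-8 byte count of the text."""
    total_nats = -sum(token_logprobs)
    return total_nats / (math.log(2) * len(text.encode("utf-8")))


# Hypothetical log-probabilities a model assigned to the 4 tokens of "abcd"
logprobs = [math.log(0.5)] * 4
print(perplexity(logprobs))             # about 2.0
print(bits_per_byte(logprobs, "abcd"))  # about 1.0
```

Bits per byte divides by the length of the raw text instead of the number of tokens. This lets models with different tokenizers be compared on the same footing.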

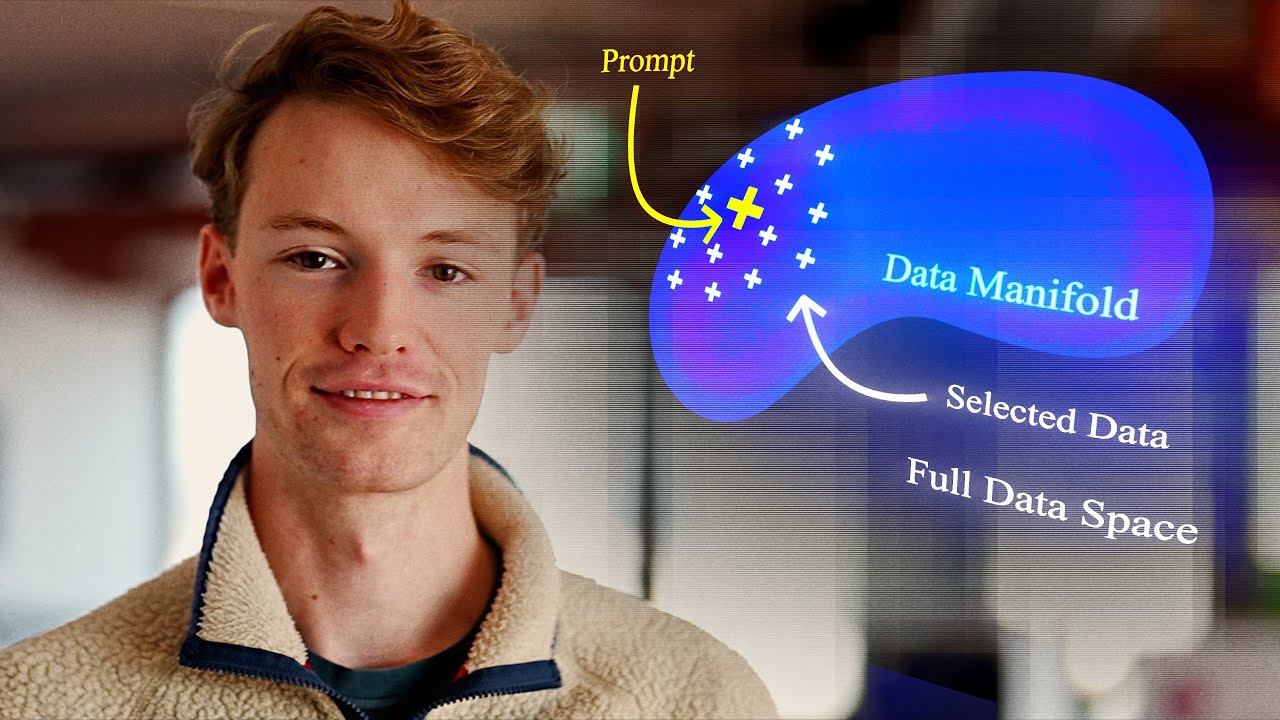

A key idea in the discussion is local models. For each prediction, a separate model is trained on relevant data retrieved from a memory. Each local model only has to fit the function near the query, so this approach can fit complex functions without increasing the overall model size. The speaker stresses that accurate predictions still depend on good representations and abstractions, and that selecting the right data from memory is crucial for local learning to succeed. The video also discusses the limitations of traditional retrieval methods such as k-nearest neighbors (k-NN), which may retrieve redundant rather than informative data.
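Local models can be illustrated outside the LLM setting with a toy one-dimensional sketch. The function names are hypothetical and the data is synthetic. The memory holds points from y = x², and a single global line fits it poorly. A separate line fitted per query on its k nearest neighbors in memory predicts accurately, even though each local model is just as small as the global one.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx if sxx > 0 else 0.0
    return my - b * mx, b


def global_predict(memory: List[Point], x: float) -> float:
    """One model fitted once on the whole memory."""
    a, b = fit_line([m[0] for m in memory], [m[1] for m in memory])
    return a + b * x


def local_predict(memory: List[Point], x: float, k: int = 5) -> float:
    """A fresh model fitted per query on its k nearest neighbors in memory."""
    neighbours = sorted(memory, key=lambda m: abs(m[0] - x))[:k]
    a, b = fit_line([m[0] for m in neighbours], [m[1] for m in neighbours])
    return a + b * x


memory = [(i / 10, (i / 10) ** 2) for i in range(-20, 21)]
print(global_predict(memory, 0.5))  # roughly 1.4
print(local_predict(memory, 0.5))   # about 0.27 (true value 0.25)
```

The per-query fit costs extra computation at prediction time. That is the trade-off local learning makes in exchange for not enlarging the model.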

The speaker then presents SIFT, a data selection method that chooses non-redundant data to maximally reduce the model's uncertainty about its response to the prompt. It works by estimating this uncertainty and then selecting the data that reduces it the most. The speaker reports significant improvements over nearest-neighbor retrieval, particularly when redundancy in the retrieved data would otherwise degrade performance. Experiments show that these gains are robust across the different subdomains of natural language in the benchmark.
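The summary does not spell out SIFT's objective. The following is a minimal sketch under stated assumptions, not the authors' implementation. Uncertainty is modeled as the posterior variance of a Gaussian process with a linear kernel over hypothetical embedding vectors, with an assumed observation noise `noise`. Data is chosen greedily, so that each pick most reduces the variance at the query. All function names are hypothetical. A k-NN baseline is included to show the redundancy problem on a memory that contains an exact duplicate.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def cosine(u: Vector, v: Vector) -> float:
    return dot(u, v) / math.sqrt(dot(u, u) * dot(v, v))


def knn_select(query: Vector, candidates: List[Vector], k: int) -> List[int]:
    """Baseline: the k candidates most similar to the query (ignores redundancy)."""
    order = sorted(range(len(candidates)), key=lambda i: -cosine(query, candidates[i]))
    return order[:k]


def _prior_cov(points: List[Vector]) -> List[List[float]]:
    # Assumption: linear kernel k(x, y) = <x, y> as the prior covariance.
    return [[dot(a, b) for b in points] for a in points]


def _condition(cov: List[List[float]], j: int, noise: float) -> List[List[float]]:
    # Gaussian conditioning on one noisy observation at point j (rank-one update).
    denom = cov[j][j] + noise
    n = len(cov)
    return [[cov[a][b] - cov[a][j] * cov[j][b] / denom for b in range(n)] for a in range(n)]


def sift_select(query: Vector, candidates: List[Vector], k: int, noise: float = 0.1) -> List[int]:
    """Greedy sketch: each step adds the candidate that most lowers the variance at the query."""
    points = [query] + list(candidates)  # index 0 is the query
    cov = _prior_cov(points)
    selected: List[int] = []
    for _ in range(min(k, len(candidates))):
        best, best_var = -1, math.inf
        for i in range(1, len(points)):
            if i - 1 in selected:
                continue  # simplification: never pick the same item twice
            var_if_added = cov[0][0] - cov[0][i] ** 2 / (cov[i][i] + noise)
            if var_if_added < best_var:
                best, best_var = i, var_if_added
        selected.append(best - 1)
        cov = _condition(cov, best, noise)
    return selected


def posterior_variance(query: Vector, candidates: List[Vector],
                       selected: List[int], noise: float = 0.1) -> float:
    """Remaining uncertainty at the query after observing the selected candidates."""
    points = [query] + list(candidates)
    cov = _prior_cov(points)
    for i in selected:
        cov = _condition(cov, i + 1, noise)
    return cov[0][0]


# Memory with an exact duplicate: items 0 and 1 are identical.
query = [1.0, 0.8]
memory = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
print(knn_select(query, memory, 2))   # [0, 1]: both copies of the duplicate
print(sift_select(query, memory, 2))  # [0, 2]: the duplicate is skipped
```

Conditioning on the first copy already captures almost everything the duplicate could add, so the sketch moves on to the item pointing in the other direction. In this toy example, its selection leaves a posterior variance of about 0.15 at the query, versus about 0.69 for the k-NN pick.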

In conclusion, the speaker emphasizes the potential for continuous learning and adaptation in LLMs. These models should not be static, but should evolve as they encounter new data and tasks. The discussion highlights the need to balance exploration and exploitation in data selection, drawing a parallel to reinforcement learning. The video ends with a call for further research on these ideas, particularly toward open-ended systems that keep learning and improving their representations over time.