The video argues that large language models do not actually think or reason step-by-step as humans do: their verbalized “chain of thought” is a superficial, engineered output rather than a faithful trace of their internal reasoning. Instead, models rely on parallel, heuristic shortcuts within their neural networks, which makes their apparent reasoning more a matter of pattern matching than genuine understanding, and true superintelligence would require developing metacognitive abilities.

The video begins by challenging the common perception that large language models (LLMs) think step-by-step or reason in a human-like manner. It explains that recent research has shown that the intermediate reasoning these models generate, often called “chain of thought”, is not actually how they arrive at their answers. Instead, these verbalized steps are engineered outputs that do not reflect the model’s true internal mechanics. If so, the models’ apparent reasoning may be more superficial than previously believed, which complicates efforts to surpass human intelligence with current AI architectures.

The speaker discusses recent studies showing that replacing the explicit verbal reasoning with meaningless filler tokens or “pause” tokens can still yield similar, or even better, performance on reasoning tasks. This indicates that the logical coherence of the verbalized reasoning is not essential to the model’s success. Historically, models like Claude achieved strong reasoning performance without relying on test-time compute or verbalized reasoning, which implies that much of the reasoning may be happening internally, outside the scope of what we currently measure or observe during inference.
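The comparison these studies draw can be made concrete with a small sketch of the three input variants such an experiment might contrast: a direct answer, a verbalized chain of thought, and a filler sequence of the same length that carries no reasoning content. The helper `build_variants`, the whitespace tokenization, and the default `.` filler token are illustrative assumptions, not the setup of any specific paper; a pause-token variant would pass a dedicated token such as a hypothetical `<pause>` instead.

```python
from typing import Dict, List


def build_variants(question: str, cot_steps: List[str], answer: str,
                   filler: str = ".") -> Dict[str, str]:
    """Build three strings for the same problem (hypothetical helper).

    direct: question followed immediately by the answer.
    cot:    question, the verbalized reasoning, then the answer.
    filler: question, as many filler tokens as the reasoning has tokens,
            then the answer. The model gets the extra positions (and thus
            extra computation) but none of the reasoning content.
    """
    cot_text = " ".join(cot_steps)
    # Assumption: whitespace splitting stands in for a real tokenizer.
    n_tokens = len(cot_text.split())
    filler_text = " ".join([filler] * n_tokens)
    return {
        "direct": f"Q: {question}\nA: {answer}",
        "cot": f"Q: {question}\n{cot_text}\nA: {answer}",
        "filler": f"Q: {question}\n{filler_text}\nA: {answer}",
    }


variants = build_variants(
    "What is 36 + 59?",
    ["6 + 9 = 15, write 5 carry 1.", "3 + 5 + 1 = 9."],
    "95",
)
for name, text in variants.items():
    print(f"--- {name} ---\n{text}")
```

Matching the filler length to the reasoning length is the design choice that matters: it separates the effect of having extra token positions from the effect of the words that occupy them.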

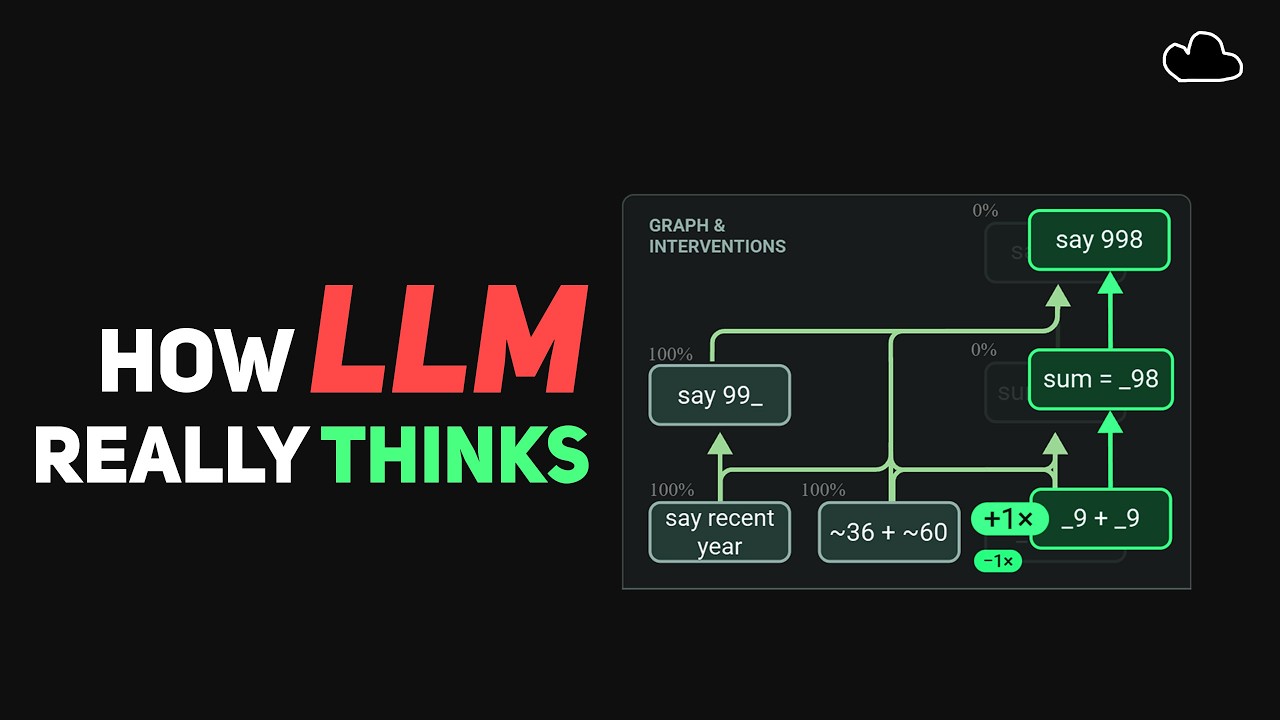

Further, the video explores how recent research using circuit tracing techniques shows that models do not work through problems step by step. Instead, different parts of the neural network activate simultaneously, each handling a different aspect of the problem (for example, one part focuses on the last digits while another estimates the approximate size of the answer), and these shortcuts are then combined to produce the final result. When asked to explain their reasoning, models generate plausible but often inaccurate sequential explanations that do not match their actual internal processes. This disconnect underscores that the models’ internal reasoning is fundamentally different from the coherent explanations they produce.
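The addition example can be illustrated with a toy sketch: two independent “pathways”, one that only pins down the rough size of the answer and one that only pins down its last digit, are combined into an exact sum, while a separate function produces the schoolbook explanation a model might give instead. This is a hypothetical analogy in ordinary Python, not a reconstruction of any model’s actual circuits; the function names and the rounding trick used for the magnitude estimate are assumptions chosen so that the combination is provably exact for non-negative integers.

```python
def magnitude_path(a: int, b: int) -> range:
    """Coarse pathway (toy): add a to b rounded half-up to the nearest ten.

    Rounding shifts b by between -5 and +4, so the exact sum is guaranteed
    to lie in this window of 10 consecutive integers.
    """
    b_rounded = (b + 5) // 10 * 10
    estimate = a + b_rounded
    return range(estimate - 5, estimate + 5)


def last_digit_path(a: int, b: int) -> int:
    """Precise pathway (toy): only the ones digits determine the sum's ones digit."""
    return (a % 10 + b % 10) % 10


def parallel_add(a: int, b: int) -> int:
    """Combine both pathways: 10 consecutive integers contain each last digit once."""
    window = magnitude_path(a, b)
    digit = last_digit_path(a, b)
    return next(n for n in window if n % 10 == digit)


def verbal_explanation(a: int, b: int) -> str:
    """What the system might *say* it did: the schoolbook carry algorithm (two-digit inputs)."""
    ones = a % 10 + b % 10
    carry = ones // 10
    tens = a // 10 + b // 10 + carry
    return (f"{a % 10} + {b % 10} = {ones}, write {ones % 10} and carry {carry}; "
            f"then add the tens: {a // 10} + {b // 10} + {carry} = {tens}.")


print(parallel_add(36, 59))  # → 95
print(verbal_explanation(36, 59))
```

Both routes arrive at 95 for 36 + 59, but the explanation describes a sequential carry procedure that `parallel_add` never performs, which mirrors the mismatch between internal process and stated reasoning described above.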

The speaker emphasizes that the true reasoning process in LLMs is still poorly understood. While some research suggests that models can learn to perform implicit reasoning internally without verbalizing it at test time, current architectures lack the metacognitive abilities necessary for genuine introspection or self-awareness. Anthropic’s recent research on neural activation patterns shows that models solve problems through parallel, heuristic-driven shortcuts rather than step-by-step logic, further evidence that their internal processes differ from human reasoning.
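The idea that a model can learn to reason implicitly can be sketched as a training curriculum. One family of approaches in the literature fine-tunes a model on targets from which the verbalized reasoning steps are removed a few at a time, so that the model gradually has to carry that computation internally. The sketch below only builds the curriculum data; the helper name `internalization_curriculum`, the `Example` record, and the one-step-per-stage schedule are illustrative assumptions, and the fine-tuning loop itself is omitted.

```python
from typing import List, NamedTuple


class Example(NamedTuple):
    prompt: str
    target: str


def internalization_curriculum(question: str, steps: List[str], answer: str,
                               steps_removed_per_stage: int = 1) -> List[Example]:
    """Build one training example per curriculum stage (hypothetical helper).

    Stage k drops the first k * steps_removed_per_stage reasoning steps from
    the target, so by the last stage the model is trained to emit the answer
    with no verbalized reasoning at all.
    """
    if steps_removed_per_stage < 1:
        raise ValueError("steps_removed_per_stage must be at least 1")
    curriculum = []
    removed = 0
    while True:
        kept = steps[removed:]
        target = " ".join(kept + [f"Answer: {answer}"])
        curriculum.append(Example(prompt=question, target=target))
        if not kept:  # the final stage has no verbalized steps left
            break
        removed += steps_removed_per_stage
    return curriculum


stages = internalization_curriculum(
    "What is 36 + 59?",
    ["6 + 9 = 15, carry 1.", "3 + 5 + 1 = 9."],
    "95",
)
for i, ex in enumerate(stages):
    print(i, ex.target)
```

Removing steps gradually, rather than training on bare answers from the start, is meant to give the model a path from explicit to implicit reasoning; whether the resulting internal computation resembles the removed steps is exactly the open question the video raises.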

In conclusion, the video argues that current AI models are unlikely to surpass human intelligence without developing true metacognitive capabilities. They model human knowledge and reasoning to a degree, but they lack the self-awareness and introspective reasoning that characterize human thought. The notion that LLMs can “think without thinking” is thus interpreted as models producing reasoning-like outputs without genuinely engaging in step-by-step thought. Achieving true superintelligence would require significant advances in enabling models to think more like humans, including metacognition and self-reflection.