In an interview, Anil Ananthaswamy discusses the mathematical foundations of machine learning, emphasizing the importance of understanding concepts like calculus, linear algebra, and the bias-variance trade-off for a broader audience, including journalists and policymakers. He highlights the elegance of machine learning algorithms, the significance of self-supervised learning, and the philosophical implications of AI, advocating for a deeper comprehension of these principles to address societal impacts and ethical considerations.

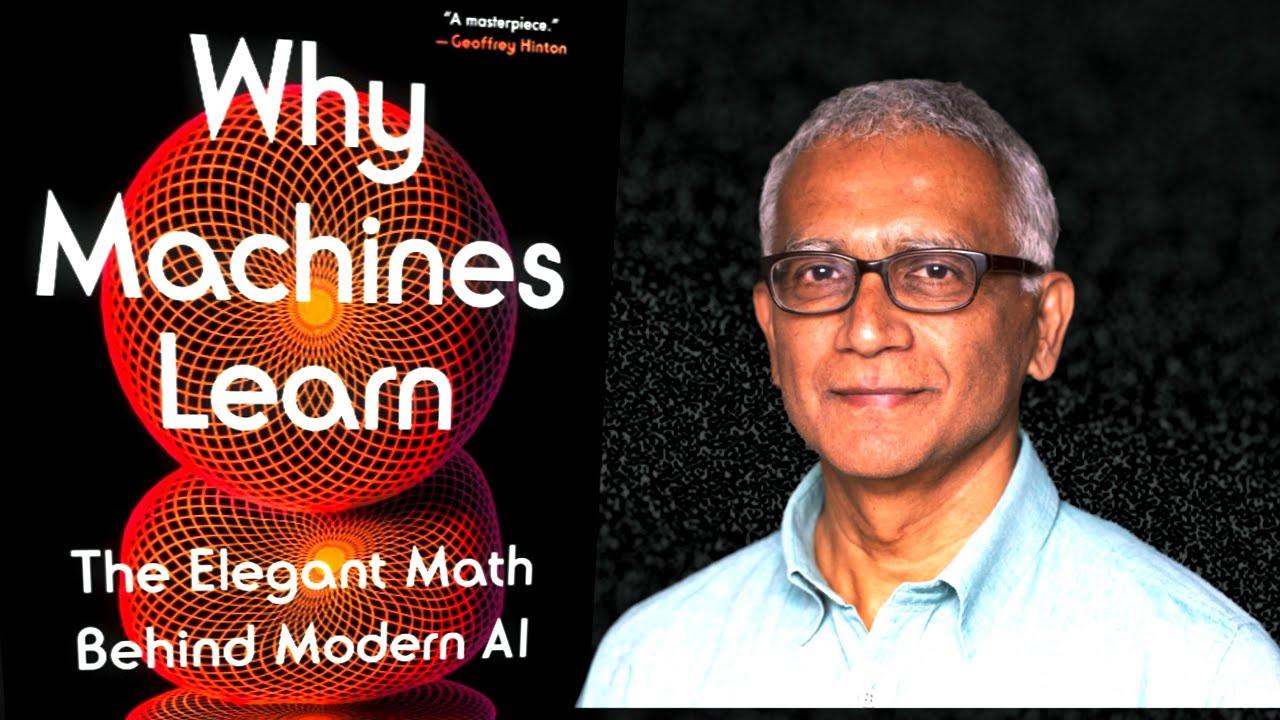

The video features an interview with Anil Ananthaswamy, author of “Why Machines Learn,” in which he discusses the mathematical foundations of machine learning and why a broader audience should understand them. Ananthaswamy emphasizes that humans learn patterns through experience without needing explicit labels, and suggests that AI systems can likewise learn effectively from data that no human has labeled. He advocates for more people, including journalists and policymakers, to engage with the mathematics behind AI to better understand its capabilities and limitations.

Ananthaswamy shares his background as a science journalist and his journey into the world of machine learning. He highlights the elegance of the mathematics involved, particularly in results like the perceptron convergence theorem and in techniques like kernel methods. He believes that understanding these mathematical principles is crucial for grasping how machines learn and for recognizing the differences between human reasoning and machine pattern matching. The conversation also touches on the historical context of machine learning, noting that the field has evolved significantly since the early days of neural networks.
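
To make the perceptron convergence theorem concrete: it guarantees that, when the two classes can be separated by a line (or hyperplane), the perceptron learning rule makes only a finite number of mistakes before it finds a separating boundary. The following is a minimal sketch of that rule, not code from the interview or the book; the function names and the four toy data points are assumptions chosen for illustration.

```python
def train_perceptron(points, labels, max_epochs=100):
    """Perceptron learning rule. Labels must be +1 or -1."""
    w = [0.0] * len(points[0])  # one weight per input feature
    b = 0.0                     # bias term
    for epoch in range(max_epochs):
        mistakes = 0
        for x, y in zip(points, labels):
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * activation <= 0:  # misclassified (or on the boundary)
                # Nudge the boundary toward classifying x correctly.
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
                mistakes += 1
        if mistakes == 0:  # a full pass with no errors: converged
            return w, b, epoch + 1
    return w, b, max_epochs


def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


# Hypothetical, linearly separable toy data.
points = [(2.0, 1.0), (3.0, 2.0), (-1.0, -2.0), (-2.0, -1.0)]
labels = [1, 1, -1, -1]

w, b, epochs = train_perceptron(points, labels)
print("weights:", w, "bias:", b, "epochs:", epochs)
print([predict(w, b, x) for x in points])
```

If the data were not linearly separable, the loop would simply stop at `max_epochs` without reaching an error-free pass; the theorem's guarantee applies only to the separable case.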

The discussion delves into the essential mathematical disciplines for understanding machine learning, including calculus, linear algebra, probability, and optimization theory. Ananthaswamy points out that while modern AI advancements often appear empirical, a solid grasp of the underlying mathematics is necessary to comprehend the strengths and limitations of these systems. He also discusses the bias-variance trade-off, emphasizing the importance of balancing model complexity against generalization: a model that is too simple underfits, while one that is too complex overfits the training data.
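
The bias-variance trade-off can be estimated numerically. For squared error, the expected error at a point splits into squared bias, variance, and irreducible noise. The sketch below is an illustration under stated assumptions rather than anything shown in the interview: the sine target function, the noise level, and the two deliberately extreme models (a constant predictor and a 1-nearest-neighbour predictor) are all hypothetical choices.

```python
import math
import random


def true_fn(x):
    # Assumed ground-truth function for the illustration.
    return math.sin(2 * math.pi * x)


def sample_training_set(rng, n=20, noise=0.3):
    xs = [i / n for i in range(n)]
    ys = [true_fn(x) + rng.gauss(0.0, noise) for x in xs]
    return xs, ys


def fit_mean(xs, ys):
    """Very simple model: always predict the training mean."""
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y


def fit_nearest_neighbour(xs, ys):
    """Very flexible model: copy the label of the closest training point."""
    def predict(x):
        nearest = min(range(len(xs)), key=lambda i: abs(xs[i] - x))
        return ys[nearest]
    return predict


def bias_variance(fit, trials=200, seed=0):
    """Estimate average squared bias and variance over fresh training sets."""
    rng = random.Random(seed)
    test_xs = [(i + 0.5) / 20 for i in range(20)]
    preds = []
    for _ in range(trials):
        model = fit(*sample_training_set(rng))
        preds.append([model(x) for x in test_xs])
    bias_sq = variance = 0.0
    for j, x in enumerate(test_xs):
        column = [p[j] for p in preds]
        avg = sum(column) / trials
        bias_sq += (avg - true_fn(x)) ** 2
        variance += sum((v - avg) ** 2 for v in column) / trials
    return bias_sq / len(test_xs), variance / len(test_xs)


mean_bias, mean_var = bias_variance(fit_mean)
nn_bias, nn_var = bias_variance(fit_nearest_neighbour)
print(f"constant model:    bias^2={mean_bias:.3f}  variance={mean_var:.3f}")
print(f"nearest neighbour: bias^2={nn_bias:.3f}  variance={nn_var:.3f}")
```

The constant model barely changes between training sets (low variance) but cannot follow the curve (high bias); the nearest-neighbour model tracks the curve on average but also memorizes the noise in each training set (high variance). Practical models sit somewhere between these extremes.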

Ananthaswamy addresses the concept of self-supervised learning, exemplified by models like ChatGPT, in which a model learns from data that no human has labeled. He argues that this approach is crucial for scaling AI systems, as it allows training on vast datasets without the need for costly human annotation. He believes that the future of AI will lean more towards unsupervised learning, reflecting how humans learn from their environment without explicit guidance.
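
The core idea of self-supervised learning is that the training targets come from the data itself. For text, each word can serve as the "label" for the words before it, so no annotator is needed. The toy sketch below illustrates this with a simple bigram counter; it is an assumption-laden illustration only (the corpus and function names are hypothetical), and real language models use neural networks and subword tokens rather than word counts.

```python
from collections import Counter, defaultdict


def make_training_pairs(text):
    """Turn raw text into (context, target) pairs with no human labels."""
    tokens = text.split()
    return list(zip(tokens[:-1], tokens[1:]))


def train_bigram_model(pairs):
    """Count how often each target word follows each context word."""
    counts = defaultdict(Counter)
    for context, target in pairs:
        counts[context][target] += 1
    return counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in model:
        return None
    return model[word].most_common(1)[0][0]


# Hypothetical toy corpus.
corpus = "the cat sat on the mat and the cat slept"
pairs = make_training_pairs(corpus)
model = train_bigram_model(pairs)

print(pairs[:3])
print(predict_next(model, "the"))
```

Because the pairs are generated mechanically from the raw text, the same procedure scales to arbitrarily large corpora, which is the scaling advantage described above.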

Finally, the interview touches on the philosophical implications of AI, including questions of agency, reasoning, and intelligence. Ananthaswamy asserts that while AI systems can exhibit behaviors that seem intelligent, they fundamentally operate as sophisticated pattern matchers rather than true reasoning entities. He concludes by highlighting the need for a deeper understanding of the mathematical foundations of AI to navigate its societal impacts, including biases and ethical considerations, as well as the potential for future advancements in the field.