A recent study by LAION revealed that even advanced AI language models, such as OpenAI’s GPT-4 and Google’s Gemini, struggle with a basic logic question known as the “Alice in Wonderland” problem. Despite their sophistication, these models often fail to answer correctly, showing flawed reasoning and overconfidence in their incorrect responses. This inconsistency highlights potential flaws in the current benchmarks used to evaluate AI reasoning abilities. The research, which awaits peer review, questions the reliability of these AI systems and suggests a need for reassessment of how AI models are tested and marketed.

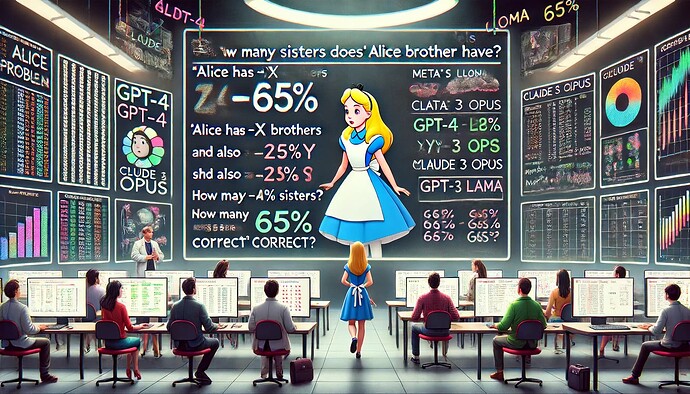

A recent study conducted by researchers at the AI research nonprofit LAION has uncovered significant limitations in even the most advanced AI language models, such as OpenAI’s GPT-4 and Google’s Gemini. The study focused on a deceptively simple logic question known as the “Alice in Wonderland” problem, which asks: “Alice has X brothers and she also has Y sisters. How many sisters does Alice’s brother have?” Despite its straightforward nature, this question stumped several leading AI models, including GPT-3, GPT-4, Claude 3 Opus, Meta’s Llama, and Google’s Gemini. The researchers found that only the new GPT-4o model managed to answer the question correctly nearly 65% of the time, with the next best performer, Claude 3 Opus, achieving only 43%.

The Alice in Wonderland problem requires basic reasoning skills that are easily solved by humans, yet many AI models struggled to provide correct answers, often resorting to bizarre and illogical explanations. For instance, when Meta’s Llama 3 was presented with the simple version of the problem, it incorrectly concluded that each of Alice’s brothers had just one sister, despite the correct answer being two. Similarly, Claude 3 Opus, when given a more complex version of the problem, confidently asserted that Alice’s brother had four sisters, ignoring the fundamental fact that Alice’s brother shares the same sisters as Alice. These responses not only showcased the models’ inability to solve a simple logic problem but also highlighted their tendency to be overconfident in their incorrect answers, often backing them up with nonsensical justifications.

The study’s findings also raise concerns about the benchmarks currently used to evaluate AI reasoning and problem-solving capabilities. While the AI models performed well on standardized tests like the Multi-task Language Understanding (MMLU), which are designed to measure a model’s ability to solve various problems, their performance on the Alice in Wonderland problem was alarmingly poor. For instance, GPT-4o scored 88% on the MMLU, yet struggled with basic logical reasoning in the Alice problem. This discrepancy suggests that existing benchmarks might not adequately reflect the models’ true reasoning capabilities, leading the researchers to call for a reevaluation of these testing methodologies. The study underscores the need to develop more comprehensive tests that can better assess AI models’ fundamental reasoning skills.

The implications of this research extend beyond academic curiosity, raising questions about the reliability and transparency of AI systems in practical applications. The overconfidence and erroneous reasoning displayed by these models could have serious consequences, particularly in fields requiring precise and logical decision-making. As the AI industry continues to advance, the study highlights the critical need for more rigorous testing standards that ensure AI systems are not only proficient in complex tasks but also capable of basic logical reasoning. This could help mitigate risks associated with deploying AI technologies that, despite appearing sophisticated, may still have significant gaps in fundamental reasoning abilities. The findings prompt a broader discussion on the future direction of AI research and the development of robust, reliable AI systems.