The video explores the limitations of current AI computing hardware, particularly traditional GPUs, which it argues are energy-intensive and inefficient for large-scale models, in part because they handle sparse computations poorly. It advocates adopting neuromorphic chips that mimic the brain’s architecture, which promise significantly better efficiency and lower energy consumption for future AI applications.

The video discusses the evolution of artificial intelligence (AI) and the limitations of current computing technologies, focusing on the inefficiencies of traditional chips such as GPUs. It begins by explaining that AI models, such as ChatGPT and image generators, are built on neural networks loosely inspired by the structure of the human brain. These networks consist of layers of interconnected nodes (artificial neurons), each of which combines its inputs using learned weights. As models grow in size and complexity, the computational demands of both training and inference rise steeply, exposing inefficiencies in the hardware that runs them.
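To make the idea of "interconnected nodes" concrete, here is a minimal sketch of one fully connected (dense) layer, the basic building block of many neural networks. It is an illustration under simplifying assumptions, not how any particular model is implemented: the weights, biases, and the helper names `dense_layer` and `multiply_accumulates` are hypothetical. The MAC count shows why compute grows so quickly with model size: doubling a layer's width quadruples its work.

```python
# Minimal sketch of a dense layer: each output node computes a weighted sum of
# ALL inputs plus a bias, then applies a ReLU activation.
# Weights/biases are arbitrary illustrative values, not from any real model.

def relu(x: float) -> float:
    return max(0.0, x)

def dense_layer(inputs, weights, biases):
    """weights[j][i] is the connection from input i to output node j."""
    outputs = []
    for w_row, b in zip(weights, biases):
        total = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(relu(total))
    return outputs

def multiply_accumulates(n_inputs: int, n_outputs: int) -> int:
    # Every output node touches every input once, so work is n_inputs * n_outputs.
    return n_inputs * n_outputs

x = [1.0, 2.0, 3.0]
W = [[0.5, -1.0, 0.25],
     [1.0, 1.0, -1.0]]
b = [0.0, 0.5]

print(dense_layer(x, W, b))               # [0.0, 0.5]
print(multiply_accumulates(4096, 4096))   # 16777216
print(multiply_accumulates(8192, 8192))   # 67108864 (4x the work for 2x width)
```

Real frameworks run these sums as large matrix multiplications on GPUs, which is exactly the workload whose cost the video is concerned with.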

The video highlights the challenges posed by current GPU technology, which, while effective for parallel processing, is energy-intensive and constrained by memory capacity and bandwidth. For instance, training large models like GPT-4 requires immense power, which the video compares to the energy consumption of thousands of homes. In addition, because the CPU and GPU have separate memories, data must be copied between them, which adds latency and hinders performance. The video emphasizes that as AI models scale up, existing hardware becomes unsustainable because of its high energy consumption and environmental cost.

Another critical point is the inefficiency of GPUs in handling sparse computations, in which many of the values involved (for example, weights or activations) are zero or irrelevant; such computations are common in AI workloads. The human brain efficiently ignores irrelevant information, but GPUs are optimized for dense, regular arithmetic and typically process all of the data, zeros included, which wastes calculations. This inefficiency is particularly problematic for real-time applications such as autonomous systems. The video argues that building more efficient AI systems requires a fundamental change in computing hardware, away from traditional architectures.
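The cost of ignoring sparsity can be illustrated by counting multiplications. The sketch below compares a dense matrix-vector product, which multiplies every entry including zeros, with a sparse version that stores and visits only the nonzero entries. It is a simplified illustration under assumed inputs (the matrix `M` and all function names are hypothetical); real GPU kernels and sparse formats such as CSR are far more involved.

```python
# Dense vs sparse matrix-vector product, counting multiplications performed.
# Illustrative sketch only; not how production GPU or sparse libraries work.

def dense_matvec(matrix, vec):
    """Multiply every entry, zeros included."""
    mults = 0
    out = []
    for row in matrix:
        acc = 0.0
        for a, x in zip(row, vec):
            acc += a * x
            mults += 1
        out.append(acc)
    return out, mults

def to_sparse(matrix):
    """Keep only (column, value) pairs for the nonzero entries of each row."""
    return [[(j, a) for j, a in enumerate(row) if a != 0.0] for row in matrix]

def sparse_matvec(sparse_rows, vec):
    """Visit only stored nonzeros; empty rows cost nothing."""
    mults = 0
    out = []
    for row in sparse_rows:
        acc = 0.0
        for j, a in row:
            acc += a * vec[j]
            mults += 1
        out.append(acc)
    return out, mults

M = [[0.0, 2.0, 0.0, 0.0],
     [0.0, 0.0, 0.0, 0.0],
     [1.0, 0.0, 0.0, 3.0],
     [0.0, 0.0, 0.0, 0.0]]
v = [1.0, 2.0, 3.0, 4.0]

dense_out, dense_mults = dense_matvec(M, v)
sparse_out, sparse_mults = sparse_matvec(to_sparse(M), v)
print(dense_out, dense_mults)    # [4.0, 0.0, 13.0, 0.0] 16
print(sparse_out, sparse_mults)  # [4.0, 0.0, 13.0, 0.0] 3
```

Both versions give the same result, but the dense one does more than five times as many multiplications here. The trade-off is that sparse formats add indexing overhead and irregular memory access, which is part of why dense-optimized hardware does not simply skip zeros.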

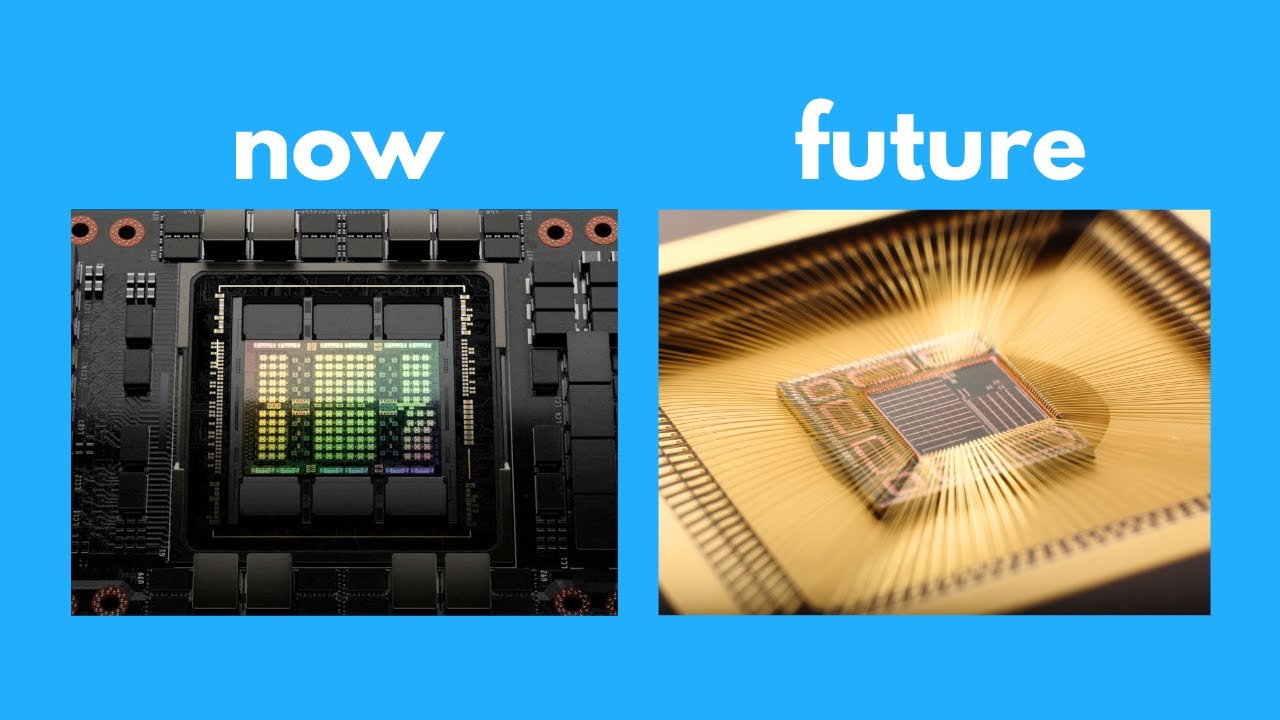

The discussion then shifts to neuromorphic chips, which are designed to mimic the brain’s structure and function more closely. These chips integrate processing and memory, which reduces data movement and lowers power consumption. Some neuromorphic designs use devices such as memristors, along with emerging (including quantum) materials, to build artificial neurons and synapses that process and store information in the same place, increasing parallelism and energy efficiency. The video outlines several examples of neuromorphic chips, such as IBM’s TrueNorth and Intel’s Loihi, which demonstrate the potential for more sustainable AI computing.
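Chips such as TrueNorth and Loihi use spiking neurons, which communicate through discrete events rather than continuous values. A common simplified model of such a neuron is the leaky integrate-and-fire (LIF) neuron, sketched below. This is a generic textbook-style model, not the exact neuron implemented on either chip, and the parameter values (`threshold`, `leak`) and input sequence are arbitrary assumptions. It shows two ideas from the video: the neuron's state (its membrane potential) is stored right where it is computed, and it produces output only occasionally, so downstream work happens only when a spike occurs.

```python
# Minimal leaky integrate-and-fire (LIF) neuron sketch.
# The membrane potential is local state kept alongside the computation; the
# neuron emits a spike only when that potential crosses a threshold.
# Parameter values are illustrative assumptions, not taken from any real chip.

from dataclasses import dataclass

@dataclass
class LIFNeuron:
    threshold: float = 1.0
    leak: float = 0.9       # fraction of the potential retained each time step
    potential: float = 0.0  # stored state ("memory") of the neuron

    def step(self, input_current: float) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False

neuron = LIFNeuron()
inputs = [0.0, 0.5, 0.0, 0.6, 0.0, 0.0, 1.2, 0.0]
spikes = [neuron.step(i) for i in inputs]
print(spikes)       # [False, False, False, True, False, False, True, False]
print(sum(spikes))  # 2 spikes in 8 time steps
```

In an event-driven system, only those two spikes would trigger work in connected neurons, whereas a dense approach would compute every connection at every step.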

In conclusion, the video posits that neuromorphic chips could be the future of AI computing, addressing the limitations of current technologies. By adopting designs that resemble the brain’s architecture, these chips promise to improve efficiency and reduce energy consumption significantly. The video encourages viewers to consider the implications of these advancements and invites discussion on the potential of neuromorphic computing to revolutionize AI.