The video explains TiDAR, a method that accelerates large language model inference by using otherwise idle GPU compute to draft several tokens in parallel via diffusion and then verify them with the autoregressive model, so text generation gets faster without sacrificing quality. Unlike earlier approaches, TiDAR is reported to deliver roughly 4 to 6 times higher throughput while matching the output quality of standard autoregressive models.

The video analyzes the TiDAR paper, “Think in Diffusion, Talk in Autoregression,” by NVIDIA researchers. The core idea is that during large language model (LLM) inference, the GPU's compute units are often underutilized because token-by-token decoding is limited by memory bandwidth rather than by arithmetic. TiDAR proposes a way to exploit this unused capacity to accelerate inference without sacrificing output quality, unlike previous approaches such as speculative decoding or block diffusion, which typically involve trade-offs in accuracy or efficiency. The method is described as a “free lunch” because it uses computational resources that would otherwise sit idle, so the only extra cost is electricity.
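
To make the "idle capacity" argument concrete, here is a back-of-envelope sketch of the time for one decoding pass. It is not from the paper: the hardware figures in the usage note are hypothetical placeholders, the estimate of 2 FLOPs per parameter per token is a common approximation, and the model ignores KV cache reads and attention cost.

```python
def step_time_s(tokens_per_step: int, n_params: float, bytes_per_param: float,
                mem_bandwidth: float, peak_flops: float) -> float:
    """Rough time for one forward pass that processes `tokens_per_step` tokens.

    Assumptions (not from the source): the weights are read from memory once
    per pass, each token costs about 2 FLOPs per parameter, and memory traffic
    overlaps perfectly with compute, so the slower of the two sets the time.
    """
    memory_time = n_params * bytes_per_param / mem_bandwidth
    compute_time = 2.0 * n_params * tokens_per_step / peak_flops
    return max(memory_time, compute_time)


def tokens_before_compute_bound(bytes_per_param: float, mem_bandwidth: float,
                                peak_flops: float) -> float:
    """Number of tokens per pass at which compute time catches up with memory time."""
    return bytes_per_param * peak_flops / (2.0 * mem_bandwidth)
```

With hypothetical values such as 8e9 parameters at 2 bytes each, 2e12 bytes/s of memory bandwidth, and 4e14 FLOP/s of peak compute, a pass over 16 tokens takes the same estimated time as a pass over 1 token, because both are bounded by reading the weights. That gap is the slack the summary refers to.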

The paper contrasts two main types of language models: autoregressive models (like GPT), which generate text one token at a time conditioned on the prefix, and diffusion language models, which can generate multiple tokens in parallel. When several tokens are produced in the same step, a diffusion model samples each position from its own marginal distribution rather than from the joint distribution over those tokens, which lowers output quality. Autoregressive models are slower but produce higher-quality, more coherent text, while diffusion models are faster but less accurate because tokens generated together do not condition on one another. The challenge is to combine the strengths of both approaches: the quality of autoregressive models with the speed of diffusion models.
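
The difference between marginals and the joint can be shown with a toy example. The two-sequence distribution below is invented for illustration and does not come from the paper or the video; the sketch computes what happens when every position is sampled independently from its marginal.

```python
from collections import defaultdict
from itertools import product

# Toy "true" distribution over two-token sequences (made up for illustration).
JOINT = {("New", "York"): 0.5, ("Los", "Angeles"): 0.5}


def marginals(joint):
    """Per-position marginal distributions of a joint over fixed-length sequences."""
    length = len(next(iter(joint)))
    out = [defaultdict(float) for _ in range(length)]
    for seq, prob in joint.items():
        for pos, tok in enumerate(seq):
            out[pos][tok] += prob
    return [dict(m) for m in out]


def parallel_distribution(joint):
    """Sequence distribution obtained by sampling each position independently
    from its marginal, i.e. with no interaction between the positions."""
    margs = marginals(joint)
    result = {}
    for seq in product(*(m.keys() for m in margs)):
        prob = 1.0
        for tok, m in zip(seq, margs):
            prob *= m[tok]
        result[seq] = prob
    return result
```

Here `parallel_distribution(JOINT)` puts probability 0.25 on `("New", "Angeles")`, a sequence that has zero probability under `JOINT`. An autoregressive model avoids this by choosing the second token conditioned on the first.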

Speculative decoding is introduced as a related technique, where a smaller, faster model proposes future tokens and the larger model checks these proposals in parallel. However, this approach is only effective if the smaller model’s predictions are accurate; otherwise most proposals are rejected and the computational gains are negated. TiDAR improves on this by using the extra GPU capacity to generate “drafts” of future tokens using diffusion, which are then checked by the autoregressive model. This process allows multiple possible futures to be computed in parallel, increasing throughput without compromising output quality.
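
The dependence on draft accuracy can be sketched with the accept/resample rule from the standard speculative sampling literature. This is the generic rule for a single token position, not necessarily the exact procedure TiDAR uses, and the function names are illustrative. A drafted token `x` is kept with probability `min(1, p(x) / q(x))`, where `p` is the target distribution and `q` the draft distribution; on rejection, a token is resampled from the normalized positive part of `p - q`.

```python
def acceptance_rate(p, q):
    """Probability that a token drafted from q is accepted.

    Equals sum_x q(x) * min(1, p(x) / q(x)) = sum_x min(p(x), q(x)).
    Assumes p and q are dicts over the same vocabulary.
    """
    return sum(min(p[x], q[x]) for x in p)


def residual(p, q):
    """Distribution to resample from after a rejection: normalized max(0, p - q)."""
    raw = {x: max(0.0, p[x] - q[x]) for x in p}
    total = sum(raw.values())
    if total == 0.0:
        # p == q: nothing is ever rejected, so this branch is only a safe fallback.
        return dict(p)
    return {x: v / total for x, v in raw.items()}


def output_distribution(p, q):
    """Exact distribution of the emitted token under accept-or-resample."""
    accepted = {x: min(p[x], q[x]) for x in p}
    reject_mass = 1.0 - sum(accepted.values())
    res = residual(p, q)
    return {x: accepted[x] + reject_mass * res[x] for x in p}
```

For a target `p = {"a": 0.6, "b": 0.3, "c": 0.1}`, a close draft such as `{"a": 0.5, "b": 0.4, "c": 0.1}` is accepted about 90% of the time, while a poor draft such as `{"a": 0.1, "b": 0.1, "c": 0.8}` is accepted only about 30% of the time. In both cases `output_distribution` reproduces `p`, which is why verification preserves quality but a weak draft wastes the extra work.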

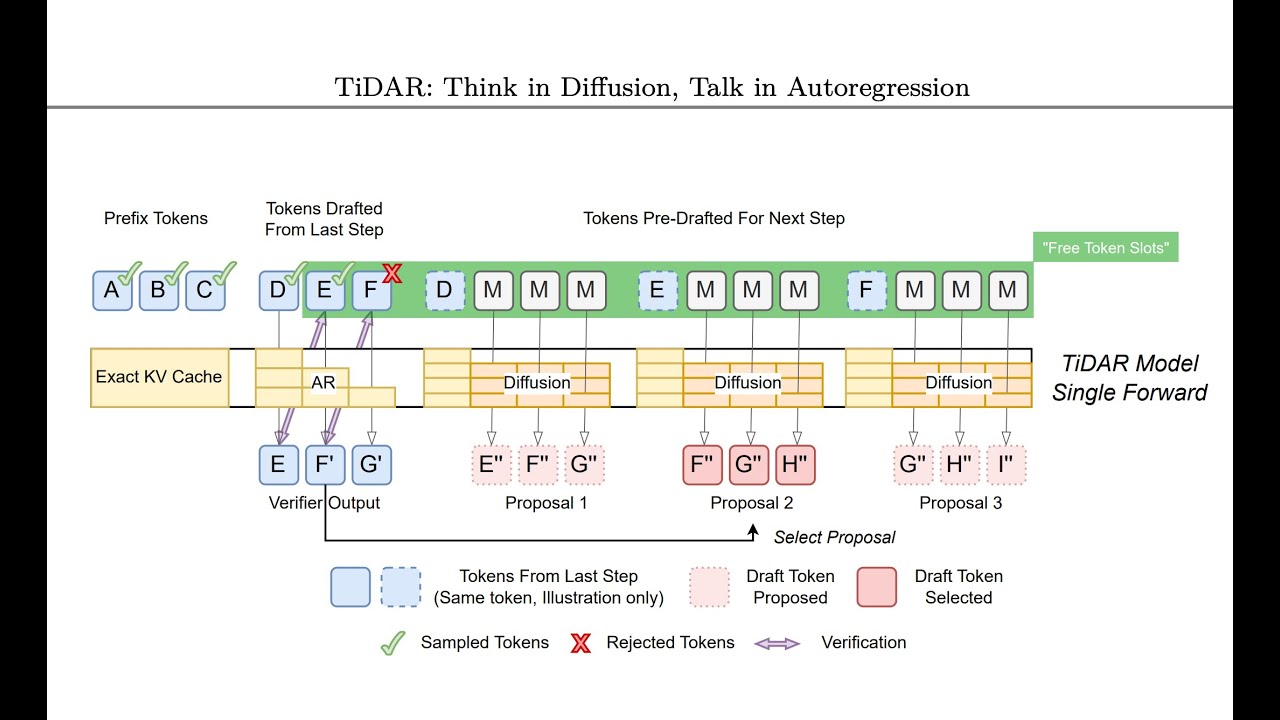

The TiDAR architecture partitions tokens into three sections at each generation step: prefix tokens, tokens proposed in the previous step, and tokens pre-drafted for the next step. It uses rejection sampling on the proposed tokens to ensure that the final output matches what pure autoregressive sampling would have produced. Because it is not known in advance how many proposed tokens will be accepted, TiDAR pre-drafts the next proposal for every possible outcome of the rejection sampling, so the next step always has a valid proposal ready. This is achieved through carefully constructed attention masks and reuse of the KV cache for the prefix, allowing all necessary computations to be performed in a single forward pass.
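
One way to picture the three sections is as a single attention mask. The sketch below is a simplified assumption based only on the description above, not the paper's exact layout: prefix and proposed tokens attend causally, and there is one pre-draft block per hypothetical number `k` of kept proposed tokens, which sees the prefix, the first `k` proposed tokens, and its own block bidirectionally. The function names and the block layout are illustrative.

```python
from typing import List


def build_mask(n_prefix: int, n_proposed: int, block: int) -> List[List[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Layout (assumed): [prefix | proposed | pre-draft block for k=0 | ... | k=n_proposed]
    """
    n_causal = n_prefix + n_proposed
    n_outcomes = n_proposed + 1          # k = 0 .. n_proposed kept tokens
    total = n_causal + n_outcomes * block
    mask = [[False] * total for _ in range(total)]

    # Prefix and proposed tokens: ordinary causal attention.
    for i in range(n_causal):
        for j in range(i + 1):
            mask[i][j] = True

    # Pre-draft blocks: each sees the context it is conditioned on, plus itself.
    for k in range(n_outcomes):
        start = n_causal + k * block
        visible_context = n_prefix + k   # prefix plus the first k proposed tokens
        for i in range(start, start + block):
            for j in range(visible_context):
                mask[i][j] = True
            for j in range(start, start + block):
                mask[i][j] = True        # bidirectional within the block
    return mask


def render(mask: List[List[bool]]) -> str:
    """Text view of the mask: 'x' where attention is allowed, '.' elsewhere."""
    return "\n".join("".join("x" if v else "." for v in row) for row in mask)
```

Calling `print(render(build_mask(2, 2, 2)))` shows a causal triangle for the first four positions followed by three pre-draft blocks that never attend to one another, so drafts for different outcomes can share one forward pass without interfering.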

Experimentally, TiDAR demonstrates significant speedups, generating roughly 4 to 6 times more tokens per second while matching the quality of standard autoregressive models. It outperforms both speculative decoding and pure diffusion models in terms of efficiency and output quality. The only minor trade-off is that the model must be trained with an auxiliary diffusion loss in addition to the standard next-token prediction loss, but this does not appear to harm performance. Overall, TiDAR represents a clever and practical advance in LLM inference, making better use of available hardware resources to deliver faster, high-quality text generation.
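
The training trade-off can be sketched as a weighted sum of two cross-entropy terms. This is a minimal illustration of adding an auxiliary loss to the next-token loss, not the paper's exact objective: the `diffusion_weight` parameter, the function names, and the way the two terms are combined are all assumptions.

```python
import math
from typing import Sequence


def cross_entropy(probs: Sequence[Sequence[float]], targets: Sequence[int]) -> float:
    """Mean negative log-likelihood of the target ids.

    probs[i] is the predicted distribution at position i, targets[i] the true id.
    """
    return -sum(math.log(p[t]) for p, t in zip(probs, targets)) / len(targets)


def combined_loss(ar_probs, ar_targets, diff_probs, diff_targets,
                  diffusion_weight: float = 1.0) -> float:
    """Next-token loss plus a weighted auxiliary diffusion loss.

    ar_probs / ar_targets: predictions and labels for next-token prediction.
    diff_probs / diff_targets: predictions and labels at masked positions.
    diffusion_weight is a hypothetical hyperparameter, not a value from the paper.
    """
    ar_loss = cross_entropy(ar_probs, ar_targets)
    diffusion_loss = cross_entropy(diff_probs, diff_targets)
    return ar_loss + diffusion_weight * diffusion_loss
```

Setting `diffusion_weight` to 0 recovers plain next-token training, which makes it easy to see the auxiliary term as an addition to the usual objective rather than a replacement for it.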