The video analyzes the “Titans: Learning to Memorize at Test Time” paper, highlighting its approach of using a neural network as a dynamic memory that is updated by gradient-based learning during inference, as a way around the fixed context window of transformers. While skeptical of some of the paper’s novelty claims, the presenter acknowledges that learning to memorize at test time is a promising direction for long-sequence modeling.

The video analyzes the paper “Titans: Learning to Memorize at Test Time” by Google Research, which tackles the challenge of extending the effective context window of transformer models. Standard transformers attend over a fixed-size context window, which makes very long sequences such as lengthy texts or videos hard to process. The paper proposes an architecture that lets the model memorize information at test time, using a memory mechanism that can recall information from parts of the sequence that have already fallen outside the immediate context window.

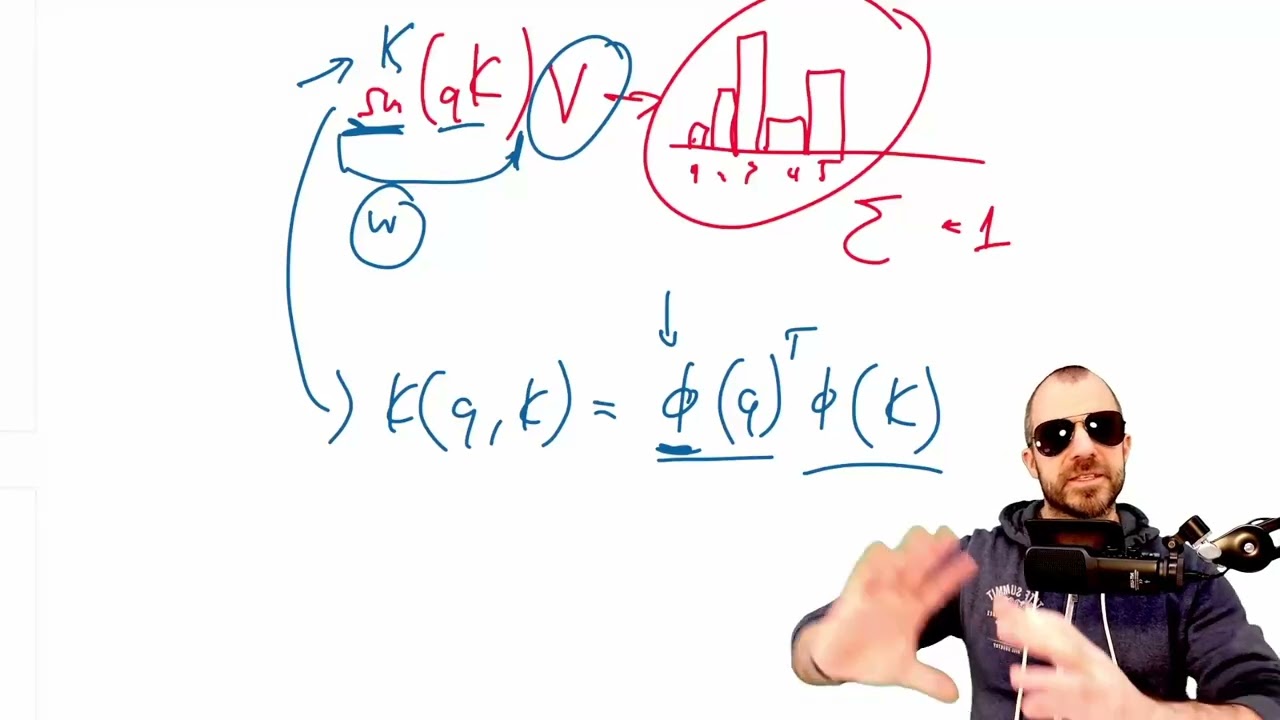

The presenter explains that earlier approaches to long contexts have tried to carry past information forward in compact form: Transformer-XL reuses cached hidden states from previous segments, while linear transformers compress the past into a vector- or matrix-valued state. The problem is that squeezing an arbitrarily long history into a fixed-size memory inevitably loses information. Linear transformers, which replace softmax attention with a kernel feature map so that cost grows linearly rather than quadratically with sequence length, tend to underperform standard attention for exactly this reason: they effectively behave like recurrent networks that compress all past tokens into a single fixed-size state, which is insufficient for very long contexts.
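The fixed-size-state argument can be made concrete with a minimal NumPy sketch of causal linear attention in the style of Katharopoulos et al. (2020), assuming their elu(x) + 1 feature map. This is the kind of baseline the paragraph criticizes, not code from the Titans paper, and the function names are our own. The same outputs are computed twice: once as a recurrence over a `d × d` state matrix `S`, and once in masked attention form.

```python
import numpy as np


def feature_map(x):
    # elu(x) + 1: keeps features positive (the choice in Katharopoulos et al., 2020).
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))


def linear_attention_recurrent(Q, K, V):
    """Causal linear attention run as an RNN over a fixed-size matrix state."""
    d_k, d_v = K.shape[1], V.shape[1]
    S = np.zeros((d_k, d_v))  # matrix-valued memory; its size ignores sequence length
    z = np.zeros(d_k)         # running sum of keys, used to normalize reads
    out = np.zeros((Q.shape[0], d_v))
    for t in range(Q.shape[0]):
        phi_k = feature_map(K[t])
        S += np.outer(phi_k, V[t])  # write: add the key-value outer product
        z += phi_k
        phi_q = feature_map(Q[t])
        out[t] = (phi_q @ S) / (phi_q @ z)  # read: weighted average of past values
    return out, S


def linear_attention_parallel(Q, K, V):
    """The same outputs in masked 'attention' form, for comparison."""
    A = np.tril(feature_map(Q) @ feature_map(K).T)  # causal, unnormalized weights
    return (A @ V) / A.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
T, d = 1000, 8
Q, K, V = (rng.normal(size=(T, d)) for _ in range(3))
out_rnn, S = linear_attention_recurrent(Q, K, V)
print(np.allclose(out_rnn, linear_attention_parallel(Q, K, V)))  # True
print(S.shape)  # (8, 8), however long the sequence is
```

However long the sequence grows, everything the model can later read back has to fit into `S`, which is the information bottleneck described above.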

The key innovation in the Titans paper is the idea of using a neural network itself as the memory, rather than a fixed vector or matrix. This neural network memory is updated continuously during test time through gradient-based learning, allowing it to adapt and memorize relevant information dynamically as the sequence is processed. The memory network takes queries as input and outputs relevant stored information, enabling the model to retrieve data from distant past tokens that are no longer in the immediate context window. This approach reframes memory as a learnable function rather than a static data structure.
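As a rough illustration of a neural network acting as the memory, here is a toy sketch under stated assumptions: `MLPMemory` is a hypothetical two-layer tanh network, writing is a single plain gradient step on the squared error ||M(k) - v||², and random unit-norm vectors stand in for the keys and values the real model would produce from learned projections. It deliberately omits the momentum and forgetting terms of the paper's full update rule.

```python
import numpy as np


class MLPMemory:
    """Hypothetical two-layer memory M(x) = W2 @ tanh(W1 @ x).

    The weights *are* the memory: writing is one gradient step on
    ||M(k) - v||^2, reading is an ordinary forward pass on a query.
    """

    def __init__(self, dim, hidden, rng):
        self.W1 = rng.normal(scale=1 / np.sqrt(dim), size=(hidden, dim))
        self.W2 = rng.normal(scale=1 / np.sqrt(hidden), size=(dim, hidden))

    def read(self, q):
        # Retrieval: no weight update, just evaluate the network.
        return self.W2 @ np.tanh(self.W1 @ q)

    def loss(self, k, v):
        err = self.read(k) - v
        return float(err @ err)

    def write(self, k, v, lr=0.02):
        # Forward pass, keeping the hidden activation for backprop.
        h = np.tanh(self.W1 @ k)
        grad_y = 2 * (self.W2 @ h - v)                # d loss / d output
        grad_W2 = np.outer(grad_y, h)
        grad_pre = (self.W2.T @ grad_y) * (1 - h**2)  # back through tanh
        grad_W1 = np.outer(grad_pre, k)
        # Test-time learning: plain gradient descent on the memory's weights.
        self.W2 -= lr * grad_W2
        self.W1 -= lr * grad_W1


rng = np.random.default_rng(0)
dim = 8
mem = MLPMemory(dim, hidden=32, rng=rng)
keys = rng.normal(size=(3, dim))
keys /= np.linalg.norm(keys, axis=1, keepdims=True)  # unit-norm keys
values = rng.normal(size=(3, dim))

before = sum(mem.loss(k, v) for k, v in zip(keys, values))
for _ in range(300):  # a stream in which the same three facts keep recurring
    for k, v in zip(keys, values):
        mem.write(k, v)
after = sum(mem.loss(k, v) for k, v in zip(keys, values))
print(f"retrieval error before: {before:.3f}, after: {after:.3f}")
```

Reading never changes the weights; only `write` does, which is what learning at test time means in this sketch. The repeating three-fact stream is an assumption chosen so the effect is visible in a few lines.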

The memory is trained by minimizing an associative loss: the squared error between the memory’s output for a key and the value paired with it, mirroring the key-value lookup that attention performs. The paper frames this learning as driven by “surprise”: the gradient of the loss is large for tokens the memory cannot yet predict, so the memory updates more strongly on unexpected or novel information, and a decaying running sum of past gradients carries that surprise forward. Although this is presented as a novel, human-inspired concept, the presenter points out that it essentially corresponds to standard gradient descent with momentum, and the framing may be more marketing than a fundamentally new insight.
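The “surprise” framing can be written out explicitly. As we read the paper, with ℓ(M; x_t) = ||M(k_t) - v_t||², the update is `S_t = η_t·S_{t-1} - θ_t·∇ℓ(M_{t-1}; x_t)` followed by `M_t = (1 - α_t)·M_{t-1} + S_t`, where the gradient is the “momentary surprise”, `S_t` the “past surprise”, and `α_t` a forgetting gate. The sketch below makes two simplifying assumptions of our own: the gates are constants rather than input-dependent, and the memory is a single matrix so the gradient has a closed form. The function names are hypothetical. It checks both halves of the argument: a novel key-value pair produces a larger gradient, and with α = 0 the rule coincides with textbook heavy-ball momentum.

```python
import numpy as np


def grad_assoc_loss(M, k, v):
    """Gradient of the associative loss ||M @ k - v||^2 w.r.t. a linear memory M."""
    return 2 * np.outer(M @ k - v, k)


def surprise_update(M, S, k, v, eta=0.9, theta=0.1, alpha=0.01):
    """One Titans-style write with constant gates (the paper makes them input-dependent)."""
    g = grad_assoc_loss(M, k, v)  # "momentary surprise"
    S = eta * S - theta * g       # "past surprise": a decaying sum of gradients
    M = (1 - alpha) * M + S       # forgetting gate, similar in effect to weight decay
    return M, S, np.linalg.norm(g)


def heavy_ball_step(w, buf, grad, lr, mu):
    """Textbook gradient descent with (heavy-ball) momentum, for comparison."""
    buf = mu * buf - lr * grad
    return w + buf, buf


rng = np.random.default_rng(0)
d = 4
k_old, k_new = np.eye(d)[0], np.eye(d)[1]  # two orthogonal keys
v_old, v_new = rng.normal(size=d), rng.normal(size=d)

# Let the memory see the "old" fact many times.
M, S = np.zeros((d, d)), np.zeros((d, d))
for _ in range(300):
    M, S, _ = surprise_update(M, S, k_old, v_old)

surprise_old = np.linalg.norm(grad_assoc_loss(M, k_old, v_old))
surprise_new = np.linalg.norm(grad_assoc_loss(M, k_new, v_new))
print(surprise_old < surprise_new)  # True: the unseen fact is far more surprising

# With alpha = 0, the "surprise" rule is exactly heavy-ball momentum.
g = grad_assoc_loss(M, k_new, v_new)
M_a, S_a, _ = surprise_update(M, S, k_new, v_new, alpha=0.0)
M_b, S_b = heavy_ball_step(M, S, g, lr=0.1, mu=0.9)
print(np.allclose(M_a, M_b) and np.allclose(S_a, S_b))  # True
```

The forgetting gate is the only ingredient beyond plain momentum here, and it acts much like weight decay, which supports the presenter's reading that the novelty lies more in the framing than in the optimizer.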

In conclusion, although the presenter is skeptical of the paper’s novelty claims and marketing language, they acknowledge that the core idea of learning to memorize at test time is a promising and necessary direction for future models. Extending context windows by actively learning and updating memory during inference could be crucial for real-world tasks involving very long sequences. Overall, the paper is a valuable contribution that reports competitive performance, making it an interesting development in long-context modeling.