The video explains the paper “TokenFormer,” which presents a new Transformer architecture that treats model parameters as tokens to allow for flexible scaling without complete retraining. While the approach offers potential advantages, the presenter expresses skepticism about its novelty and raises concerns regarding the experimental results and comparisons with traditional Transformers.

The video discusses the paper “TokenFormer: Rethinking Transformer Scaling with Tokenized Model Parameters,” a collaboration involving researchers from the Max Planck Institute for Informatics, Google, and Peking University. The paper introduces a new architecture called TokenFormer, which modifies the traditional Transformer model by treating model parameters as tokens. This approach aims to make scaling more flexible: parameters can be added to an already trained model without retraining it from scratch. The presenter is skeptical about the novelty of the idea and suggests that it is closer to a rebranding of existing concepts than a fundamentally new technique.

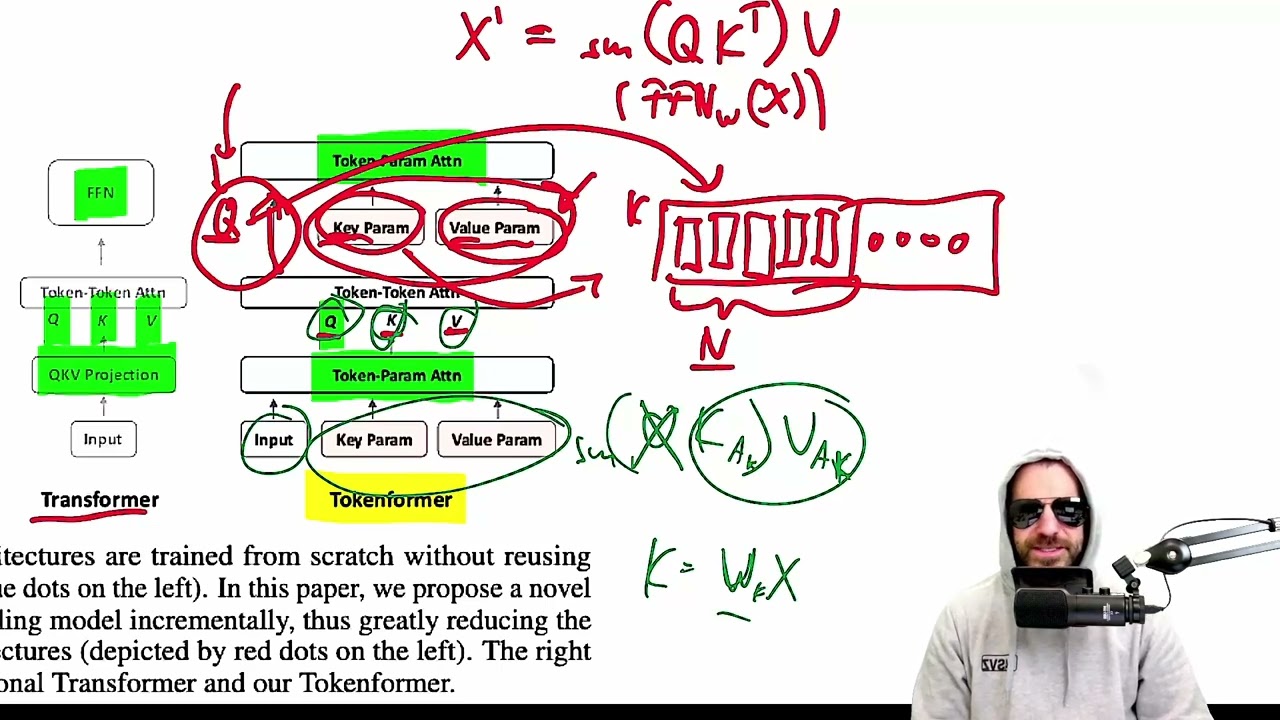

The authors of the paper highlight the challenges associated with scaling Transformer models, particularly the substantial computational costs and the requirement to retrain from scratch when the number of parameters changes. TokenFormer seeks to address these issues by using attention not only between input tokens but also between input tokens and model parameters. This shift makes it possible to add new parameters while keeping the already trained ones and the rest of the architecture unchanged, thus avoiding the need for complete retraining.

In the traditional Transformer architecture, interactions between tokens and model parameters occur through linear projections. TokenFormer replaces these linear interactions with attention mechanisms, enabling the model to process additional parameters as tokens. The presenter explains that this change allows for the integration of an arbitrary number of parameters without altering the input or output dimensions, as long as the new parameters are initialized appropriately (e.g., with zeros). This flexibility is touted as a significant advantage of the TokenFormer architecture.
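The zero-initialization argument can be checked with a small NumPy sketch. It is an illustration under stated assumptions, not the paper's implementation: the names `param_attention`, `softmax_param_attention`, and `grow`, the shapes, the fixed `scale`, and the choice of normalization (scores divided by their L2 norm, then GeLU) are all made up for this example. The normalization is picked so that a zero score produces a zero weight and does not disturb the weights of existing parameter tokens. A plain softmax is included for contrast, since there a zero score still contributes `exp(0) = 1` to the denominator.

```python
import numpy as np

def gelu(x):
    # tanh approximation of GeLU; note that gelu(0) == 0
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def param_attention(x, key_params, value_params, scale=1.0, eps=1e-8):
    """Attend from input tokens x (T, d_in) to parameter tokens.

    key_params: (n, d_in), value_params: (n, d_out). Returns (T, d_out).
    Assumption: L2-normalized scores passed through GeLU, with a fixed scale.
    """
    scores = x @ key_params.T                                # (T, n)
    norm = np.linalg.norm(scores, axis=-1, keepdims=True)    # zeros add nothing here
    weights = gelu(scale * scores / (norm + eps))            # (T, n)
    return weights @ value_params                            # (T, d_out)

def softmax_param_attention(x, key_params, value_params):
    """Same interface, but with a standard softmax over parameter tokens."""
    scores = x @ key_params.T / np.sqrt(x.shape[1])
    scores = scores - scores.max(axis=-1, keepdims=True)     # numerical stability
    w = np.exp(scores)
    w = w / w.sum(axis=-1, keepdims=True)
    return w @ value_params

def grow(key_params, value_params, n_new):
    """Append n_new zero-initialized parameter tokens (hypothetical helper)."""
    k_new = np.zeros((n_new, key_params.shape[1]))
    v_new = np.zeros((n_new, value_params.shape[1]))
    return np.vstack([key_params, k_new]), np.vstack([value_params, v_new])

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))     # 4 input tokens, d_in = 8
K = rng.normal(size=(16, 8))    # 16 key parameter tokens
V = rng.normal(size=(16, 5))    # 16 value parameter tokens, d_out = 5

K2, V2 = grow(K, V, n_new=8)    # 24 parameter tokens, same d_in and d_out

y_old = param_attention(x, K, V)
y_new = param_attention(x, K2, V2)
soft_old = softmax_param_attention(x, K, V)
soft_new = softmax_param_attention(x, K2, V2)

print("output shape before/after growth:", y_old.shape, y_new.shape)
print("GeLU/L2 variant unchanged:", np.allclose(y_old, y_new))
print("softmax variant unchanged:", np.allclose(soft_old, soft_new))
```

In this sketch the output shape stays `(4, 5)` regardless of how many parameter tokens are appended, and the GeLU/L2 variant reproduces the pre-growth output. The softmax variant does not, which is one reading of why the new parameters must be "initialized appropriately" and paired with a suitable normalization.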

Despite the potential benefits, the presenter raises concerns about the experimental results presented in the paper. They note discrepancies in the training token counts and question the validity of the comparisons made between TokenFormer and traditional Transformers. The presenter points out that the TokenFormer models, which were trained on additional training tokens, sometimes perform worse than their traditional counterparts despite having processed more data. This raises questions about the effectiveness of the proposed method and its practical implications.

Ultimately, the presenter concludes that while the TokenFormer architecture introduces a new way of thinking about parameter scaling in Transformers, it may not be as groundbreaking as claimed. They argue that the core idea of adding parameters and modifying the model’s capacity has been explored in previous research. The video emphasizes the importance of critically evaluating new methodologies and encourages viewers to consider the broader context of advancements in Transformer architectures.