The video demonstrates training a neural network to solve simple addition problems by randomly assigning weights and iteratively retaining improvements, bypassing traditional backpropagation and gradient descent. Although this unconventional method is inefficient and slower with larger datasets or networks, it surprisingly achieves notable accuracy, offering a unique perspective on neural network training and inspiring alternative approaches in machine learning.

The video explores an unconventional approach to training a neural network in PyTorch: instead of using backpropagation and gradient descent, the network’s weights are set purely by drawing random numbers. The presenter builds a simple neural network from scratch that implements only the forward pass, with no gradient calculations. The training dataset consists of two-digit by two-digit addition problems. On each iteration the weights are randomly reassigned and the resulting accuracy is measured; whenever the accuracy beats the best seen so far, the model is saved. The process repeats up to a set maximum number of iterations.
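The loop described above can be sketched in a few lines. This is a minimal illustration, not the presenter's code: it uses plain Python instead of PyTorch to stay dependency-free, and the layer sizes, the input and output scaling, the `tol` tolerance used to count a prediction as correct, and all function names are assumptions made for this example.

```python
import random

def make_dataset(n, rng):
    """Build n two-digit addition problems as ((a/100, b/100), a + b)."""
    data = []
    for _ in range(n):
        a, b = rng.randint(10, 99), rng.randint(10, 99)
        data.append(((a / 100, b / 100), a + b))
    return data

def random_weights(sizes, rng):
    """One list of rows per layer; each row holds n_in weights plus a bias."""
    return [
        [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_out)]
        for n_in, n_out in zip(sizes, sizes[1:])
    ]

def forward(weights, x):
    """Forward pass only: ReLU on hidden layers, linear output."""
    act = list(x)
    last = len(weights) - 1
    for i, layer in enumerate(weights):
        # zip stops after the n_in weights, so row[-1] is the bias term
        z = [sum(w * a for w, a in zip(row, act)) + row[-1] for row in layer]
        act = z if i == last else [max(0.0, v) for v in z]
    return act[0] * 200  # assumed output scaling back to the range of sums

def accuracy(weights, data, tol=5):
    """Fraction of examples predicted within tol of the true sum (assumed metric)."""
    hits = sum(abs(forward(weights, x) - y) <= tol for x, y in data)
    return hits / len(data)

def random_search(data, sizes=(2, 2, 1), max_iters=500, seed=0):
    rng = random.Random(seed)
    best_w, best_acc = None, -1.0
    for _ in range(max_iters):
        w = random_weights(sizes, rng)  # fresh random weights every iteration
        acc = accuracy(w, data)
        if acc > best_acc:              # keep ("save") only improvements
            best_w, best_acc = w, acc
    return best_w, best_acc

if __name__ == "__main__":
    data = make_dataset(100, random.Random(42))
    _, acc = random_search(data)
    print(f"best accuracy: {acc:.2%}")
```

Because every candidate is drawn independently, nothing learned in one iteration carries over to the next; the only memory is the best model saved so far.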

Initially, the network is configured with two neurons per layer and a training dataset of 100 examples. Despite the randomness, it reaches around 44% accuracy within a few hundred iterations, which is surprisingly fast progress given the lack of any traditional optimization. The presenter then increases the dataset size to 1,000 examples and adds more neurons to the hidden layers. The network still improves, but the process becomes slower and less stable because the error varies more across a larger dataset, highlighting the limits of this random weight assignment approach.
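Beyond the variability the presenter mentions, one plausible contributing factor is that adding neurons enlarges the space that random guessing has to cover. The sketch below counts the weights and biases of a fully connected network; the specific layer sizes are hypothetical, since the summary does not state the exact architecture.

```python
def count_parameters(sizes):
    """Weights plus biases of a fully connected network with these layer sizes."""
    return sum((n_in + 1) * n_out for n_in, n_out in zip(sizes, sizes[1:]))

# Hypothetical architectures: 2 inputs, two hidden layers, 1 output.
print(count_parameters([2, 2, 2, 1]))    # 15
print(count_parameters([2, 16, 16, 1]))  # 337
```

Every one of those numbers must land on a good value by chance at the same time, so widening the layers makes a lucky draw much less likely.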

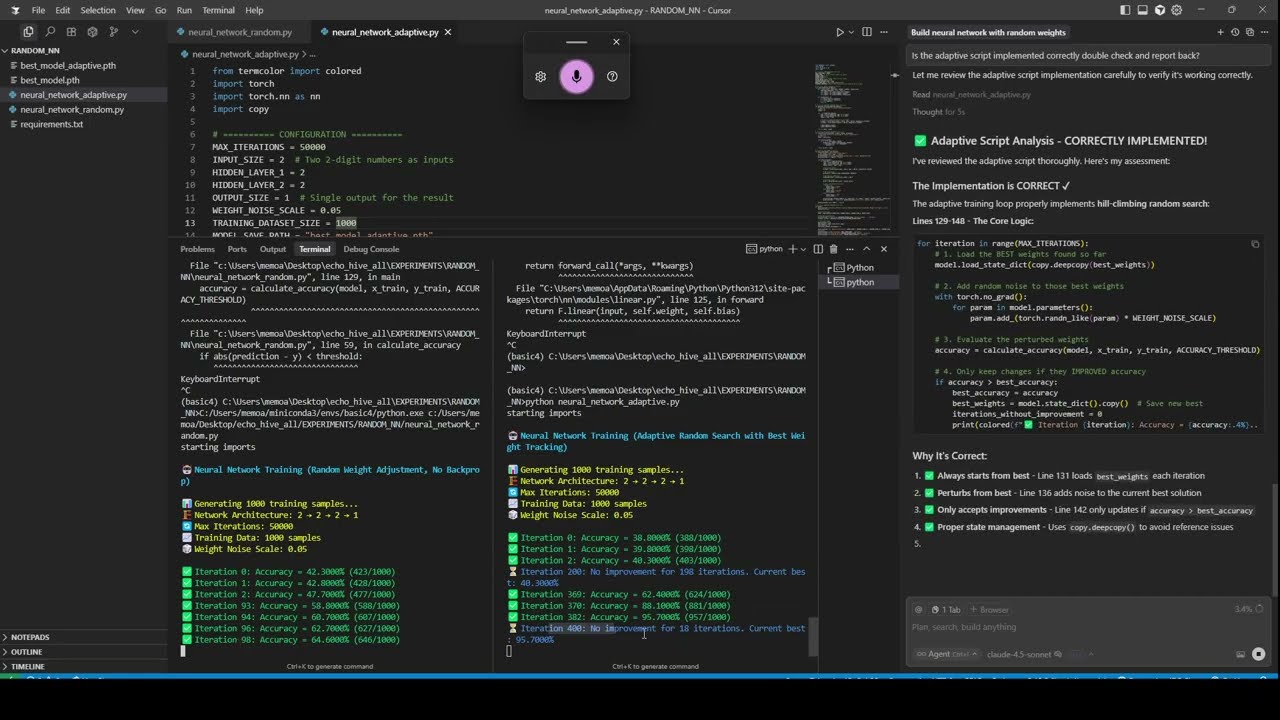

To improve the method, the presenter introduces an adaptive, evolution-like strategy in which the best-performing weights are retained and used as the starting point for subsequent iterations. This resembles a hill-climbing algorithm: random mutations are accepted only if they improve performance. After returning to two neurons per layer and running more iterations, the network achieves better accuracy, sometimes exceeding 70%. However, the presenter notes that increasing the number of neurons or the dataset size significantly increases the time required to converge, and the results remain inconsistent.
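A hill-climbing variant of this idea might look like the following. It is a sketch under stated assumptions rather than the presenter's implementation: plain Python instead of PyTorch, a fixed 2-2-1 network stored as a flat list of nine numbers, Gaussian mutations with a hypothetical `step` size, and the same assumed tolerance-based accuracy metric.

```python
import random

def make_dataset(n, rng):
    """Build n two-digit addition problems as ((a/100, b/100), a + b)."""
    data = []
    for _ in range(n):
        a, b = rng.randint(10, 99), rng.randint(10, 99)
        data.append(((a / 100, b / 100), a + b))
    return data

def predict(p, x):
    """2 inputs -> 2 ReLU hidden units -> 1 linear output; p holds 9 numbers."""
    h1 = max(0.0, p[0] * x[0] + p[1] * x[1] + p[2])
    h2 = max(0.0, p[3] * x[0] + p[4] * x[1] + p[5])
    return (p[6] * h1 + p[7] * h2 + p[8]) * 200  # assumed output scaling

def accuracy(p, data, tol=5):
    """Fraction of examples predicted within tol of the true sum (assumed metric)."""
    return sum(abs(predict(p, x) - y) <= tol for x, y in data) / len(data)

def hill_climb(data, max_iters=2000, step=0.1, seed=0):
    rng = random.Random(seed)
    best = [rng.uniform(-1, 1) for _ in range(9)]  # random starting point
    best_acc = accuracy(best, data)
    history = [best_acc]
    for _ in range(max_iters):
        # Mutate the current best weights instead of drawing fresh ones.
        cand = [w + rng.gauss(0, step) for w in best]
        acc = accuracy(cand, data)
        if acc > best_acc:  # accept the mutation only if it improves accuracy
            best, best_acc = cand, acc
        history.append(best_acc)
    return best, best_acc, history

if __name__ == "__main__":
    data = make_dataset(100, random.Random(42))
    _, acc, _ = hill_climb(data)
    print(f"best accuracy: {acc:.2%}")
```

Accepting only strict improvements means the best accuracy never decreases, but the search can stall when every nearby mutation scores the same, which may be one reason results vary from run to run.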

Further experiments involve running the random weight adjustment and the adaptive method side by side with varying iteration counts and dataset sizes. The adaptive method shows more promising results, achieving accuracy as high as 95.7% after many iterations, although the training time increases substantially. The presenter emphasizes that while this approach is not efficient or practical for complex problems, it serves as an interesting exercise and a different perspective on neural network training, especially since biological brains do not use backpropagation.

In conclusion, the video demonstrates that even with purely random weight assignments and no gradient-based optimization, a neural network can still improve its performance on simple tasks like addition problems. The presenter encourages experimentation with such unconventional ideas, noting that they can inspire new approaches in machine learning and that understanding different perspectives can be valuable in advancing AI research. The source code for these experiments is made available on Patreon, along with additional exclusive content.