The paper “Feedback Attention is Working Memory” introduces a feedback attention mechanism for Transformers, inspired by the neuroscience concept of working memory. The model adds memory tokens and lets each token attend to the memory of the previous block, so that information can be stored and retained across multiple blocks. It improves on certain tasks, but the results also expose a trade-off between memory capacity and performance.

Working memory is a short-term memory system that temporarily stores the information needed to perform a task. The paper brings this idea from neuroscience to Transformers through feedback attention, with the aim of extending their short-term memory capacity. The authors draw a parallel between working memory in the human brain, which is sustained by loops between the prefrontal cortex and the thalamus, and the proposed feedback attention mechanism. The main goal is a system in which each layer of the Transformer can retain and update information, resembling the continuous spiking activity within a feedback loop in the brain.

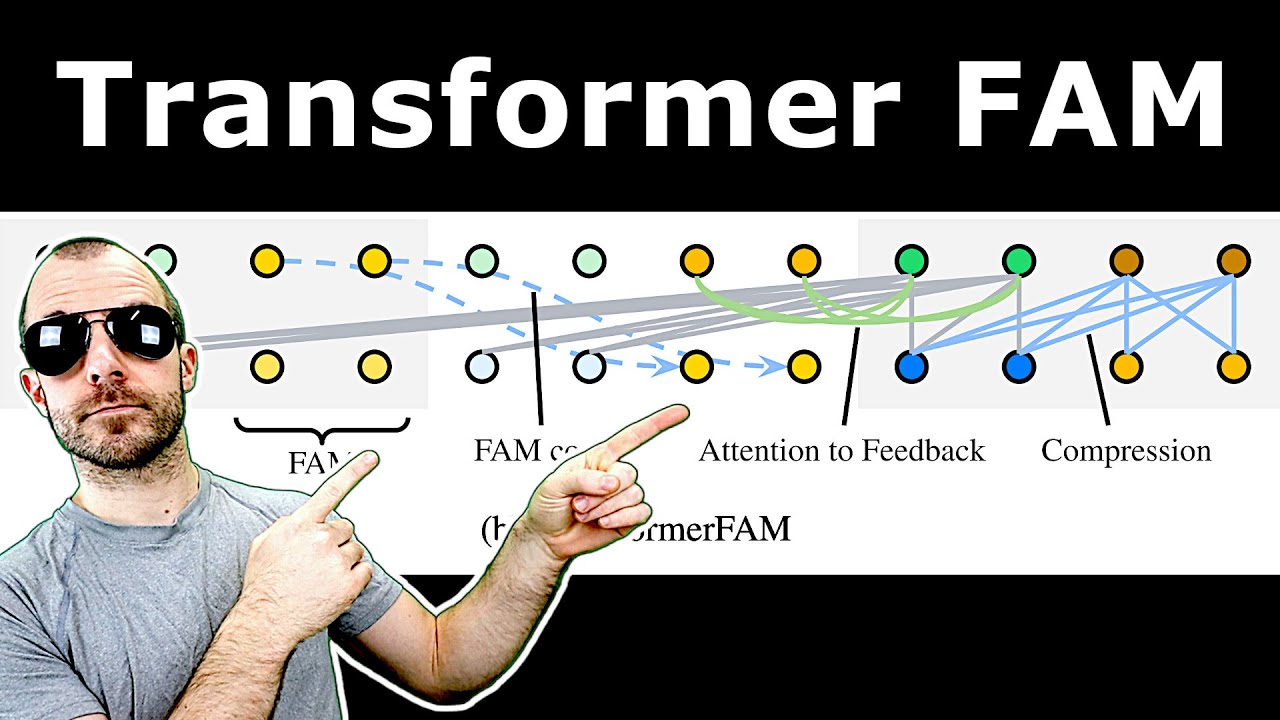

The researchers propose a feedback attention mechanism that adds special memory tokens to each block of tokens processed by the Transformer. These memory tokens retain and aggregate information from two sources: the current block and the memory of the previous block. Because each token attends to the memory tokens as well as to the current block, information can be carried across multiple blocks. This extends the effective context length of the Transformer and improves its ability to retain important information for longer periods.
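The block-by-block flow described above can be sketched in plain Python. This is a minimal illustration under simplifying assumptions, not the authors' implementation: it uses a single attention head with no learned projections (each vector acts as its own query, key, and value), and the names `attend`, `feedback_attention`, `block_size`, and `init_memory` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]


def feedback_attention(tokens, block_size, init_memory):
    """Process `tokens` block by block, carrying memory vectors forward.

    Assumption: no learned projections, so keys and values are the raw vectors.
    """
    memory = [list(m) for m in init_memory]
    outputs = []
    for start in range(0, len(tokens), block_size):
        block = tokens[start:start + block_size]
        # Each token attends to the previous block's memory and, causally,
        # to the tokens of its own block.
        for i, query in enumerate(block):
            context = memory + block[:i + 1]
            outputs.append(attend(query, context, context))
        # Each memory vector is updated by attending to the current block
        # and to the previous memory (the feedback step).
        context = block + memory
        memory = [attend(m, context, context) for m in memory]
    return outputs, memory
```

In this sketch, passing an empty `init_memory` reduces the computation to plain blockwise attention, where no information crosses block boundaries. With at least one memory vector, a change in the first block can influence outputs in later blocks through the memory alone.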

In their experiments, the authors compare feedback attention with blockwise self-attention and report improvements on certain tasks. They also add multiple memory tokens to increase memory capacity, but find that performance saturates and then declines once the memory length exceeds a certain threshold. This points to a trade-off between memory capacity and performance, and the authors suggest that limited memory space can be beneficial because it forces the model to compress information. The discussion also notes that gradients must be allowed to flow through the memory segment for the model to learn which information is worth retaining.

While the feedback attention mechanism shows promising results on some benchmarks, the authors acknowledge limitations and areas for improvement. They emphasize the need to refine the architecture of feedback loops in Transformers and to explore how working memory can be transferred to long-term memory. They also describe techniques for extending context at inference time and report their unsuccessful attempts at creating feedback loops. The paper concludes by calling for further research on integrating attention-based working memory into deep learning and on the open challenges of feedback attention architectures, with the aim of encouraging new approaches to memory mechanisms in Transformers.