The video shows how to run several open-source large language models (LLMs) on the Raspberry Pi 5. It walks through installation, compares model performance, and demonstrates the device’s potential for tasks like text generation and image interpretation. Smaller models such as TinyLlama respond faster on the Raspberry Pi 5, while larger models such as Llama 2 show slower evaluation rates, which highlights the trade-off between model size and processing speed.

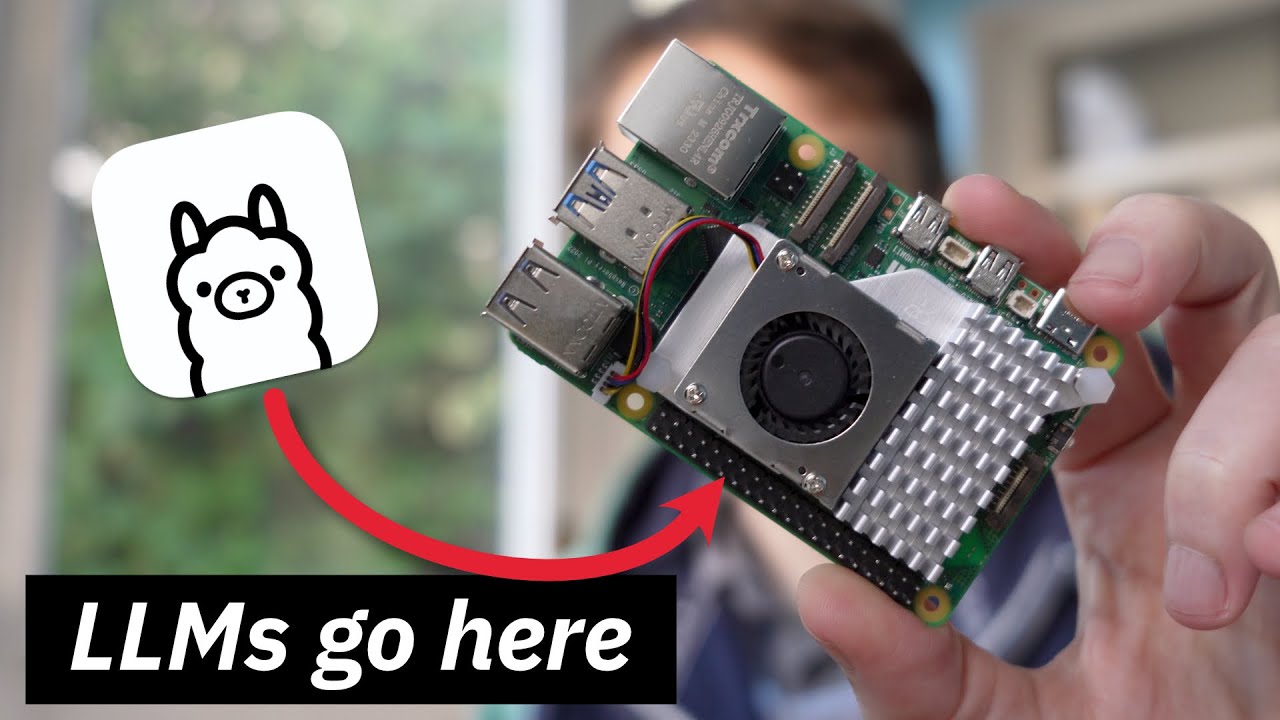

The Raspberry Pi 5 is a popular, affordable single-board computer, equipped here with 8 GB of RAM, which makes it a versatile tool for many applications. The video focuses on running an open-source large language model (LLM) on the Raspberry Pi 5 and comparing its performance with other devices such as the MacBook Pro. It demonstrates the installation of the TinyLlama model and tests basic prompts to gauge response time. TinyLlama runs smoothly on the Raspberry Pi 5 and delivers satisfactory evaluation rates.
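An evaluation rate is usually reported as generated tokens per second. As a minimal sketch of how such a figure is derived, the helper below divides a token count by the generation time. The function name `eval_rate` and the sample numbers are hypothetical and chosen only for illustration; they are not measurements from the video.

```python
def eval_rate(tokens_generated: int, eval_seconds: float) -> float:
    """Return generation speed in tokens per second."""
    if eval_seconds <= 0:
        raise ValueError("eval_seconds must be positive")
    return tokens_generated / eval_seconds


# Illustrative numbers only, not measurements from the video.
small_model = eval_rate(tokens_generated=120, eval_seconds=10.0)
large_model = eval_rate(tokens_generated=120, eval_seconds=60.0)

print(f"small model: {small_model:.1f} tokens/s")
print(f"large model: {large_model:.1f} tokens/s")
```

Comparing models on this single number, with the same prompt and the same hardware, keeps the comparison fair even when the responses differ in length.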

The video creator then downloads the Llama 2 and uncensored Llama 2 models and tests their performance on the Raspberry Pi 5. The uncensored Llama 2 model responds more slowly than TinyLlama, most likely because it is larger and has many more parameters. This highlights the need to balance model size against computational efficiency: on the Raspberry Pi 5, smaller models like TinyLlama are the better choice when fast responses matter.
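A rough back-of-the-envelope calculation suggests why the larger model is slower. The sketch below estimates the memory needed for model weights alone, assuming the commonly cited parameter counts of about 1.1 billion for TinyLlama and 7 billion for the smallest Llama 2 variant, and assuming 4-bit quantized weights. The video summary does not state which variant or quantization was used, so treat these inputs as assumptions. The helper name `weights_gib` is hypothetical.

```python
def weights_gib(n_params: float, bits_per_param: int) -> float:
    """Approximate size of the model weights in GiB (weights only)."""
    total_bytes = n_params * bits_per_param / 8
    return total_bytes / 2**30


# Assumed parameter counts; the video summary does not state them.
models = {
    "TinyLlama (1.1B)": 1.1e9,
    "Llama 2 (7B)": 7e9,
}

for name, n_params in models.items():
    # Assumption: 4-bit quantized weights.
    size = weights_gib(n_params, bits_per_param=4)
    print(f"{name}: ~{size:.1f} GiB of weights at 4-bit")
```

Under these assumptions both models fit in 8 GB of RAM, but the larger one has several times more weight data to read for each generated token, which is consistent with its slower evaluation rate.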

The video also demonstrates image interpretation on the Raspberry Pi 5 by running a model on a downloaded image of a Raspberry Pi circuit board. The model describes the image’s content accurately and entirely locally, without third-party services, but the process is notably slow and takes over five minutes. The resulting text shows that the model can produce detailed descriptions of visual content.

The video concludes by emphasizing what LLMs can do on the Raspberry Pi 5, including local image analysis and responsive text generation. The trade-off between model size and processing speed remains evident, however, with larger models like Llama 2 showing slower evaluation rates than smaller ones. Viewers are encouraged to try running LLMs on their own Raspberry Pi 5 and are pointed to related videos that cover advanced features such as API integration.

Overall, the video serves as a practical guide for users who want to use the Raspberry Pi 5’s computing power to run LLMs locally. It covers the installation process, compares model performance, and showcases the Raspberry Pi 5’s potential for tasks like text generation and image interpretation. Although performance varies with model size and complexity, the Raspberry Pi 5 proves to be a capable platform for experimenting with LLMs and other AI applications.