The text explains how transformers, key components in models like GPT-3, use attention mechanisms to focus on relevant parts of input sequences and update token embeddings to capture contextual meaning. By processing data with multiple attention heads in parallel, transformers can efficiently learn diverse contextual relationships, enabling them to encode higher-level ideas and revolutionize natural language processing.

In the previous chapter, we delved into the internal workings of a transformer, a key technology in large language models like GPT-3. The transformer model aims to predict the next word in a text. To do so, it associates each token (roughly, a word or a piece of a word) with a high-dimensional vector known as its embedding. These embeddings are adjusted as they pass through the transformer so that they capture richer contextual meaning rather than just the meaning of an individual word. The attention mechanism is a crucial component of a transformer, allowing the model to focus on the relevant parts of the input sequence.

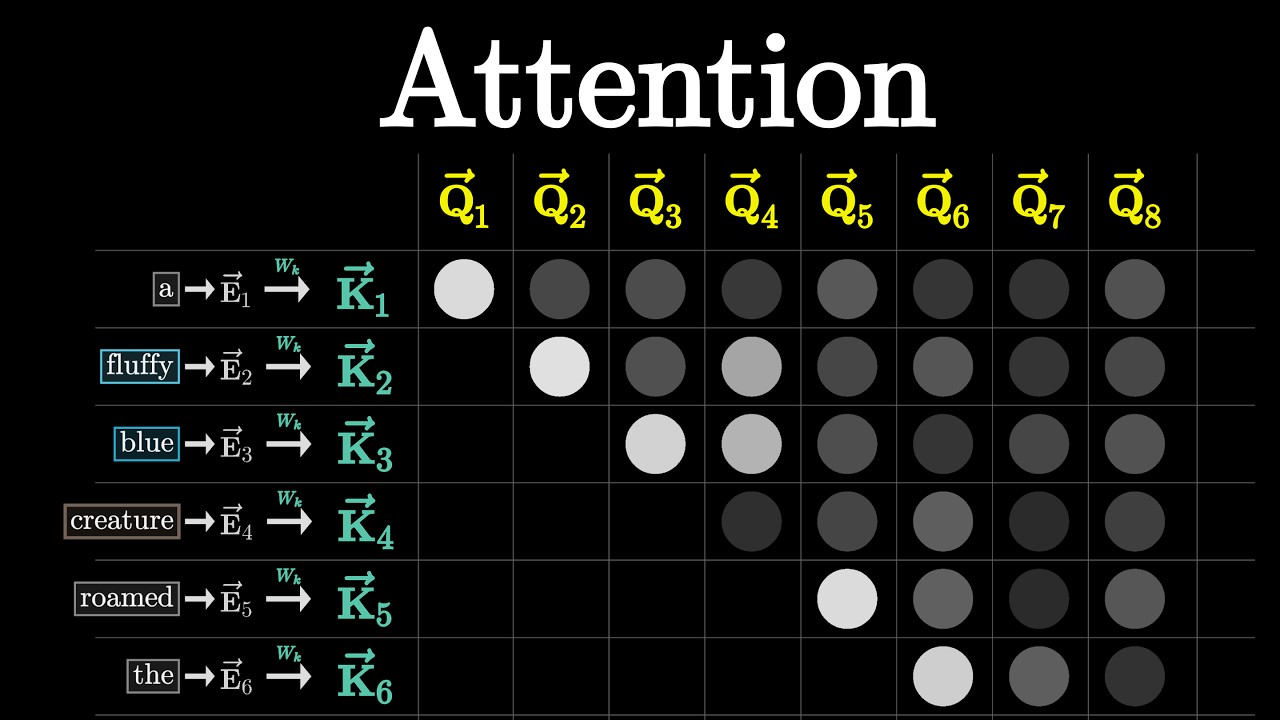

The attention mechanism starts from each token's embedding and uses three learned matrices to produce a query, a key, and a value vector for that token. Attention scores are calculated by comparing each token's query with every token's key. After normalization, these scores determine how relevant each token is for updating the embeddings of the others. The update to a token is then a weighted sum of value vectors, with the normalized scores as the weights. This enables contextual updates, such as adjectives adjusting the meanings of nouns. The resulting attention pattern guides the model in refining token embeddings to capture contextual nuances.
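The steps in this paragraph can be sketched for a single attention head in NumPy. This is a minimal illustration, not the implementation used by GPT-3 or any particular library: the function names, the matrix names `W_q`, `W_k`, `W_v`, and the toy sizes are assumptions made here. The scaling by the square root of the head dimension and the causal mask (which stops a token from attending to later tokens) are standard conventions that the text above does not spell out.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_head(E, W_q, W_k, W_v, causal=True):
    """Single attention head (illustrative sketch).

    E:   (n_tokens, d_model) token embeddings
    W_q, W_k, W_v: (d_model, d_head) learned matrices (hypothetical names)
    Returns (weights, weighted_values).
    """
    Q = E @ W_q  # one query vector per token
    K = E @ W_k  # one key vector per token
    V = E @ W_v  # one value vector per token

    # Score for (i, j): how relevant token j is to token i.
    scores = Q @ K.T / np.sqrt(Q.shape[-1])

    if causal:
        # Assumption: next-token models mask out later positions.
        n = E.shape[0]
        future = np.triu(np.ones((n, n), dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)

    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights, weights @ V         # weighted sum of value vectors

# Toy usage with random numbers (not trained weights).
rng = np.random.default_rng(0)
E = rng.normal(size=(4, 8))                        # 4 tokens, d_model = 8
W_q, W_k, W_v = (rng.normal(size=(8, 3)) for _ in range(3))
weights, out = attention_head(E, W_q, W_k, W_v)
print(weights.shape, out.shape)
```

Each row of `weights` is the attention pattern for one token: how much of every other token's value vector flows into its update.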

Within a transformer, attention is typically implemented using multiple attention heads running in parallel. Each attention head has its own set of query, key, and value matrices, allowing the model to capture diverse contextual relationships. The multi-headed attention mechanism enhances the model’s capacity to learn various ways in which context influences word meanings. By running data through multiple layers of attention blocks and other operations, the model can encode higher-level and abstract ideas beyond simple word associations.
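As a rough sketch of the multi-headed idea, the snippet below runs several heads, each with its own query, key, and value matrices, and combines their results into one update to the embeddings. The names `multi_head_attention`, `heads`, and `W_o`, the concatenate-then-project step, and the toy sizes are assumptions chosen for illustration; causal masking, which next-token models typically apply, is left out to keep the example short. The Python loop is there for readability only, since practical implementations usually compute all heads in one batched operation.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax over the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(E, heads, W_o):
    """Multi-head attention with a residual update (illustrative sketch).

    E:     (n_tokens, d_model) token embeddings
    heads: list of (W_q, W_k, W_v) tuples, each matrix (d_model, d_head)
    W_o:   (n_heads * d_head, d_model) output projection (hypothetical name)
    """
    head_outputs = []
    for W_q, W_k, W_v in heads:
        Q, K, V = E @ W_q, E @ W_k, E @ W_v
        scores = Q @ K.T / np.sqrt(Q.shape[-1])
        weights = softmax(scores, axis=-1)
        head_outputs.append(weights @ V)  # (n_tokens, d_head)

    # Put the heads side by side, then map back to the embedding size.
    combined = np.concatenate(head_outputs, axis=-1)
    # Add the result to the original embeddings rather than replacing them.
    return E + combined @ W_o

# Toy usage: 5 tokens, d_model = 8, two heads of size 4 (random weights).
rng = np.random.default_rng(0)
E = rng.normal(size=(5, 8))
heads = [tuple(rng.normal(size=(8, 4)) for _ in range(3)) for _ in range(2)]
W_o = rng.normal(size=(2 * 4, 8))
updated = multi_head_attention(E, heads, W_o)
print(updated.shape)
```

Because every head has separate matrices, each one can produce a different attention pattern over the same tokens.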

The attention mechanism is highly parallelizable: the scores for all pairs of tokens can be computed together as matrix operations, which makes it efficient to run on GPUs and lets models scale to larger sizes and datasets. The success of the attention mechanism lies not only in its ability to capture contextual dependencies but also in these parallel processing capabilities. The transformer architecture, with its attention mechanism and multi-layer perceptrons, has revolutionized natural language processing due to its ability to handle large-scale data and tasks effectively. Understanding attention is therefore essential for understanding how modern AI models function and perform.
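One way to see why attention parallelizes well is that every query-key score is independent of the others, so the whole grid of scores can be written as a single matrix product. The small comparison below is only an illustration with hypothetical function names and random data; it shows that a token-by-token loop and one matrix multiplication give the same scores, and the latter is the kind of operation GPUs are built to run quickly.

```python
import numpy as np

def scores_loop(Q, K):
    # Compute each query-key dot product one pair at a time.
    n_q, n_k = Q.shape[0], K.shape[0]
    S = np.empty((n_q, n_k))
    for i in range(n_q):
        for j in range(n_k):
            S[i, j] = Q[i] @ K[j]
    return S

def scores_matmul(Q, K):
    # Compute all query-key dot products in one matrix product.
    return Q @ K.T

rng = np.random.default_rng(0)
Q = rng.normal(size=(6, 4))  # 6 tokens, head dimension 4
K = rng.normal(size=(6, 4))
print(np.allclose(scores_loop(Q, K), scores_matmul(Q, K)))
```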