The video presents the Continuous Thought Machine (CTM), an AI model inspired by biological brains that processes information over multiple time steps using an internal clock and neuron-level learners. This lets it solve complex tasks such as 2D mazes by building internal spatial representations and dynamically focusing attention. The video also discusses privacy concerns in AI data collection, introduces the Delete Me service for personal data protection, and highlights CTM’s synchronization mechanism, which captures temporal relationships to improve predictions through iterative thought processes.

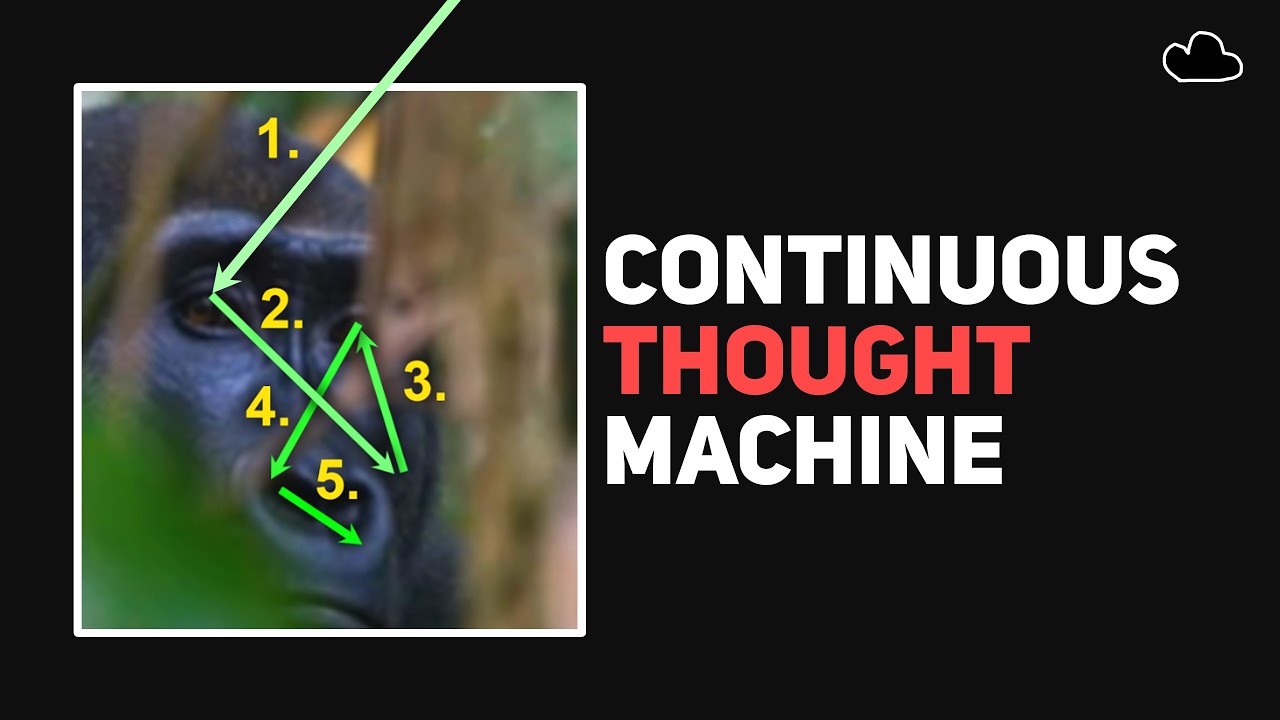

The video introduces the Continuous Thought Machine (CTM), an AI model developed by Sakana AI and inspired by biological brain processes. Unlike current state-of-the-art AI models, which struggle with perceiving time, CTM incorporates an internal clock mechanism that allows it to process information over multiple time steps. This temporal awareness enables the model to solve 2D mazes directly from raw images without positional hints, which suggests that it builds internal spatial representations and can generalize to larger mazes. The model’s attention mechanism mimics human-like focus, examining different parts of an image over time, and its accuracy improves the longer it “thinks.”

The video also highlights the broader context of AI research, noting the rapid pace of advancements and raising concerns about privacy in the age of AI-powered data scraping. It introduces Delete Me, a service that helps users protect their personal data by scanning the web, submitting removal requests to data brokers, and continuously monitoring for new listings. This sponsorship segment underscores the importance of safeguarding personal information as AI technologies become more capable of collecting and analyzing vast amounts of data effortlessly.

Technically, CTM differs from traditional recurrent neural networks by using complex neuron-level learners that incorporate histories of activations, allowing for more dynamic and time-sensitive responses. The model’s internal clock ticks forward, enabling it to track past activations and focus attention dynamically. Inputs are first processed through feature extractors and attention layers before being passed to a synapse model, which integrates current inputs with previous neuron activations. This synapse model acts as a communication hub, redistributing signals to individual neuron-level models (NLMs) that maintain a short memory of recent activations to generate new post-activations.
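The data flow described above can be sketched as a single "tick" in NumPy. This is a minimal illustration, not the model's actual implementation: the sizes `D`, `M`, and `D_IN`, the single linear layer standing in for the synapse model, the one-layer neuron-level models, and the function name `tick` are all assumptions made for the example, and the feature extractor and attention layers are replaced by a fixed feature vector.

```python
import numpy as np

rng = np.random.default_rng(0)

D = 8      # number of neurons (hypothetical size)
M = 4      # length of each neuron's activation memory (hypothetical)
D_IN = 6   # size of the attended input features (hypothetical)

# Synapse model: here just one linear layer that mixes the previous
# post-activations with the current input features (an assumption).
W_syn = rng.normal(scale=0.1, size=(D, D + D_IN))

# Neuron-level models (NLMs): every neuron owns a private weight vector
# that it applies to its own short history of incoming activations.
W_nlm = rng.normal(scale=0.1, size=(D, M))
b_nlm = np.zeros(D)


def tick(features, z_prev, history):
    """Advance the internal clock by one step.

    features: (D_IN,) input features for this tick
    z_prev:   (D,)    post-activations from the previous tick
    history:  (D, M)  each neuron's most recent M incoming activations
    """
    # The synapse model integrates inputs with previous activations.
    pre = W_syn @ np.concatenate([z_prev, features])
    # Drop the oldest entry and append the newest one for every neuron.
    history = np.concatenate([history[:, 1:], pre[:, None]], axis=1)
    # Each neuron reads only its own history row with its own weights.
    z_new = np.tanh(np.einsum("dm,dm->d", W_nlm, history) + b_nlm)
    return z_new, history


# Run a few ticks on a fixed input to show how state is carried forward.
z, hist = np.zeros(D), np.zeros((D, M))
x = rng.normal(size=D_IN)
for _ in range(3):
    z, hist = tick(x, z, hist)
```

The point of the sketch is the per-neuron weights in `W_nlm`: unlike a standard recurrent layer, where one shared activation function is applied to every unit, each neuron here computes its output from its own history.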

A key innovation in CTM is the synchronization step, where pairs of neurons’ activation histories are compared to quantify how their activity patterns evolve relative to each other over time. This synchronization data forms a latent representation that captures temporal relationships more effectively than static activations alone. The model uses this representation to generate outputs and update attention queries for subsequent ticks. By running for multiple ticks, CTM produces a series of outputs that are combined to form a robust final prediction, reflecting a deeper understanding developed through iterative thought processes.
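A rough sketch of the synchronization step follows. It assumes that synchronization between two neurons is measured as the inner product of their post-activation histories, and that per-tick outputs are combined by simple averaging; both choices, along with the function names `synchronization` and `combine_outputs`, are assumptions for illustration, since the summary does not specify them.

```python
import numpy as np


def synchronization(post_history):
    """Pairwise synchronization of neuron activity over time.

    post_history: (D, T) array, one row of post-activations per neuron.
    Returns the upper triangle of the (D, D) inner-product matrix as a
    flat vector, so each neuron pair appears exactly once.
    """
    S = post_history @ post_history.T
    rows, cols = np.triu_indices(S.shape[0])
    return S[rows, cols]


def combine_outputs(outputs_per_tick):
    """Combine per-tick outputs of shape (T, C) by averaging (an assumption)."""
    return np.mean(outputs_per_tick, axis=0)


# Two neurons observed over two ticks.
history = np.array([[1.0, 2.0],
                    [3.0, 4.0]])
sync = synchronization(history)  # [5., 11., 25.]
```

In this sketch, the vector `sync` plays the role of the latent representation: it would be projected to an output and to the next attention query at every tick, and the resulting per-tick outputs would then be passed to something like `combine_outputs`.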

Finally, the video explains how CTM handles initialization at the first tick, where no historical data exists: the initial values are treated as parameters and optimized during training rather than being set by random initialization. The presenter encourages viewers interested in cutting-edge AI research to subscribe to his newsletter for more in-depth paper breakdowns. The video closes with acknowledgments to supporters and a reminder to follow on social media, emphasizing the potential of biologically inspired AI models like CTM to advance the field.
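The idea of learning the first-tick values can be illustrated with a toy example. This is only a sketch under stated assumptions: the fixed matrix `W`, the `target` vector, the squared-error loss, and the plain gradient-descent loop are all hypothetical stand-ins, chosen to show an initial state being updated by gradients like any other parameter.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 4

W = rng.normal(scale=0.5, size=(D, D))      # fixed toy weights (hypothetical)
target = np.array([0.5, -0.5, 0.25, 0.0])   # toy training target (hypothetical)

# The state used at the first tick is a trainable parameter.
z_init = np.zeros(D)


def loss_and_grad(z0):
    """Squared error of tanh(W @ z0) against the target, and its gradient in z0."""
    out = np.tanh(W @ z0)
    err = out - target
    loss = 0.5 * np.sum(err ** 2)
    grad = W.T @ (err * (1.0 - out ** 2))
    return loss, grad


initial_loss, _ = loss_and_grad(z_init)
for _ in range(500):
    _, g = loss_and_grad(z_init)
    z_init = z_init - 0.05 * g   # gradient step on the initial state itself
final_loss, _ = loss_and_grad(z_init)
```

After training, `z_init` is reused as the starting state for every new input, so the first tick begins from values that were useful during training.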