The text introduces xLSTM (Extended Long Short-Term Memory), developed by Sepp Hochreiter's team to enhance traditional LSTM models using insights from Transformer architectures. The paper presents two new LSTM variants, sLSTM and mLSTM, which aim to address known limitations of the LSTM and improve its performance on language modeling. It supports them with experimental evaluations, and optimization work is ongoing.

The text discusses xLSTM, which stands for Extended Long Short-Term Memory, introduced by a team led by Sepp Hochreiter. The paper explores how far recurrent architectures, specifically the LSTM, can be pushed in comparison to Transformer-based models for language modeling. It reviews the historical significance of the LSTM, highlighting its impact and widespread use across many domains despite having been invented in the 1990s. The authors aim to enhance the LSTM using insights from modern deep learning techniques such as Transformers.

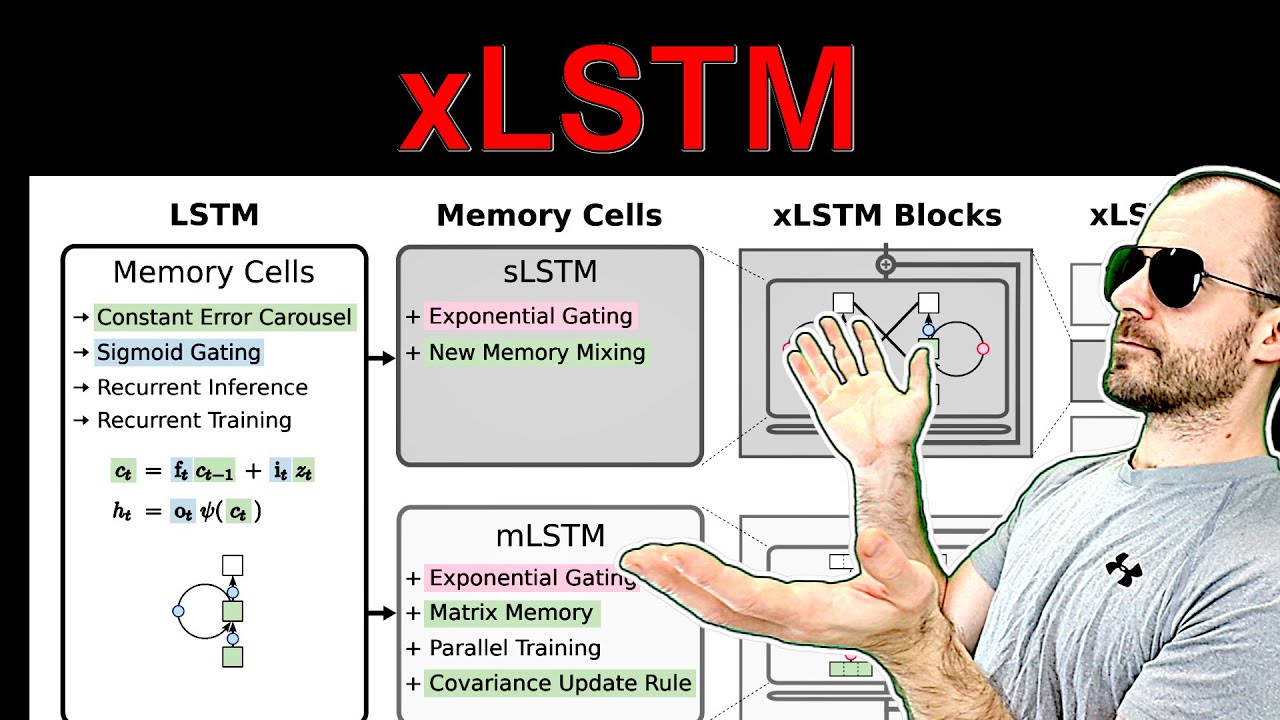

The paper introduces two new LSTM variants: sLSTM and mLSTM. sLSTM modifies the traditional LSTM by replacing gate nonlinearities with exponential functions (exponential gating) and by introducing a new memory mixing technique. mLSTM, by contrast, uses a matrix-valued cell state as an associative memory and supports parallel training, extending the LSTM's memory capacity without introducing additional parameters. Both variants are designed to address limitations of the traditional LSTM while drawing on insights from Transformer architectures.
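The two update rules can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the summary gives no formulas, so the equations follow the general form of the published xLSTM update rules as best understood here. The function names (`slstm_step`, `mlstm_step`), the choice to pass gate pre-activations directly instead of computing them from weights, the exponential forget gate in the scalar cell, and the sigmoid forget gate in the matrix cell are all assumptions made for brevity.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def slstm_step(c, n, m, z_pre, i_pre, f_pre, o_pre):
    """One sLSTM-style step on gate pre-activations (hypothetical helper).

    c: cell state, n: normalizer state, m: stabilizer state (log scale).
    All arguments are arrays of the same shape.
    """
    # Stabilizer: track the running maximum in log space so exp() cannot overflow.
    m_new = np.maximum(f_pre + m, i_pre)
    i_gate = np.exp(i_pre - m_new)       # exponential input gate, stabilized
    f_gate = np.exp(f_pre + m - m_new)   # exponential forget gate, stabilized
    c_new = f_gate * c + i_gate * np.tanh(z_pre)
    n_new = f_gate * n + i_gate          # normalizer accumulates gate mass
    h = sigmoid(o_pre) * (c_new / n_new)
    return c_new, n_new, m_new, h


def mlstm_step(C, n, q, k, v, i_pre, f_pre, o_pre):
    """One mLSTM-style step with a d x d matrix memory (hypothetical helper).

    C: (d, d) memory, n: (d,) normalizer, q/k/v: (d,) query/key/value,
    i_pre and f_pre: scalars, o_pre: (d,). Unstabilized, for readability.
    """
    d = k.shape[0]
    k = k / np.sqrt(d)                   # scale the key as in attention
    i_gate = np.exp(i_pre)
    f_gate = sigmoid(f_pre)
    # Store the key-value pair as an outer product (associative memory write).
    C_new = f_gate * C + i_gate * np.outer(v, k)
    n_new = f_gate * n + i_gate * k
    # Retrieve with the query; the lower bound of 1 keeps the division well behaved.
    denom = max(abs(float(n_new @ q)), 1.0)
    h = sigmoid(o_pre) * (C_new @ q) / denom
    return C_new, n_new, h
```

The matrix step makes no use of a previous hidden state, which is why a sequence of such updates can be computed in parallel. The scalar step, as sketched, also takes its pre-activations as given, but in a full cell those would depend on the previous hidden state through recurrent weights, which forces sequential processing.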

The authors run experiments comparing xLSTM models against existing baselines on a range of tasks. The results indicate competitive performance, but work to refine and optimize the models is ongoing. The limitations of xLSTM include its computational cost, especially when scaling to larger models, and the difficulty of achieving fast parallel training where recurrent connections remain.

In conclusion, the paper asks how far LSTM architectures can be scaled for language modeling relative to Transformer models. The xLSTM models show promising performance but require further optimization and exploration. The authors plan to update the paper with improved baseline comparisons and to release the code and models for wider experimentation and adoption in the research community. Overall, xLSTM offers a new way of enhancing LSTM architectures with modern deep learning techniques.