The video exposes how AI systems are deliberately used by insurance companies to deny medical treatments and claims, prioritizing profit over patient care and often overruling doctors’ recommendations. It highlights the ethical concerns and financial incentives behind these practices while encouraging patients to use AI tools to appeal denials and advocate for fair healthcare treatment.

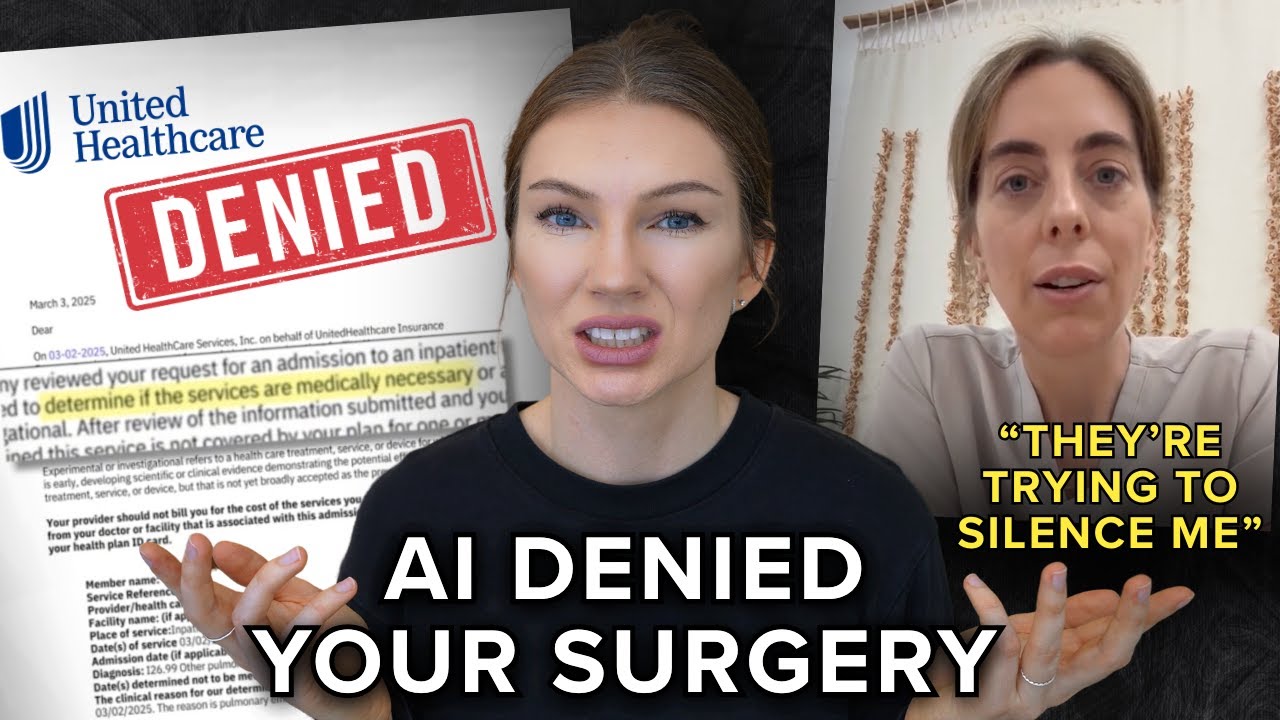

The video discusses the growing and concerning role of artificial intelligence (AI) in healthcare insurance, particularly in the denial of medical treatments and claims. Patients often face insurance denials for necessary procedures, such as MRIs or inpatient stays, based on AI assessments that determine whether treatments are “medically necessary.” These AI systems analyze vast amounts of data to make rapid decisions, often overruling doctors’ recommendations. The video highlights that this practice is not a glitch but an intentional design to minimize payouts and maximize profits for insurance companies.

Insurance companies use AI at multiple stages: underwriting policies, setting prices, and deciding on claim approvals. According to the video, these AI systems are designed to deny as many claims as possible, which causes significant hardship for patients. For example, a 91-year-old man named Gene Lokken had his rehabilitation coverage cut short by AI decisions, forcing his family to pay exorbitant out-of-pocket costs. Investigations reveal that insurance companies and their AI partners, such as EviCore and naviHealth, manipulate algorithms to increase denial rates, sometimes boasting about raising denials by 15%. In effect, these systems decide who receives care and who does not, which raises ethical concerns about AI's role in life-or-death decisions.

The video also exposes how insurance companies outsource these AI decisions to third-party firms they often own, which lets them control and tweak denial algorithms to save money. Human doctors employed by insurers rarely override AI decisions because doing so can put their jobs at risk. Many claims are reviewed in mere seconds, with doctors simply clicking to approve denials suggested by AI. This leads to a "sentinel effect," where doctors stop requesting certain procedures because they expect them to be denied, which harms patient care. The video contrasts this with traditional Medicare, which has fewer prior authorization requirements, but warns that Medicare Advantage plans, run by private insurers, increasingly use AI denial systems.

A particularly troubling development is the introduction of AI-driven prior authorization requirements into traditional Medicare, a government program funded by taxpayers. Pilot programs in several states aim to reduce waste by using AI to approve or deny treatments, and the participating companies are paid a percentage of the money saved through denials. This creates a direct financial incentive to deny care, potentially at the expense of patient health. Despite numerous lawsuits and regulatory violations, the penalties imposed on these companies are small compared to their profits, so the system persists.

The video concludes by encouraging patients to appeal insurance denials, noting that many are now using generative AI tools to help navigate the complex medical and legal language involved in appeals. While AI is often part of the problem in healthcare insurance, it can also be part of the solution by empowering patients to fight back. The overarching message is a warning about the dangers of relying on AI systems that prioritize cost-cutting over patient well-being and a call for greater awareness and advocacy to ensure fair treatment in healthcare.