The video benchmarks an HP Z440 AI server upgraded with a single NVIDIA GeForce RTX 3090 against the server's previous dual RTX 3060 setup. It shows that the 3090 is significantly faster than the two 3060s across the AI models tested. The host highlights the 3090's larger per-card VRAM (24 GB versus 12 GB) and greater processing power, which make it the better choice for demanding AI applications, especially with larger models.

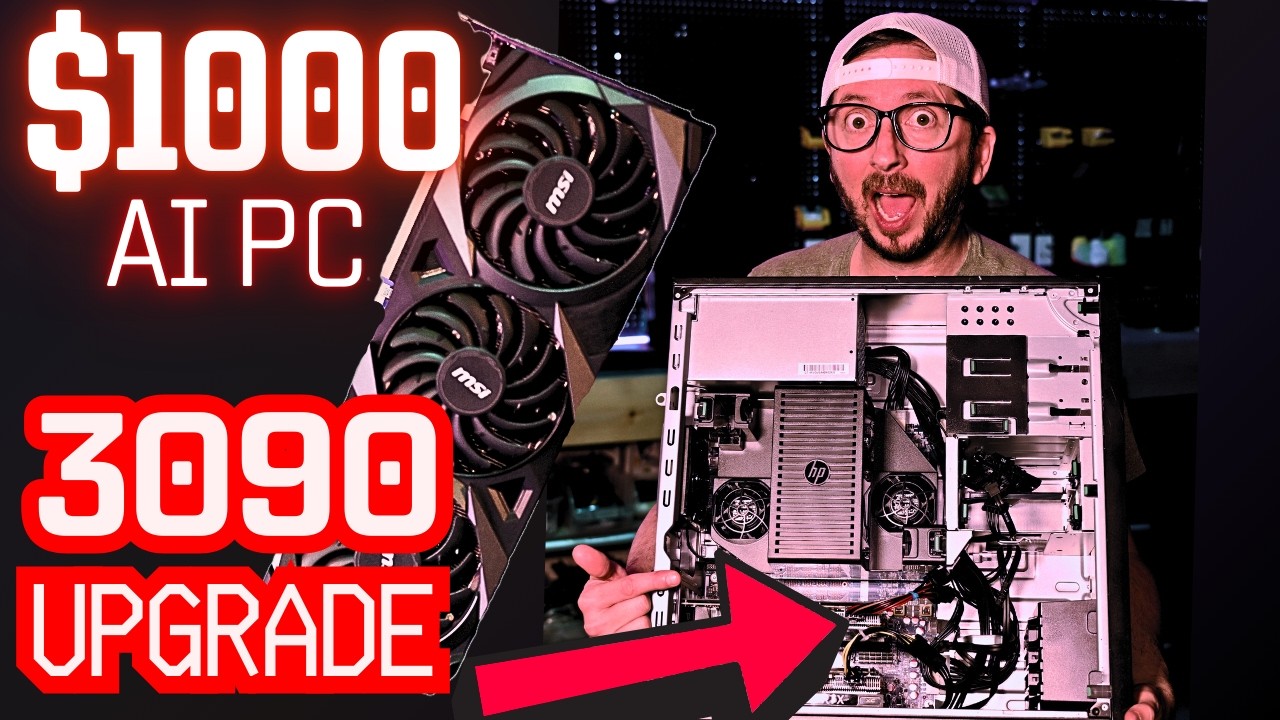

In the video, the host tests an HP Z440 AI server upgraded with an NVIDIA GeForce RTX 3090 and compares it to the server's previous configuration with two RTX 3060 GPUs. The main question is how a single 3090 performs against the dual 3060s across a range of AI model benchmarks. The host notes that the Z440's power envelope can support the 3090. The 3090's 24 GB of VRAM matches the combined 24 GB of the two 12 GB 3060s, so the same models and context sizes fit on both setups, which allows a direct performance comparison.
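Whether "the same models and contexts" fit in 24 GB can be checked with a rough, weights-only estimate. This is a minimal sketch, assuming a quantized model's weights take about parameters × bits-per-weight ÷ 8 bytes. The 4.5 bits-per-weight figure and the 2 GiB reserve for context and runtime overhead are illustrative assumptions, not values from the video, and the KV cache is not modeled. Treating the two 3060s as one 24 GB pool is also a simplification, since a runtime has to split the model's layers between the cards.

```python
# Rough, weights-only VRAM estimate for a quantized model.
# Assumption: weight bytes ~= parameters * bits_per_weight / 8.
# KV cache (context), CUDA context and runtime overhead are NOT modeled;
# `reserve_gib` is a hypothetical allowance for them.

GIB = 2**30

RTX_3090_VRAM_GIB = 24.0       # one card
DUAL_3060_VRAM_GIB = 2 * 12.0  # two cards; pooling them is a simplification


def weight_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GiB."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / GIB


def fits(params_billion: float, bits_per_weight: float,
         vram_gib: float, reserve_gib: float = 2.0) -> bool:
    """True if the weights plus `reserve_gib` fit within `vram_gib`."""
    return weight_gib(params_billion, bits_per_weight) + reserve_gib <= vram_gib


if __name__ == "__main__":
    # 4.5 bits/weight is an assumed average for a 4-bit quantization.
    print(f"27B at 4.5 bpw: ~{weight_gib(27, 4.5):.1f} GiB of weights")
    print("fits on one RTX 3090:", fits(27, 4.5, RTX_3090_VRAM_GIB))
    print("fits on 2x RTX 3060:", fits(27, 4.5, DUAL_3060_VRAM_GIB))
```

Under these assumptions, a 27B model's weights come to roughly 14 GiB, which fits on either setup; real usage grows with context length.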

Testing begins with a simple query to gauge the 3090's performance. The 3090 produces significantly more tokens per second than the dual 3060s, which translates into faster responses. The host emphasizes that the 3090 sustains this high processing speed, which is crucial for AI applications. As testing progresses through several models, including Gemma and DeepCoder, the 3090 consistently beats the dual 3060s on both response tokens per second and prompt tokens per second.
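The two metrics compared here, prompt tokens per second and response tokens per second, are ratios of token counts to elapsed time. The sketch below assumes the models are served by Ollama (the summary does not name the runtime), whose final API reply reports `prompt_eval_count`, `prompt_eval_duration`, `eval_count`, and `eval_duration`, with durations in nanoseconds. The sample numbers are hypothetical, not results from the video.

```python
# Assumption: the models run under Ollama, whose final /api/generate or
# /api/chat reply includes token counts and durations in nanoseconds.

NS_PER_S = 1_000_000_000


def tokens_per_second(count: int, duration_ns: int) -> float:
    """Tokens divided by elapsed seconds; 0.0 if no time was recorded."""
    if duration_ns <= 0:
        return 0.0
    return count / (duration_ns / NS_PER_S)


def throughput(stats: dict) -> dict:
    """Prompt (prefill) and response (generation) rates from one reply."""
    return {
        "prompt_tps": tokens_per_second(stats["prompt_eval_count"],
                                        stats["prompt_eval_duration"]),
        "response_tps": tokens_per_second(stats["eval_count"],
                                          stats["eval_duration"]),
    }


def speedup(new_tps: float, old_tps: float) -> float:
    """How many times faster `new_tps` is than the `old_tps` baseline."""
    if old_tps <= 0:
        raise ValueError("baseline throughput must be positive")
    return new_tps / old_tps


if __name__ == "__main__":
    # Hypothetical numbers for illustration only, not results from the video.
    sample = {
        "prompt_eval_count": 500, "prompt_eval_duration": 250_000_000,
        "eval_count": 300, "eval_duration": 10_000_000_000,
    }
    print(throughput(sample))  # {'prompt_tps': 2000.0, 'response_tps': 30.0}
```

Running the same prompt on both setups and passing the two rates to `speedup` gives the "times faster" figure used when comparing the cards.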

Some of the results are surprising, particularly with larger models such as Gemma 3 27B, where the gap between the 3090 and the dual 3060s is stark: in some cases the 3090 reaches nearly 10 times the prompt tokens per second of the dual 3060s. The video also discusses what these findings mean for anyone choosing a GPU setup for AI tasks, emphasizing the 3090's advantages for more demanding applications.

The host also answers viewer questions from previous videos, such as how to update the LXC container set up with the community helper scripts and why power consumption matters when running high-wattage GPUs. On stability, the host reports that the Z440 handles the 3090's power requirements without crashing, as long as users keep an eye on the total wattage drawn by the system.
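Keeping an eye on total wattage amounts to a simple power budget. This hypothetical helper subtracts estimated component loads from a fraction of the PSU rating. The 700 W PSU, the 120 W CPU and 60 W "other" figures, and the 80% ceiling are assumptions for illustration; 350 W is NVIDIA's rated board power for the RTX 3090. Actual GPU draw can be read with `nvidia-smi --query-gpu=power.draw --format=csv`, and `nvidia-smi -pl <watts>` can lower the card's power limit if the budget is tight.

```python
from __future__ import annotations


def power_headroom(psu_watts: float, loads_watts: dict[str, float],
                   max_fraction: float = 0.8) -> float:
    """Watts left below `max_fraction` of the PSU rating.

    A negative result means the estimated load exceeds the chosen ceiling.
    `max_fraction` is an assumed safety margin, not an HP or NVIDIA figure.
    """
    budget = psu_watts * max_fraction
    return budget - sum(loads_watts.values())


if __name__ == "__main__":
    # All figures are assumptions for illustration; measure your own system.
    loads = {
        "rtx_3090": 350.0,  # NVIDIA rated board power for the RTX 3090
        "cpu": 120.0,       # hypothetical CPU load
        "other": 60.0,      # hypothetical drives, fans, RAM, board
    }
    headroom = power_headroom(700.0, loads)  # 700 W PSU is an assumption
    status = "OK" if headroom >= 0 else "over budget"
    print(f"headroom: {headroom:.0f} W ({status})")
```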

In conclusion, the video is a thorough benchmark comparison of an RTX 3090 against dual RTX 3060s in an HP Z440 server. The host encourages viewers to weigh the 3090's performance benefits for AI applications, especially when working with larger models. The video closes with a call to like, share, and comment, and hints at future content on mixing GPUs and optimizing AI inference setups.